Video:

Drive links: https://drive.google.com/file/d/1tj29Zt4eafPmq3sbn2XQxWIdDe19ptf9/view?usp=sharing

https://drive.google.com/file/d/1iaTtnn3k2h35bS9jtLQnl48PngWvzTUW/view?usp=sharing

User Testing Documentation for the Project

To evaluate the user experience of the game, the following steps were conducted:

Participants: Two users were asked to play the game without prior instructions.

Environment: Each participant was given access to the joystick and mouse, along with the visual display of the game.

Recording: Gameplay sessions were recorded, capturing both screen activity and user interactions with the joystick and mouse.

Feedback: After the session, participants were asked to share their thoughts on the experience, including points of confusion and enjoyment.

Observations from User Testing

Most users instinctively tried to use the joystick to control the player.

Mapping joystick movement to player control was understood quickly.

Losing a life on hitting a wall was unexpected for both players, but they quickly learned to avoid the walls and play more carefully.

The dual control option (mouse click and joystick button) for starting the game worked well.

Power-ups:

Participants found the power-up visuals engaging and intuitive.

Some users initially struggled to understand the effects of power-ups (e.g., what happens when picking up a turtle or a lightning bolt), but once they passed through a power-up, they understood its effect.

Game Objectives:

The goal (reaching the endpoint) was clear to all participants.

Participants appreciated the timer and “Lowest Time” feature as a challenge metric.

What Worked Well

Joystick Integration: Smooth player movement with joystick controls was highly praised.

Visual Feedback: Power-up icons and heart-based life indicators were intuitive.

Engagement: Participants were motivated by the timer and the ability to beat their lowest time.

Obstacle Design: The maze structure was well-received for its balance of challenge and simplicity.

Areas for Improvement:

Power-up Explanation:

Players were unclear about the effects of power-ups until they experienced them.

I think this part does not need to change, as it adds to the puzzle aspect of the game and makes repeat playthroughs more fun.

Collision Feedback:

When players collided with walls or lost a life, the feedback was clear: they could hear the sound effect and see a heart disappear at the top of the screen.

Lessons Learned

Need for Minimal Guidance: I like the challenge of playing the game for the first time without instructions. The lack of guidance encourages players to explore, which increases their curiosity about the game.

Engaging Visuals and Sounds: Participants valued intuitive design elements like heart indicators and unique power-up icons.

Changes Implemented Based on Feedback

The player speed was decreased slightly, as the high speed was leading to many accidental deaths. The volume of the life-lost sound was also increased to indicate more clearly what happens when a player picks up a lose-life power-up or collides with a wall.

GAME:

Concept

The project is an interactive maze game that integrates an Arduino joystick controller to navigate a player through obstacles while collecting or avoiding power-ups. The objective is to reach the endpoint in the shortest possible time, with features like power-ups that alter gameplay dynamics (speed boosts, slowdowns, life deductions) and a life-tracking system with visual feedback.

- Player Movement: Controlled via the Arduino joystick.

- Game Start/Restart: Triggered by a joystick button press or mouse click.

- Power-Ups: Randomly spawned collectibles that provide advantages or challenges.

- Objective: Navigate the maze, avoid obstacles, and reach the goal in the shortest possible time.
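The power-up effects can be summarised as a small state update. This is a minimal sketch assuming a plain `{ speed, lives }` object in place of the sketch's global `player.speed` and `lives`; the numbers (speed +2, speed -1 with a floor of 2, one life lost) are taken from `PowerUp.applyEffect()` in the code, and `applyPowerUp` is a hypothetical helper name.

```javascript
// Hypothetical pure version of PowerUp.applyEffect(): it returns a new state
// object instead of mutating the sketch's globals.
function applyPowerUp(state, type) {
  switch (type) {
    case "speedUp":
      return { ...state, speed: state.speed + 2 };
    case "slowDown":
      return { ...state, speed: Math.max(state.speed - 1, 2) }; // never below 2
    case "loseLife":
      return { ...state, lives: state.lives - 1 };
    default:
      return state; // unknown type: no effect
  }
}

// The player starts with speed 5 and 3 lives
let s = { speed: 5, lives: 3 };
for (const type of ["slowDown", "slowDown", "slowDown", "slowDown", "loseLife"]) {
  s = applyPowerUp(s, type);
}
console.log(s); // { speed: 2, lives: 2 }
```

Keeping the effect separate from drawing makes it easy to tweak the balance (for example the +2 boost) without touching the p5 code.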

The game is implemented using p5.js for rendering visuals and managing game logic, while Arduino provides the physical joystick interface. Serial communication bridges the joystick inputs with the browser-based game.
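Each message from the Arduino is one text line of the form `x,y,button` (for example `500,750,1`). As a minimal sketch, assuming malformed lines should simply be ignored, a browser-side parser could look like this; `parseJoystickLine` is a hypothetical name, and a button value of `0` counts as pressed because the Arduino reads the pin with `INPUT_PULLUP`.

```javascript
// Hypothetical parser for one "x,y,button" line from the Arduino.
// Returns null for incomplete or non-numeric lines (e.g. a line cut off
// while the serial connection was still opening).
function parseJoystickLine(line) {
  const parts = line.trim().split(",");
  if (parts.length !== 3 || parts.some(p => p.trim() === "")) return null;
  const [x, y, button] = parts.map(Number);
  if (![x, y, button].every(Number.isFinite)) return null;
  // INPUT_PULLUP: the pin reads LOW (0) while the button is held down
  return { x, y, pressed: button === 0 };
}

console.log(parseJoystickLine("500,750,1\r\n")); // { x: 500, y: 750, pressed: false }
console.log(parseJoystickLine("12,980,0")); // { x: 12, y: 980, pressed: true }
console.log(parseJoystickLine("50,7")); // null
```

The sketch's own `handleSerialData()` splits on commas the same way but converts fields with `Number()` without checking for `NaN`; rejecting such lines keeps a garbled message from freezing the joystick state.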

Design

Joystick Input:

X and Y axes: Control player movement.

Button press: Start or restart the game.
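The axis-to-movement mapping uses a dead zone: readings arrive in the range 0-1000 with the resting position near 500, and only values below 400 or above 600 move the player (the thresholds used in the sketch's `Player.update()`). A minimal standalone sketch of that rule, where `axisDirection` and `joystickToStep` are hypothetical helpers:

```javascript
// Hypothetical helpers mirroring the thresholds in Player.update():
// readings between 400 and 600 count as "centred", so a joystick that does
// not rest exactly at 500 does not make the player drift.
const DEAD_LOW = 400;
const DEAD_HIGH = 600;

function axisDirection(value) {
  if (value < DEAD_LOW) return -1;
  if (value > DEAD_HIGH) return 1;
  return 0;
}

function joystickToStep(jx, jy, speed) {
  // Screen y grows downwards, so a low Y reading moves the player up
  return { dx: axisDirection(jx) * speed, dy: axisDirection(jy) * speed };
}

console.log(joystickToStep(500, 520, 5)); // { dx: 0, dy: 0 }
console.log(joystickToStep(100, 500, 5)); // { dx: -5, dy: 0 }
```

Because each axis is handled independently, a diagonal push moves the player by `speed` on both axes, which is about 1.41 times faster than a straight push.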

Visuals:

Player represented as a black circle.

Heart icons track lives.

Power-ups visually distinct (icon-based).
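The heart count drops only once per wall contact because `Player.update()` applies a cooldown: after a wall hit costs a life, further hits are ignored until more than `cooldownDuration` (1000 ms) has passed. The sketch below models that per-frame rule with an explicit clock so it can run without p5; `createLifeCounter` and `frame()` are hypothetical names, and the order of the two checks follows `Player.update()`.

```javascript
// Hypothetical per-frame model of the wall-hit cooldown in Player.update().
function createLifeCounter(lives, cooldownMs) {
  let cooldownActive = false;
  let lastHit = 0;
  return {
    get lives() {
      return lives;
    },
    // Call once per frame; returns true if this frame cost a life.
    frame(now, touchingWall) {
      let lost = false;
      if (touchingWall && !cooldownActive) {
        lives--;
        lastHit = now;
        cooldownActive = true;
        lost = true;
      }
      // Same order as Player.update(): the cooldown is cleared after the hit check
      if (cooldownActive && now - lastHit > cooldownMs) cooldownActive = false;
      return lost;
    },
  };
}

// Pressing against a wall for 2.5 s at roughly 60 frames per second (16 ms steps)
const counter = createLifeCounter(3, 1000);
const lossTimes = [];
for (let t = 0; t <= 2500; t += 16) {
  if (counter.frame(t, true)) lossTimes.push(t);
}
console.log(lossTimes); // [ 0, 1024, 2048 ]
console.log(counter.lives); // 0
```

Holding the player against a wall therefore still costs a life roughly every second. The lose-life power-up is not covered by this cooldown: `PowerUp.applyEffect()` subtracts a life directly.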

Feedback:

Life loss triggers sound effects and visual feedback.

Timer displays elapsed and lowest times.

Game-over and win screens provide clear prompts.
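The timer and "Lowest Time" display come down to two small pieces: formatting milliseconds as seconds with two decimals, and keeping the best time so far, starting from `Infinity` so the first completion is always a record (as in `endGame()`). A sketch of both, using hypothetical helper names:

```javascript
// Hypothetical helpers mirroring the timer code in draw() and endGame().
function formatSeconds(ms) {
  return (ms / 1000).toFixed(2);
}

function formatLowest(lowestMs) {
  // Before any completion the record is Infinity, shown as "N/A"
  return lowestMs < Infinity ? formatSeconds(lowestMs) : "N/A";
}

// Returns the (possibly updated) record and whether this run beat it.
function recordRun(lowestMs, elapsedMs) {
  const isNewRecord = elapsedMs < lowestMs;
  return { lowest: isNewRecord ? elapsedMs : lowestMs, isNewRecord };
}

let best = Infinity;
console.log(`Lowest Time: ${formatLowest(best)}`);
for (const run of [65432, 70100, 48250]) {
  const result = recordRun(best, run);
  best = result.lowest;
  console.log(`Time: ${formatSeconds(run)} seconds, new record: ${result.isNewRecord}`);
}
console.log(`Lowest Time: ${formatLowest(best)}`);
```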

Arduino Code:

```cpp
const int buttonPin = 7; // the pin connected to the joystick button (SW)

int buttonState = HIGH; // assume the button is not pressed initially

void setup() {
  Serial.begin(9600); // start serial communication
  pinMode(buttonPin, INPUT_PULLUP); // button pin as input with the internal pull-up (reads LOW when pressed)
}

void loop() {
  int xPos = analogRead(A0); // joystick X-axis
  int yPos = analogRead(A1); // joystick Y-axis
  buttonState = digitalRead(buttonPin); // read the button state

  // Map the analog readings (0-1023) to 0-1000
  int mappedX = map(xPos, 0, 1023, 0, 1000);
  int mappedY = map(yPos, 0, 1023, 0, 1000);

  // Send joystick values and button state as CSV (e.g., "500,750,1")
  Serial.print(mappedX);
  Serial.print(",");
  Serial.print(mappedY);
  Serial.print(",");
  Serial.println(buttonState);

  delay(50); // adjust to change how often data is sent (roughly 20 times per second)
}
```
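Arduino's `map()` works in integer arithmetic: it computes `(x - in_min) * (out_max - out_min) / (in_max - in_min) + out_min`, and the division truncates. So the centre reading 512 becomes 500 and full scale 1023 becomes 1000. A JavaScript sketch of the same calculation (the name `arduinoMap` is hypothetical) helps check which values the browser will actually receive:

```javascript
// Hypothetical JavaScript equivalent of Arduino's integer map().
// Math.trunc reproduces C integer division, which truncates toward zero.
function arduinoMap(x, inMin, inMax, outMin, outMax) {
  return Math.trunc(((x - inMin) * (outMax - outMin)) / (inMax - inMin)) + outMin;
}

for (const raw of [0, 409, 410, 512, 614, 615, 1023]) {
  console.log(raw, "->", arduinoMap(raw, 0, 1023, 0, 1000));
}
```

With the 400/600 thresholds in `Player.update()`, raw readings from 410 to 614 map into 400-600 and therefore fall inside the dead zone.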

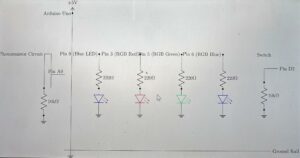

The circuit connects the joystick (including its push button) to the Arduino, and includes LEDs that indicate the remaining lives.

- Joystick:
  - X-axis: A0
  - Y-axis: A1
  - Click (SW): digital pin 7
  - VCC and GND: power for the joystick module
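The p5.js sketch reports the remaining lives to the Arduino as lines such as `L3`, and sends `END` when a game finishes (the Arduino code above does not include the receiving side). Because `draw()` writes the `L` message on every frame, up to 60 times a second by default, one option is to send it only when the count changes. A minimal sketch, assuming a `write` function with the same role as `serial.write`; `makeLivesReporter` is hypothetical:

```javascript
// Hypothetical helper: wraps a write function (in the sketch this would be
// serial.write) and only sends "L<lives>\n" when the value changes.
function makeLivesReporter(write) {
  let lastSent = null;
  return function report(lives) {
    if (lives === lastSent) return false; // unchanged: nothing sent
    write(`L${lives}\n`);
    lastSent = lives;
    return true;
  };
}

// Usage with a fake port that records what would be sent
const sent = [];
const reportLives = makeLivesReporter(msg => sent.push(msg));
for (const lives of [3, 3, 3, 2, 2, 1]) reportLives(lives);
console.log(sent);
```

After a restart the count jumps back to 3, which differs from the last value sent, so a fresh message goes out without any extra code.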

The p5.js sketch renders the maze, player, and power-ups, while handling game logic and serial communication.

Key features:

- Player Class: Handles movement, collision detection, and rendering.

- Power-Up Class: Manages random spawning, effects, and rendering.

- Obstacle Class: Defines each maze wall as a rectangle and draws it with the tiled wall pattern.

- Joystick Input Handling: Updates player movement based on Arduino input.

- Game Loops: Includes logic for starting, restarting, and completing the game.
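The random placement in `spawnPowerUp()` is rejection sampling: pick a random point, reject it if it overlaps a wall or lies within 60 px of another power-up, and try again. The sketch's `while` loop has no upper bound, so a crowded maze could in principle stall a frame. A variant with an attempt cap and an injectable random source (so it can be tested) might look like this; `findSpawnPoint`, `maxAttempts` and the plain-object walls are assumptions, and the overlap test is a circle-rectangle check standing in for p5.collide2D's `collideRectCircle`.

```javascript
// Hypothetical rejection sampler modelled on spawnPowerUp().
// walls: [{ x, y, w, h }], existing: [{ x, y }], rand: () => number in [0, 1)
function findSpawnPoint(width, height, walls, existing, rand, maxAttempts = 1000) {
  const radius = 15; // power-up icons are 30 px across
  const margin = 50; // same 50 px border as spawnPowerUp()
  const minGap = 60; // minimum distance between power-ups

  // Circle-rectangle overlap via the closest point of each rectangle
  const hitsWall = (px, py) =>
    walls.some(r => {
      const dx = px - Math.min(Math.max(px, r.x), r.x + r.w);
      const dy = py - Math.min(Math.max(py, r.y), r.y + r.h);
      return dx * dx + dy * dy <= radius * radius;
    });
  const tooClose = (px, py) =>
    existing.some(p => Math.hypot(p.x - px, p.y - py) < minGap);

  for (let i = 0; i < maxAttempts; i++) {
    const x = margin + rand() * (width - 2 * margin);
    const y = margin + rand() * (height - 2 * margin);
    if (!hitsWall(x, y) && !tooClose(x, y)) return { x, y };
  }
  return null; // no free spot found; the caller can try again later
}

// Deterministic stand-in for Math.random so the example is repeatable
function sequence(values) {
  let i = 0;
  return () => values[i++ % values.length];
}

const walls = [
  { x: 0, y: 0, w: 1450, h: 18 }, // a top wall
  { x: 700, y: 0, w: 18, h: 900 }, // a vertical wall
];
const existing = [{ x: 200, y: 460 }];

// 1st try lands inside the vertical wall, 2nd is too close to the
// existing power-up, 3rd is accepted at (320, 250)
const spot = findSpawnPoint(1450, 900, walls, existing, sequence([0.488, 0.5, 0.1, 0.5, 0.2, 0.25]));
console.log(spot);
```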

Code:

```javascript
// Libraries loaded alongside p5.js: p5.sound for loadSound(),
// p5.serialport for p5.SerialPort (it reaches the board through the
// p5.serialcontrol app), and p5.collide2D for collideRectCircle().

let player; // player variable
let obstacles = []; // list of obstacles
const OBSTACLE_THICKNESS = 18; // thickness of each wall rectangle
let rectImg, startImg; // maze pattern and start screen
let obstaclesG; // pre-rendered obstacle course pattern for performance

let gameStarted = false; // game started flag
let gameEnded = false; // game ended flag

let startTime = 0; // start time
let elapsedTime = 0; // time passed since start of level
let lowestTime = Infinity; // Infinity so the first completion becomes the new lowest time

let lives = 3; // player starts with 3 lives
let collisionCooldown = false; // tracks if cooldown is active
let cooldownDuration = 1000; // cooldown duration in milliseconds
let lastCollisionTime = 0; // timestamp of the last collision

let heartImg; // life heart image
let bgMusic;
let lifeLostSound;
let winSound;

let serial; // for the Arduino connection
let joystickX = 500; // default joystick X position (centre)
let joystickY = 500; // default joystick Y position (centre)

let powerUps = []; // array to store power-ups
let powerUpSpawnInterval = 10000; // interval between power-up spawns, in milliseconds
let lastPowerUpTime = 0; // time when the last power-up was spawned
let speedUpImg, slowDownImg, loseLifeImg;

let buttonPressed = false;
```

```javascript
function preload() {
  rectImg = loadImage('pattern.png'); // obstacle pattern
  startImg = loadImage('start.png'); // start screen image
  heartImg = loadImage('heart.png'); // heart image
  bgMusic = loadSound('background_music.mp3'); // background music
  lifeLostSound = loadSound('life_lost.wav'); // sound for losing a life
  winSound = loadSound('win_sound.wav'); // sound for winning
  speedUpImg = loadImage('speed_up.png'); // power-up icons
  slowDownImg = loadImage('slow_down.png');
  loseLifeImg = loadImage('lose_life.png');
}

function setup() {
  createCanvas(1450, 900);

  serial = new p5.SerialPort(); // initialize SerialPort
  serial.open('/dev/tty.usbmodem1101'); // the Arduino's port on this machine; change it to match yours
  serial.on('data', handleSerialData);

  player = new Player(30, 220, 15, 5); // maze starting coordinate for player

  // Pre-render the maze walls once into an off-screen buffer
  obstaclesG = createGraphics(1450, 900);
  obstaclesG.background(220);

  addObstacles(); // adds all the obstacles during setup

  // draw each obstacle onto the buffer
  for (let obs of obstacles) {
    obs.showOnGraphics(obstaclesG);
  }

  bgMusic.loop(); // background music starts
}
```

```javascript
function spawnPowerUp() {
  let x, y;
  let validPosition = false;

  // Keep picking random points until one is valid
  while (!validPosition) {
    x = random(50, width - 50);
    y = random(50, height - 50);

    // a valid position does not overlap any obstacle (30 px diameter)
    // and is at least 60 px from every existing power-up
    validPosition =
      !obstacles.some(obs => collideRectCircle(obs.x, obs.y, obs.w, obs.h, x, y, 30)) &&
      !powerUps.some(pu => dist(pu.x, pu.y, x, y) < 60);
  }

  const types = ["speedUp", "slowDown", "loseLife"];
  const type = random(types); // one random type of power-up
  powerUps.push(new PowerUp(x, y, type)); // add to power-up array
}

function handlePowerUps() {
  // Spawn a new power-up if the interval has passed
  if (millis() - lastPowerUpTime > powerUpSpawnInterval) {
    spawnPowerUp();
    lastPowerUpTime = millis(); // reset the spawn timer
  }

  // Display and check for player interaction with power-ups
  // (iterate backwards so splice() does not skip elements)
  for (let i = powerUps.length - 1; i >= 0; i--) {
    const powerUp = powerUps[i];
    powerUp.display();
    if (powerUp.collidesWith(player)) {
      powerUp.applyEffect(); // apply the effect of the power-up
      powerUps.splice(i, 1); // remove the collected power-up
    }
  }
}
```

```javascript
function draw() {
  if (!gameStarted) {
    background(220);
    image(startImg, 0, 0, width, height);
    noFill();
    stroke(0);

    // Start the game with the joystick button or a click on the start button area
    if (buttonPressed || (mouseIsPressed && mouseX > 525 && mouseX < 915 && mouseY > 250 && mouseY < 480)) {
      gameStarted = true;
      startTime = millis();
    }
  } else if (!gameEnded) {
    background(220);
    image(obstaclesG, 0, 0);

    player.update(obstacles); // update player position (may call loseGame())
    // noLoop() does not stop the frame that is already running, so return here
    // if the game just ended; otherwise the rest of this frame draws over the
    // "Level Lost!" screen
    if (gameEnded) return;

    handlePowerUps(); // manage power-ups (a lose-life power-up can also end the game)
    if (gameEnded) return;

    player.show(); // display the player

    // Update and display elapsed time, hearts, etc.
    elapsedTime = millis() - startTime;
    serial.write(`L${lives}\n`); // send remaining lives to the Arduino
    displayHearts();

    fill(0);
    textSize(22);
    textAlign(LEFT);
    text(`Time: ${(elapsedTime / 1000).toFixed(2)} seconds`, 350, 50);
    textAlign(RIGHT);
    text(
      `Lowest Time: ${lowestTime < Infinity ? (lowestTime / 1000).toFixed(2) : "N/A"}`,
      width - 205,
      50
    );

    // Check if the player reaches the goal
    if (dist(player.x, player.y, 1440, 674) < player.r) {
      endGame();
    }
  } else if (gameEnded) {
    // Restart with the joystick button or a mouse click (only reached while the
    // draw loop is running; endGame() and loseGame() call noLoop())
    if (buttonPressed || mouseIsPressed) {
      restartGame();
    }
  }
}
```

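```javascript
function handleSerialData() {
  let data = serial.readLine().trim(); // read and trim incoming data
  if (data.length > 0) {
    let values = data.split(","); // split "x,y,button" by comma
    if (values.length === 3) {
      joystickX = Number(values[0]); // update joystick X
      joystickY = Number(values[1]); // update joystick Y
      buttonPressed = Number(values[2]) === 0; // update button state (0 = pressed, INPUT_PULLUP)

      // Serial events still fire after noLoop(), so the joystick button is
      // handled here as well; this lets it restart the game from the end screen
      checkJoystickClick();
    }
  }
}
```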

```javascript
function displayHearts() { // display lives
  const heartSize = 40; // size of each heart
  const startX = 650; // x position of the first heart
  const startY = 40; // y position for hearts
  // only draws as many hearts as there are lives left
  for (let i = 0; i < lives; i++) {
    image(heartImg, startX + i * (heartSize + 10), startY, heartSize, heartSize);
  }
}

function endGame() {
  gameEnded = true;
  noLoop(); // stop the draw loop
  winSound.play(); // play the win sound
  serial.write("END\n");

  // check if the current elapsed time is a new record
  const isNewRecord = elapsedTime < lowestTime;
  if (isNewRecord) {
    lowestTime = elapsedTime; // update lowest time
  }

  // Display end screen
  background(220);
  fill(0);
  textSize(36);
  textAlign(CENTER, CENTER);
  text("Congratulations! You reached the goal!", width / 2, height / 2 - 100);
  textSize(24);
  text(`Time: ${(elapsedTime / 1000).toFixed(2)} seconds`, width / 2, height / 2 - 50);

  // Display "New Record!" message if applicable
  if (isNewRecord) {
    textSize(28);
    fill(255, 0, 0); // red for emphasis
    text("New Record!", width / 2, height / 2 - 150);
  }

  textSize(24);
  fill(0); // reset text color
  text("Click anywhere to restart", width / 2, height / 2 + 50);
}

function mouseClicked() {
  if (!gameStarted) {
    // start the game if clicked in the start button area
    if (mouseX > 525 && mouseX < 915 && mouseY > 250 && mouseY < 480) {
      gameStarted = true;
      startTime = millis();
    }
  } else if (gameEnded) {
    restartGame(); // mouse events still fire after noLoop()
  }
}

function checkJoystickClick() {
  if (buttonPressed) {
    if (!gameStarted) {
      gameStarted = true;
      startTime = millis();
    } else if (gameEnded) {
      restartGame();
    }
  }
}

function restartGame() {
  gameStarted = true;
  gameEnded = false;
  lives = 3;
  collisionCooldown = false; // clear any cooldown left over from the last run
  powerUps = []; // clear all power-ups
  player = new Player(30, 220, 15, 5); // reset player position and speed
  startTime = millis(); // reset start time
  loop();
  // endGame() leaves the music playing and loseGame() stops it; only restart
  // it when stopped so two copies do not play on top of each other
  if (!bgMusic.isPlaying()) bgMusic.loop();
}

function loseGame() {
  gameEnded = true; // end the game
  noLoop(); // stop the draw loop
  bgMusic.stop();
  serial.write("END\n");

  // Display level lost message
  background(220);
  fill(0);
  textSize(36);
  textAlign(CENTER, CENTER);
  text("Level Lost!", width / 2, height / 2 - 100);
  textSize(24);
  text("You ran out of lives!", width / 2, height / 2 - 50);
  text("Click anywhere to restart", width / 2, height / 2 + 50);
}
```

```javascript
function keyPressed() { // keyboard controls: W/A/S/D move, F toggles fullscreen
  let k = key.toLowerCase();
  if (k === 'w') player.moveUp(true);
  if (k === 'a') player.moveLeft(true);
  if (k === 's') player.moveDown(true);
  if (k === 'd') player.moveRight(true);
  if (k === 'f') fullscreen(!fullscreen());
}

function keyReleased() { // stop movement once the key is released
  let k = key.toLowerCase();
  if (k === 'w') player.moveUp(false);
  if (k === 'a') player.moveLeft(false);
  if (k === 's') player.moveDown(false);
  if (k === 'd') player.moveRight(false);
}
```

```javascript
class Player { // player class
  constructor(x, y, r, speed) {
    this.x = x;
    this.y = y;
    this.r = r;
    this.speed = speed;
    // set by the W/A/S/D key handlers
    this.movingUp = false;
    this.movingDown = false;
    this.movingLeft = false;
    this.movingRight = false;
  }

  update(obsArray) {
    let oldX = this.x;
    let oldY = this.y;

    // move with the joystick (outside the 400-600 dead zone) or the W/A/S/D keys
    if (joystickX < 400 || this.movingLeft) this.x -= this.speed; // move left
    if (joystickX > 600 || this.movingRight) this.x += this.speed; // move right
    if (joystickY < 400 || this.movingUp) this.y -= this.speed; // move up
    if (joystickY > 600 || this.movingDown) this.y += this.speed; // move down

    // constrain to canvas
    this.x = constrain(this.x, this.r, width - this.r);
    this.y = constrain(this.y, this.r, height - this.r);

    // restrict movement if colliding with obstacles
    if (this.collidesWithObstacles(obsArray)) {
      this.x = oldX; // revert to the previous position
      this.y = oldY;

      // deduct a life only if not in cooldown, so one wall contact
      // does not drain all lives in quick succession
      if (!collisionCooldown) {
        lives--;
        lastCollisionTime = millis(); // record the time of this collision
        collisionCooldown = true; // activate cooldown
        lifeLostSound.play(); // play life lost sound
        if (lives <= 0) {
          loseGame(); // out of lives
        }
      }
    }

    // check if the cooldown period has elapsed
    if (collisionCooldown && millis() - lastCollisionTime > cooldownDuration) {
      collisionCooldown = false; // reset cooldown
    }
  }

  show() { // display function
    fill(0);
    ellipse(this.x, this.y, this.r * 2);
  }

  collidesWithObstacles(obsArray) { // true if the player touches any obstacle
    for (let obs of obsArray) {
      if (this.collidesWithRect(obs.x, obs.y, obs.w, obs.h)) return true;
    }
    return false;
  }

  // Circle-rectangle test: find the point of the rectangle closest to the
  // player's centre; a collision occurs if it is closer than the radius
  collidesWithRect(rx, ry, rw, rh) {
    let closestX = constrain(this.x, rx, rx + rw);
    let closestY = constrain(this.y, ry, ry + rh);
    let distX = this.x - closestX;
    let distY = this.y - closestY;
    return sqrt(distX ** 2 + distY ** 2) < this.r;
  }

  moveUp(state) {
    this.movingUp = state;
  }

  moveDown(state) {
    this.movingDown = state;
  }

  moveLeft(state) {
    this.movingLeft = state;
  }

  moveRight(state) {
    this.movingRight = state;
  }
}
```

```javascript
class Obstacle { // obstacle class
  constructor(x, y, length, horizontal) {
    this.x = x;
    this.y = y;
    this.w = horizontal ? length : OBSTACLE_THICKNESS;
    this.h = horizontal ? OBSTACLE_THICKNESS : length;
  }

  showOnGraphics(pg) { // repeat the obstacle pattern image across the rectangle
    for (let xPos = this.x; xPos < this.x + this.w; xPos += rectImg.width) {
      for (let yPos = this.y; yPos < this.y + this.h; yPos += rectImg.height) {
        pg.image(
          rectImg,
          xPos,
          yPos,
          min(rectImg.width, this.x + this.w - xPos), // the last tile is squeezed
          min(rectImg.height, this.y + this.h - yPos) // to end at the wall's edge
        );
      }
    }
  }
}

class PowerUp {
  constructor(x, y, type) {
    this.x = x;
    this.y = y;
    this.type = type; // 'speedUp', 'slowDown' or 'loseLife'
    this.size = 30; // size of the power-up image
  }

  display() {
    let imgToDisplay;
    if (this.type === "speedUp") imgToDisplay = speedUpImg;
    else if (this.type === "slowDown") imgToDisplay = slowDownImg;
    else if (this.type === "loseLife") imgToDisplay = loseLifeImg;
    // (x, y) is the centre, so offset the image by half its size
    image(imgToDisplay, this.x - this.size / 2, this.y - this.size / 2, this.size, this.size);
  }

  collidesWith(player) { // circle-circle overlap test
    return dist(this.x, this.y, player.x, player.y) < player.r + this.size / 2;
  }

  applyEffect() {
    if (this.type === "speedUp") player.speed += 2;
    else if (this.type === "slowDown") player.speed = max(player.speed - 1, 2); // never below 2
    else if (this.type === "loseLife") {
      lives--;
      lifeLostSound.play();
      if (lives <= 0) loseGame();
    }
  }
}
```

```javascript
function addObstacles() {
  // All walls go into one array so the collision check can loop over them.
  // Obstacle(x, y, length, horizontal)

  // Outer walls (the player starts in the left-hand gap at y 200-250;
  // the goal at (1440, 674) sits in the right-hand gap at y 660-700)
  obstacles.push(new Obstacle(0, 0, 1500, true));
  obstacles.push(new Obstacle(0, 0, 200, false));
  obstacles.push(new Obstacle(0, 250, 600, false));
  obstacles.push(new Obstacle(1432, 0, 660, false));
  obstacles.push(new Obstacle(1432, 700, 200, false));
  obstacles.push(new Obstacle(0, 882, 1500, true));

  // Inner maze walls
  obstacles.push(new Obstacle(100, 0, 280, false));
  obstacles.push(new Obstacle(0, 400, 200, true));
  obstacles.push(new Obstacle(200, 90, 328, false));
  obstacles.push(new Obstacle(300, 0, 500, false));
  obstacles.push(new Obstacle(120, 500, 198, true));
  obstacles.push(new Obstacle(0, 590, 220, true));
  obstacles.push(new Obstacle(300, 595, 350, false));
  obstacles.push(new Obstacle(100, 680, 200, true));
  obstacles.push(new Obstacle(0, 770, 220, true));
  obstacles.push(new Obstacle(318, 400, 250, true));
  obstacles.push(new Obstacle(300, 592, 250, true));
  obstacles.push(new Obstacle(420, 510, 85, false));
  obstacles.push(new Obstacle(567, 400, 100, false));
  obstacles.push(new Obstacle(420, 680, 100, false));
  obstacles.push(new Obstacle(567, 750, 150, false));
  obstacles.push(new Obstacle(420, 680, 400, true));
  obstacles.push(new Obstacle(410, 90, 200, false));
  obstacles.push(new Obstacle(410, 90, 110, true));
  obstacles.push(new Obstacle(520, 90, 120, false));
  obstacles.push(new Obstacle(410, 290, 350, true));
  obstacles.push(new Obstacle(660, 90, 710, false));
  obstacles.push(new Obstacle(660, 90, 100, true));
  obstacles.push(new Obstacle(420, 680, 500, true));
  obstacles.push(new Obstacle(410, 290, 315, true));
  obstacles.push(new Obstacle(830, 0, 290, false));
  obstacles.push(new Obstacle(760, 200, 70, true));
  obstacles.push(new Obstacle(742, 200, 90, false));
  obstacles.push(new Obstacle(950, 120, 480, false));
  obstacles.push(new Obstacle(1050, 0, 200, false));
  obstacles.push(new Obstacle(1150, 120, 200, false));
  obstacles.push(new Obstacle(1250, 0, 200, false));
  obstacles.push(new Obstacle(1350, 120, 200, false));
  obstacles.push(new Obstacle(1058, 310, 310, true));
  obstacles.push(new Obstacle(760, 390, 300, true));
  obstacles.push(new Obstacle(660, 490, 200, true));
  obstacles.push(new Obstacle(760, 582, 200, true));
  obstacles.push(new Obstacle(920, 680, 130, false));
  obstacles.push(new Obstacle(1040, 310, 650, false));
  obstacles.push(new Obstacle(790, 760, 200, false));
  obstacles.push(new Obstacle(1150, 400, 400, false));
  obstacles.push(new Obstacle(1160, 560, 300, true));
  obstacles.push(new Obstacle(1325, 440, 200, false));
  obstacles.push(new Obstacle(1240, 325, 150, false));
  obstacles.push(new Obstacle(1150, 800, 200, true));
  obstacles.push(new Obstacle(1432, 850, 130, false));
  obstacles.push(new Obstacle(1240, 720, 200, true));
}
```
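`Obstacle.showOnGraphics()` covers each wall by stepping across it in tile-sized increments and shrinking the last tile in each direction so it ends at the wall's edge. The tile layout can be computed without p5; here is a sketch with a hypothetical `tileRects` helper, assuming a 32x32 pattern image (the actual size of `pattern.png` is not given in this write-up).

```javascript
// Hypothetical helper reproducing the loops in Obstacle.showOnGraphics():
// returns the destination rectangle of every tile drawn for one wall.
function tileRects(x, y, w, h, tileW, tileH) {
  const tiles = [];
  for (let xPos = x; xPos < x + w; xPos += tileW) {
    for (let yPos = y; yPos < y + h; yPos += tileH) {
      tiles.push({
        x: xPos,
        y: yPos,
        w: Math.min(tileW, x + w - xPos), // last column is narrower
        h: Math.min(tileH, y + h - yPos), // last row is shorter
      });
    }
  }
  return tiles;
}

// A vertical wall: Obstacle(0, 0, 200, false) is 18 px wide and 200 px tall
const tiles = tileRects(0, 0, 18, 200, 32, 32);
console.log(tiles.length); // 7
console.log(tiles[tiles.length - 1]); // { x: 0, y: 192, w: 18, h: 8 }
```

Because p5's `image()` with a smaller width and height scales the image rather than cropping it, the shortened tiles show a squeezed copy of the pattern; the longer `image()` form that also takes a source rectangle would crop instead.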

What I’m Proud Of

Joystick Integration: Seamless control with physical inputs enhances immersion.

Dynamic Power-Ups: Randomized, interactive power-ups add a strategic layer.

Visual and Auditory Feedback: Engaging effects create a polished gaming experience.

Robust Collision System: Accurate handling of obstacles and player interaction.
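The collision system treats the player as a circle and each wall as an axis-aligned rectangle: it clamps the circle's centre to the rectangle to find the closest point, then compares that distance with the radius. A standalone sketch of the same test, with the small variation of comparing squared distances to skip the square root; `clamp` and `circleHitsRect` are hypothetical names:

```javascript
// Hypothetical standalone version of Player.collidesWithRect().
function clamp(v, lo, hi) {
  return Math.min(Math.max(v, lo), hi);
}

// True if a circle (centre cx, cy, radius r) overlaps the rectangle
// with top-left corner (rx, ry), width rw and height rh.
function circleHitsRect(cx, cy, r, rx, ry, rw, rh) {
  const closestX = clamp(cx, rx, rx + rw); // closest point of the rectangle
  const closestY = clamp(cy, ry, ry + rh); // to the circle's centre
  const dx = cx - closestX;
  const dy = cy - closestY;
  // Same answer as sqrt(dx*dx + dy*dy) < r, for r >= 0
  return dx * dx + dy * dy < r * r;
}

// The player (r = 15) against the upper part of the left wall,
// Obstacle(0, 0, 200, false), which is 18 px wide and 200 px tall
console.log(circleHitsRect(30, 220, 15, 0, 0, 18, 200)); // false: starting position is clear
console.log(circleHitsRect(30, 205, 15, 0, 0, 18, 200)); // true: brushing the wall's bottom corner
```

Reverting to the previous position on a hit, as `Player.update()` does, means the player stops against a wall rather than sliding along it.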

Areas for Improvement:

- Tutorial/Instructions: Add an in-game tutorial to help new users understand power-ups and controls. This could be a simple maze containing every power-up and a wall for testing collisions.

- Level Design: Introduce multiple maze levels with increasing difficulty.

- Enhanced Feedback: Add animations for power-up collection and collisions.

Conclusion:

I had a lot of fun working on this project. Learning serial communication, and especially integrating all the power-up logic, was a great experience. With some polishing and more features, I think this could be a project I publish one day.