Concept:

For my final project, the idea changed from a wholesome, cuddly teddy bear that gets happier the tighter you hug it to a tragic ending for Daisy the duck, who gets sadder the harder users strangle her, up to the point where she dies. My intention was to create a wholesome stress reliever for finals season. It still worked in that many users found it stress relieving, just in a very aggressive manner. The change came from my struggle to find a proper spot for the flex sensors inside her; I found it easier to put them around her neck. The more pressure the user applies as they strangle Daisy, the more depressing her emotions become, which is shown through the p5 display.

Picture:

Implementation:

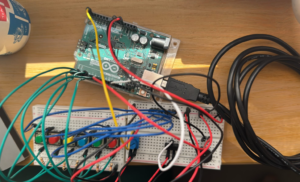

The physical side was relatively simple until I had to figure out how to attach the flex sensors to Daisy. I connected two flex sensors to the Arduino board and, with the help of several male-to-male and female-to-female jumper wires, lengthened the “legs” of the flex sensors from the breadboard, which gave me more freedom in where to place them. The difficult part was figuring out how to neatly attach the flex sensors to Daisy.

The initial idea was to cut her back open, place the sensors (and possibly the breadboard and Arduino) inside her, and then sew her shut. This turned out to be problematic because, in test hugs, people hugged her in very different ways. Some put pressure on her stomach, while others put pressure on her head. That would have made the project unreliable, because it only works when users apply force directly on the flex sensors. I was also concerned about the wires coming loose and detaching while inside Daisy. It would have made her really heavy too, with a thick, inconvenient cable coming out of her back to connect the Arduino board to my laptop.

I ended up revamping the idea and placing the flex sensors around her neck. Not only was this much easier to implement, since I just used some strong double-sided tape, it was also a lot easier for people to understand what to do without precise, detailed instructions on where to hold her. The double-sided tape was a lot stronger than expected and still held up after the showcase, although I’m not sure how well the flex sensors work now. I think they’ve become a little desensitized from all the pressure.

To cover the flex sensors and make everything look neater, I initially made a paper bow to attach to her. After looking around the IM Lab, though, I found a small piece of mesh fabric that made a perfect scarf for her and covered the flex sensors really naturally.

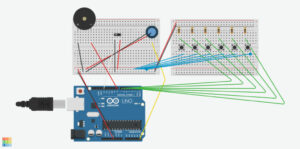

As for the p5 side of things, I took a lot from what I learned while making my midterm project, where I layered different pictures together. These layered backgrounds and pictures of Daisy represent and visualize her mood: each layer shows only when the value received from the Arduino falls within a certain range. The Arduino code calculates the average of the two flex sensors and sends it to p5, which displays different images depending on that number. For example, if the average is greater than 965, p5 layers and displays Daisy in a happy mood alongside a sunny rainbow background. She has four moods/stages: happy, neutral, sad, and dead. Each stage also has corresponding music that plays when the stage is unlocked. The p5 sketch also has a mood bar that matches the intensity of the pressure and changes color to match the mood it reaches.
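The stage logic described above can be sketched in plain JavaScript, roughly the way it might sit inside a p5 sketch. This is only an illustration, not the project’s actual code: the 965 cutoff for “happy” comes from the write-up, while the `neutral` and `sad` cutoffs, the `relaxed` and `squeezed` bounds, and the names `MOOD_THRESHOLDS`, `moodFor`, and `moodBarFill` are all assumptions made for the example. It also assumes that a harder squeeze produces a lower reading, which matches the numbers mentioned in this post.

```javascript
// Hypothetical cutoffs: only the 965 "happy" value comes from the write-up;
// the other two are placeholders chosen for illustration.
const MOOD_THRESHOLDS = { happy: 965, neutral: 850, sad: 750 };

// Map the averaged flex-sensor reading to one of Daisy's four stages.
// Assumes lower readings mean a harder squeeze.
function moodFor(average, t = MOOD_THRESHOLDS) {
  if (average > t.happy) return "happy";
  if (average > t.neutral) return "neutral";
  if (average > t.sad) return "sad";
  return "dead";
}

// Fraction of the mood bar to fill, from 0 (relaxed) to 1 (fully squeezed).
// `relaxed` and `squeezed` are assumed bounds for the sensor range.
function moodBarFill(average, relaxed = 1023, squeezed = 650) {
  const fraction = (relaxed - average) / (relaxed - squeezed);
  return Math.min(1, Math.max(0, fraction)); // clamp to [0, 1]
}
```

In a p5 `draw()` loop, the string returned by `moodFor` could pick which background, Daisy image, and soundtrack to show, and `moodBarFill` could scale the width of the mood bar.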

Arduino Code:

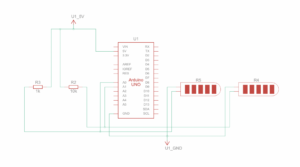

Schematic:

Challenges and What I’m Proud Of:

As mentioned earlier, I struggled a lot with the placement of the flex sensors. I really wanted to keep the wholesome concept of my initial idea, so I brainstormed ways to execute it, but I ended up changing it. I think the change was for the better, as the new idea grabbed people’s attention a lot more than I expected. It was really popular at the IM Showcase and everyone wanted to strangle poor Daisy, to the point where the flex sensor reading wouldn’t go lower than roughly 650. That made it really difficult for people to reach her “Sad” stage and even harder to kill her. I ended up tweaking the numbers a bit during the showcase so people could feel satisfied with reaching her “Dead” stage, but it wasn’t that way at the start of the showcase.
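One way to make that kind of tweak less manual would be to derive the cutoffs from the lowest reading the sensors can still reach. The sketch below is a hypothetical approach, not what I actually did at the showcase (I adjusted the numbers by hand). The function name `cutoffsForRange` and the `margin` parameter are assumptions for the example; the 965 and 650 values are the ones mentioned in this post.

```javascript
// Spread the stage cutoffs between the "happy" cutoff and the lowest
// reading the worn-out sensors can still produce, so that every stage
// stays reachable. Assumes lower readings mean a harder squeeze.
function cutoffsForRange(happyCutoff, lowestReading, margin = 15) {
  // Put the "dead" boundary slightly above the floor so it can be reached.
  const deadCutoff = lowestReading + margin;
  if (deadCutoff >= happyCutoff) {
    throw new RangeError("Sensor range is too narrow to fit all stages");
  }
  // Split the remaining span evenly between the neutral and sad stages.
  const step = (happyCutoff - deadCutoff) / 2;
  return {
    happy: happyCutoff,                        // above this: happy
    neutral: Math.round(happyCutoff - step),   // above this: neutral
    sad: Math.round(deadCutoff),               // above this: sad, else dead
  };
}

// With the values from this post: { happy: 965, neutral: 815, sad: 665 }
console.log(cutoffsForRange(965, 650));
```

The even split is just one design choice; the stages could just as well be weighted so that “Dead” takes more effort to reach.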

I’m really proud of this project overall and satisfied with the way it turned out, even though it was a complete 180 from my original idea. It did relieve a lot of people’s stress, just not in the way I intended or expected. It was really funny to watch everyone strangle this poor plushy just to reach the “Dead” stage, and to see the pure joy on people’s faces once they managed to kill her. I’m also proud that I managed to add the “Dead” stage and its matching music shortly before the showcase.

Future Improvement:

In the future, I would want to figure out how to prevent the flex sensors from “wearing out” so that their sensitivity isn’t affected. I would also want to add more flex sensors. With more surface area covered, people could strangle Daisy however they want without having to stay on one specific line for it to work.

Showcase:

The tragic aftermath of Daisy by the end of the IM Showcase. Very stiff.
