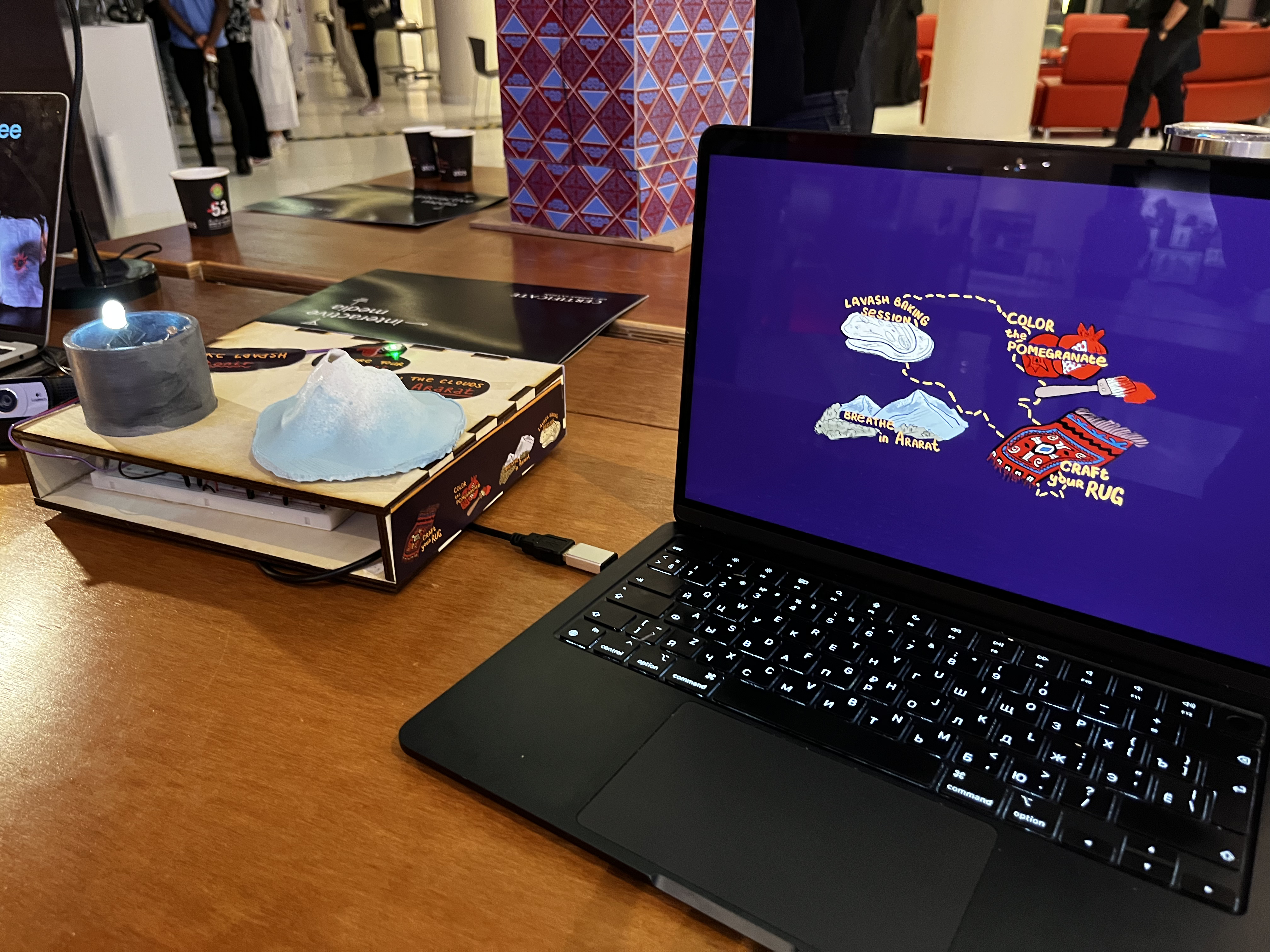

Finalized Concept & Progress

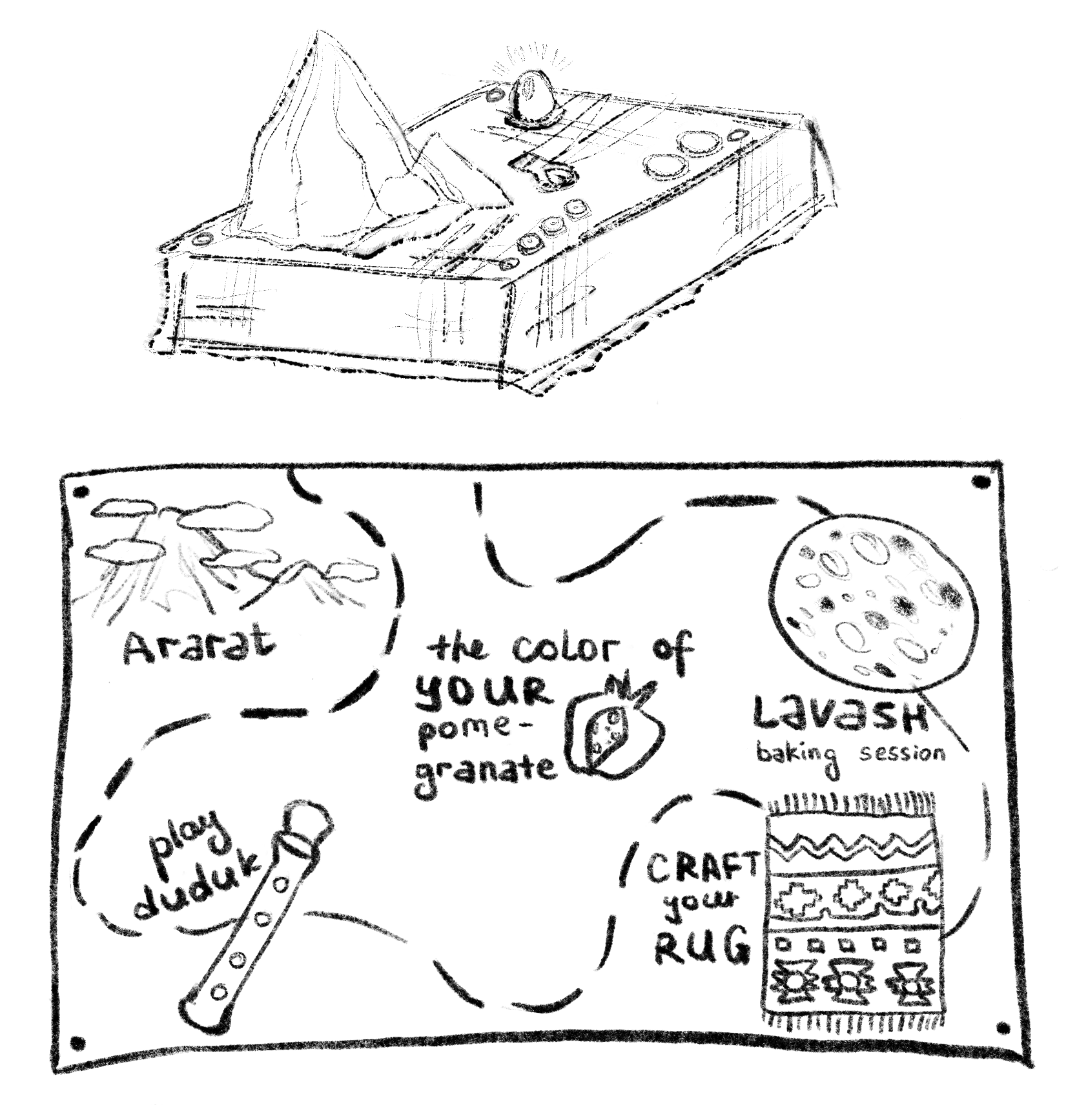

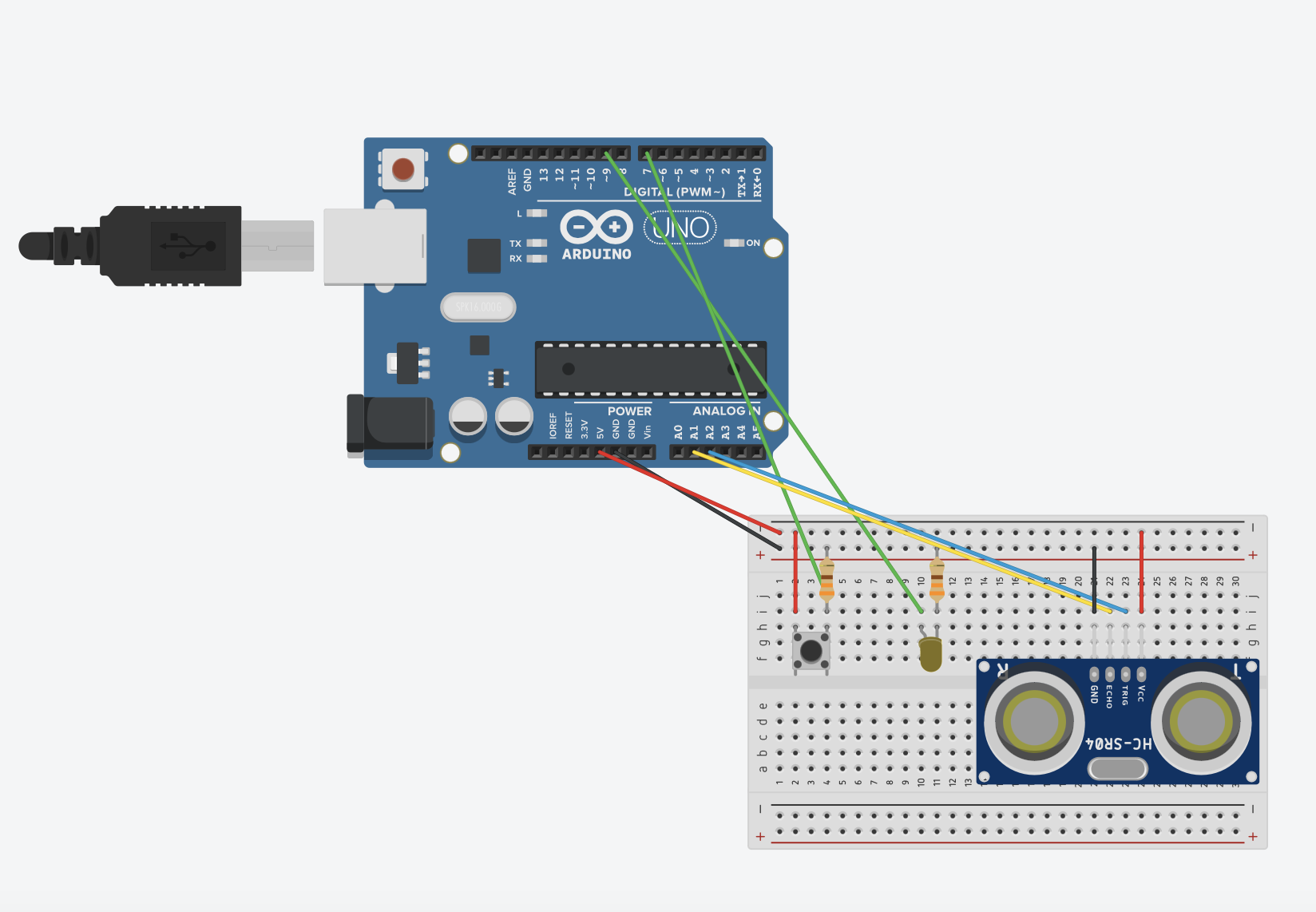

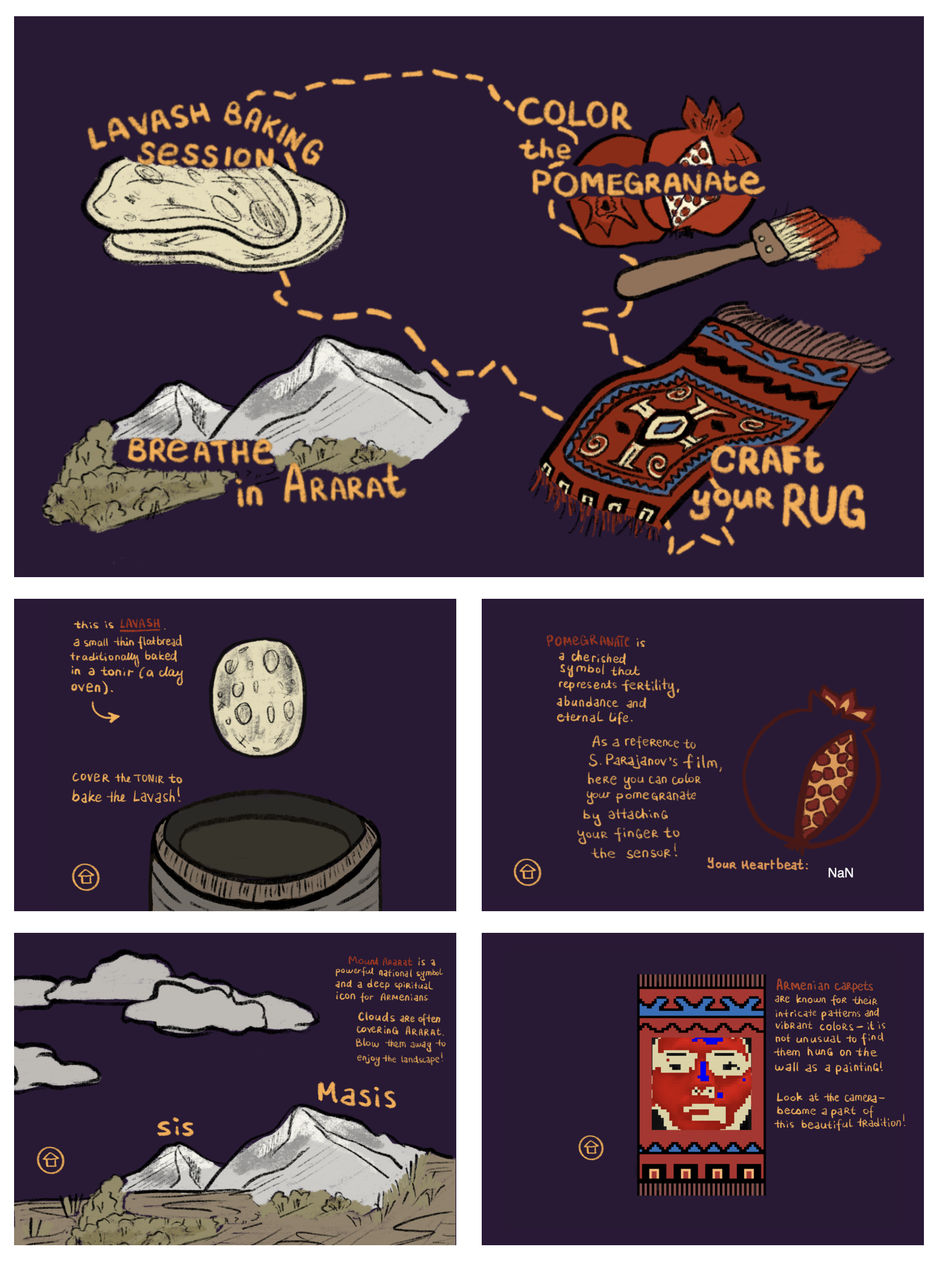

As I began working with p5.js and Arduino, I narrowed my project to four Armenian symbols: Mount Ararat, the pomegranate, the Armenian rug, and lavash. I removed the duduk (a musical instrument) from the project, as recreating it would have taken too much time, and the sounds I could produce wouldn’t have been close enough to the actual instrument. That left me with these four interactions:

- Lavash Baking Session:

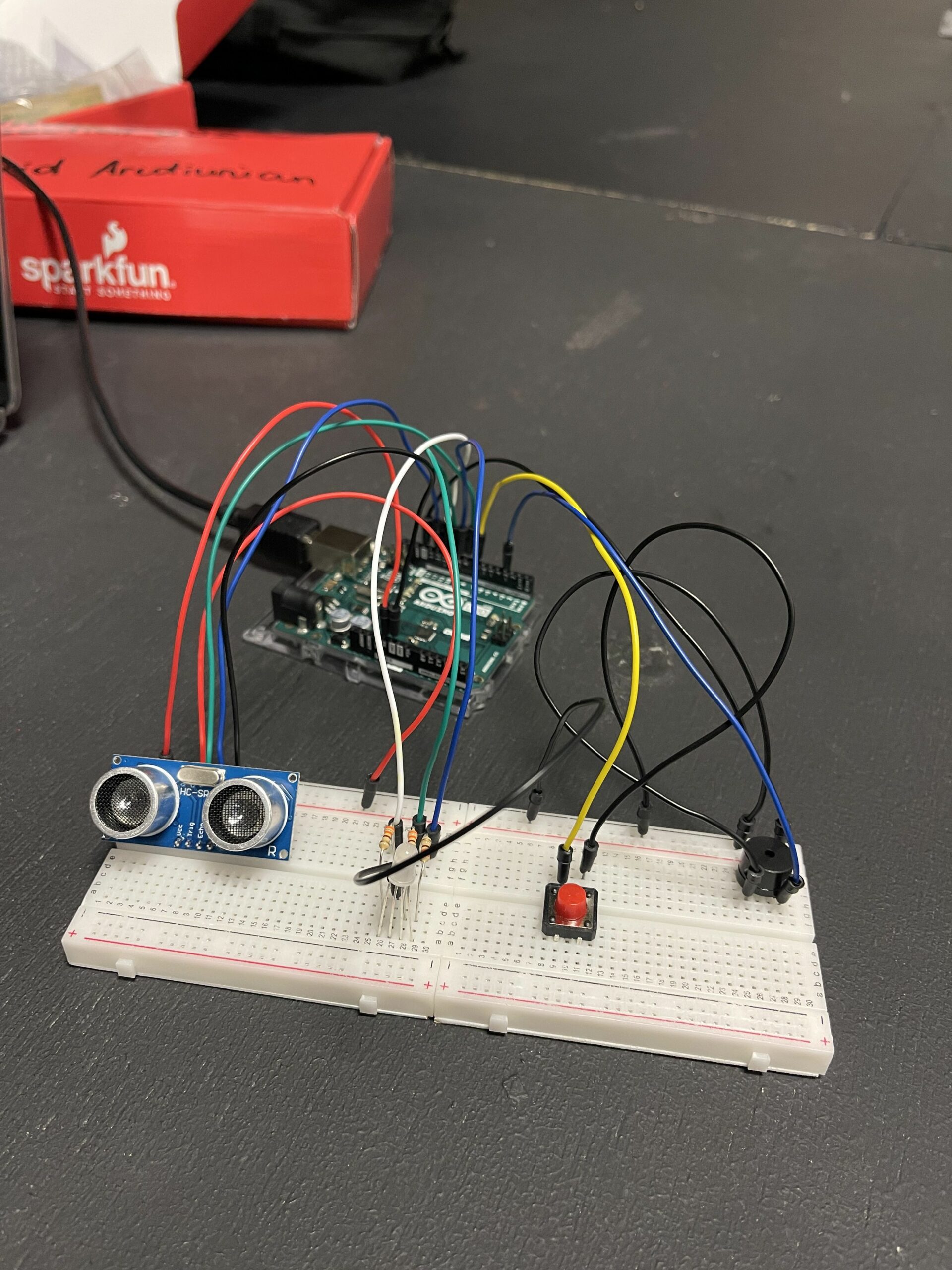

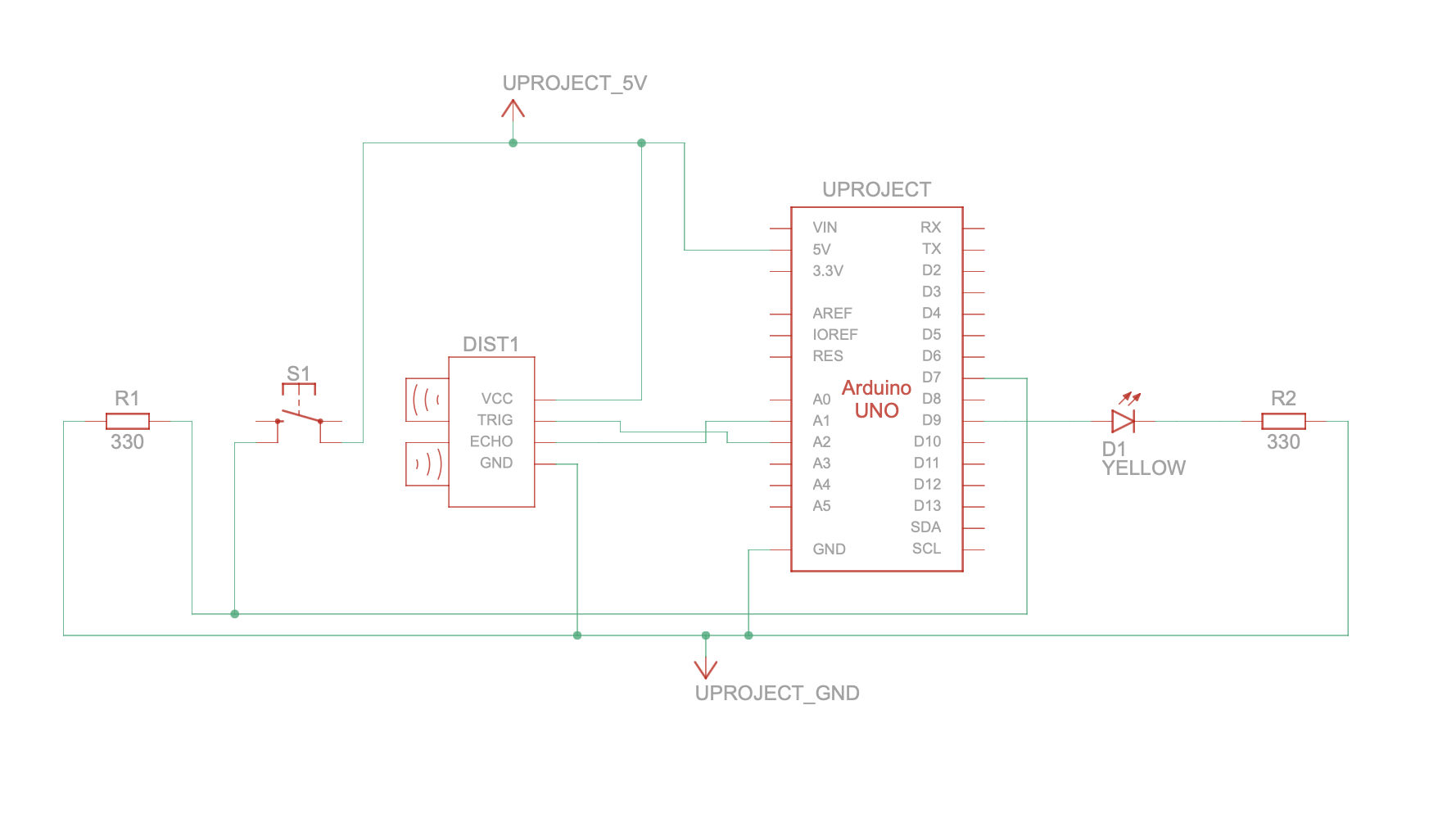

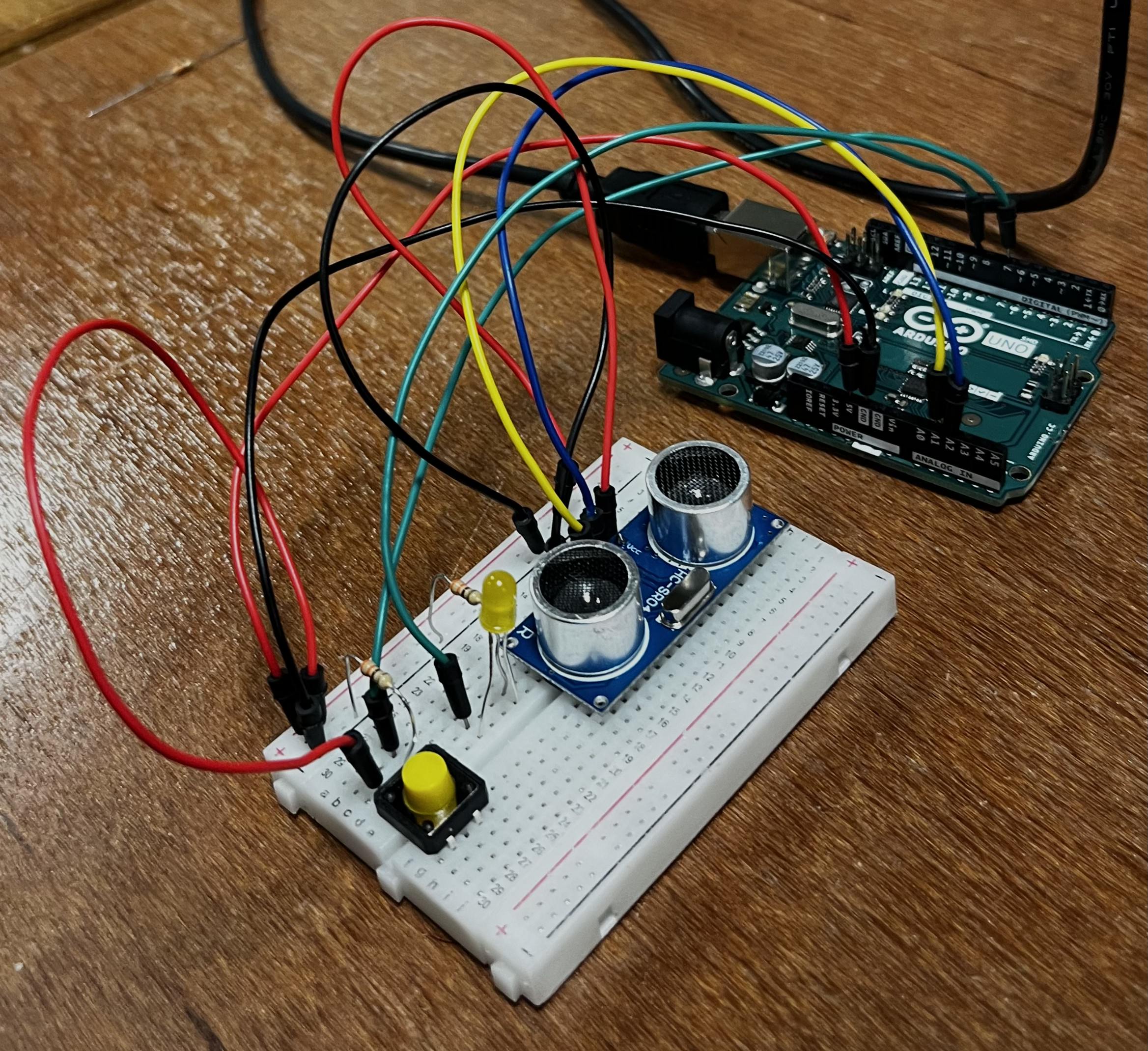

This interaction references Armenian flatbread (lavash) and imitates its baking process in a traditional clay oven (tonir). Users cover the 3D-printed oven with both hands to place the lavash inside. When they remove their hands, the lavash reappears on the p5 canvas. This interaction is based on the real-world operation of a tonir, which is typically set into the ground so that the lavash bakes beneath the surface. On the Arduino side, an LDR (light-dependent resistor) detects when the oven is covered and sends its reading to p5 over serial communication, where it controls the lavash’s position inside the oven. An LED also indicates when the lavash is being baked.
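As a rough illustration of how an LDR reading could drive the lavash, here is a minimal sketch in plain JavaScript that could be called from a p5 `draw()` loop. It assumes the Arduino sends one integer reading (0 to 1023) per serial line and that covering the sensor lowers the reading; the threshold value and all function names are hypothetical, not taken from the project code.

```javascript
// Hypothetical threshold; it would need calibrating for the room's lighting.
const DARK_THRESHOLD = 300;

// Parse one line of serial text (e.g. "287\r\n") into an integer reading.
// Returns null when the line does not start with a number.
function parseLdrLine(line) {
  const value = parseInt(line.trim(), 10);
  return Number.isNaN(value) ? null : value;
}

// Assumes a wiring in which covering the LDR lowers the reading.
function isOvenCovered(ldrValue, threshold = DARK_THRESHOLD) {
  return ldrValue < threshold;
}

// Move the lavash a fraction of the way toward its target each frame,
// so it slides into or out of the oven instead of jumping.
function nextLavashY(currentY, covered, insideY, outsideY, easing = 0.1) {
  const targetY = covered ? insideY : outsideY;
  return currentY + (targetY - currentY) * easing;
}
```

Calling `nextLavashY` once per frame with the latest `isOvenCovered` result would ease the lavash toward the oven while the hands are in place and back out once they are removed.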

- Breathing in Ararat:

This interaction centers on Mount Ararat, the iconic peak visible from much of Armenia. As many Armenians dream of seeing Ararat through the clouds, this interaction lets users blow away the clouds to reveal the mountain. Users are encouraged to blow into the 3D-printed mountain, and with a strong enough breath, the clouds on the p5 canvas move away, revealing the peak. I used a DHT22 sensor to detect humidity levels, which are sent to p5 to control the movement of the clouds.
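One way the humidity reading could be turned into cloud movement is sketched below. It assumes a baseline humidity recorded before the user blows and treats a rise of 10 percentage points as enough to clear the clouds completely; the function names, the rise, and the pixel offset are illustrative assumptions rather than the values used in the project.

```javascript
// Linear re-mapping with clamping, similar in spirit to p5's map()
// called with withinBounds set to true.
function mapClamped(value, inMin, inMax, outMin, outMax) {
  const t = Math.min(1, Math.max(0, (value - inMin) / (inMax - inMin)));
  return outMin + t * (outMax - outMin);
}

// Convert the rise in relative humidity above the baseline into a
// horizontal offset (in pixels) by which the clouds are pushed aside.
// fullRise and maxOffset are hypothetical calibration values.
function cloudOffset(humidity, baseline, fullRise = 10, maxOffset = 400) {
  return mapClamped(humidity - baseline, 0, fullRise, 0, maxOffset);
}
```

Because the output is clamped, a reading below the baseline leaves the clouds in place, and an unusually humid breath cannot push them past `maxOffset`.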

- Coloring the Pomegranate:

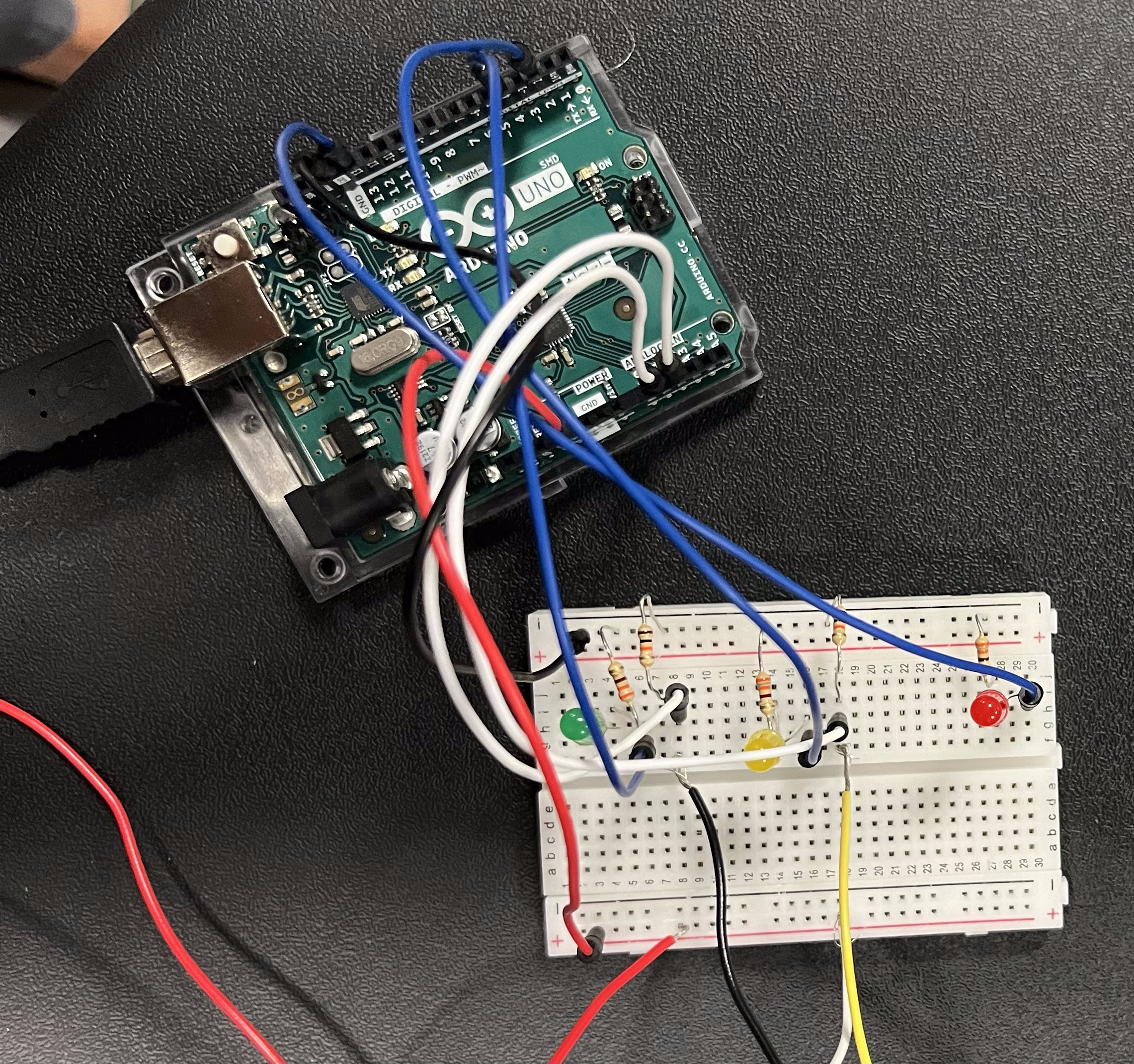

This interaction draws inspiration from Sergei Parajanov’s iconic film The Color of Pomegranates. To color the pomegranate, users place their finger on the fruit, where a pulse sensor detects their heartbeat. The pomegranate changes color based on the sensor’s readings: lower heart rates result in blue hues, while higher rates produce red tones. The Arduino reads the pulse sensor’s value and sends it to p5 over serial communication; p5 displays the value on the canvas and adjusts the pomegranate’s color accordingly.
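The blue-to-red mapping can be sketched as a linear blend between two colors. The version below is plain JavaScript and assumes the incoming value is already in beats per minute, with 60 and 120 chosen as hypothetical endpoints; in a p5 sketch the same effect could be achieved with `lerpColor()`.

```javascript
// Blend from blue (low heart rate) to red (high heart rate).
// minBpm and maxBpm are assumed endpoints, not values from the project.
function bpmToRgb(bpm, minBpm = 60, maxBpm = 120) {
  // Normalize to 0..1 and clamp so out-of-range readings stay valid.
  const t = Math.min(1, Math.max(0, (bpm - minBpm) / (maxBpm - minBpm)));
  const red = Math.round(255 * t);
  const blue = Math.round(255 * (1 - t));
  return [red, 0, blue];
}
```

The returned array can be spread into p5's `fill()`, for example `fill(...bpmToRgb(currentBpm))`, before drawing the pomegranate.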

- Crafting the Rug:

This interaction is entirely based in p5.js. Users can see themselves integrated into the pattern of an Armenian rug, typically displayed on walls for its aesthetic value. I used the following p5.js code as a reference to capture the pixelated camera feed, and by adjusting the RGB values of four main colors (blue, black, red, and beige), I created an image that blends with the rug pattern.
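One possible way to reduce a camera pixel to the four rug colors is nearest-color matching, sketched below. This is an assumed technique for illustration and may differ from how the project adjusts its RGB values; the palette entries are hypothetical approximations of blue, black, red, and beige.

```javascript
// Hypothetical RGB values for the four rug colors.
const RUG_PALETTE = [
  { name: "blue", rgb: [30, 60, 140] },
  { name: "black", rgb: [20, 20, 20] },
  { name: "red", rgb: [170, 30, 40] },
  { name: "beige", rgb: [225, 205, 170] },
];

// Return the palette entry closest to the given pixel, using squared
// Euclidean distance in RGB space.
function nearestRugColor(r, g, b, palette = RUG_PALETTE) {
  let best = palette[0];
  let bestDistance = Infinity;
  for (const entry of palette) {
    const [pr, pg, pb] = entry.rgb;
    const distance = (r - pr) ** 2 + (g - pg) ** 2 + (b - pb) ** 2;
    if (distance < bestDistance) {
      bestDistance = distance;
      best = entry;
    }
  }
  return best;
}
```

In a p5 sketch, this function could be applied to one sampled pixel per grid cell of the camera feed, filling each cell with the matched color to produce the pixelated, rug-like image.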

Arduino + p5

p5.js Code:

Reflection & Areas for Improvement

This project challenged me to work with various types of sensors. I encountered several issues with sensors I had never used before, such as the pulse sensor. Initially, it displayed three-digit values that bore no relation to an actual heartbeat. I eventually discovered that the sensor itself is not very reliable, so I implemented constraints to ensure the displayed values resembled a realistic heartbeat. Fortunately, this approach seemed to work during the IM showcase, as users received values around 90-100. Additionally, I had to constantly calibrate the LDR and DHT22 sensor values to suit the specific environment of the showcase.

I believe the visual aspects of the project could be further refined as well. Beyond that, the interactions could be timed and recorded in p5.js, allowing users to compare how quickly they could “blow the clouds” or “bake the lavash.” This would introduce an element of competition and encourage users to revisit the project.

Overall, I am proud of what I accomplished, particularly the variety of interactions I managed to implement within a constrained timeframe. I learned a great deal from this experience and thoroughly enjoyed engaging with users during the IM showcase – hearing their feedback about their favorite interactions was especially rewarding.