The main concept of “I See Sound” is to create an immersive experience that connects users with music by visualizing their favorite songs. Using two sensors, users can switch shapes and colors, which gives them a stylistic hand in the visualization. The aim is to place I See Sound in settings where upbeat music is played, such as concerts, music installations, and parties.

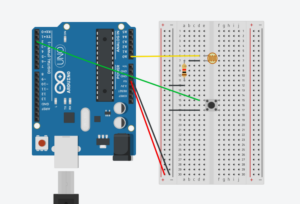

Arduino and p5.js scripts work together to create a dynamic audio-visual experience that responds to the music and to input from a photocell and a button. The Arduino script continuously monitors the environment through these two sensors. The photocell measures the light level. The Arduino reads this value, inverts it (1023 minus the reading), and sends it to p5.js over serial communication, one reading per line. The Arduino script also checks the state of the button: while it is pressed, it sends a specific command (“changeShape”) to the p5.js script. Together, these sensors act as the main communication medium between users and the visualization.
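
Because the Arduino prints one value per line, the p5.js side only has to tell two kinds of line apart: a number (the photocell reading) or the word “changeShape”. The sketch below shows that classification in plain JavaScript, without p5.js or a serial port, so it can be run in Node or a browser console. The function name `parseSerialLine` and the shape of the returned objects are illustrative assumptions, not part of the project code.

```javascript
// Hypothetical helper: turns one line received from the Arduino into a message object.
// Assumes each line is either a photocell reading (an integer 0-1023) or the word
// "changeShape", matching what the Arduino sketch prints with Serial.println().
function parseSerialLine(line) {
  const text = String(line).trim(); // println() ends each line with "\r\n"
  if (text === "changeShape") {
    return { type: "changeShape" };
  }
  const value = Number.parseInt(text, 10);
  if (Number.isNaN(value)) {
    return { type: "unknown", text };
  }
  // Clamp to the 10-bit range of analogRead()
  return { type: "light", value: Math.min(1023, Math.max(0, value)) };
}

// Example: a short stream of lines as the Arduino might send them
const lines = ["812\r\n", "805\r\n", "changeShape\r\n", "799\r\n"];
for (const line of lines) {
  console.log(parseSerialLine(line));
}
```

Checking for the command first and treating everything else as a possible number keeps the protocol easy to extend: a new command word only needs one more comparison.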

The Arduino sends data values, namely light levels and control commands, which the p5.js script reads and passes to different functions. The script responds to these inputs by altering the visual output: the photocell reading sets the brightness of the grayscale background, and pressing the button switches to the next shape (ellipse, rectangle, triangle, star, spiral). The loudness of the song, measured with p5.Amplitude, scales the stroke weight and the number of shapes. This demonstrates a real-time interaction between the user’s physical environment and the digital representation.
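
The two sensor-driven mappings can be tried out without any hardware. The sketch below re-implements p5.js’s `map()` as a hypothetical `mapRange()` helper (the same linear formula, without clamping) and reuses the wrap-around shape list. `lightToBackground` and `nextShape` are illustrative names, not functions from the project.

```javascript
// Stand-in for p5.js map(): linear rescaling from [start1, stop1] to [start2, stop2]
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + ((value - start1) * (stop2 - start2)) / (stop1 - start1);
}

// Photocell reading (0-1023) -> grayscale background value (0-255)
function lightToBackground(reading) {
  return mapRange(reading, 0, 1023, 0, 255);
}

const SHAPES = ["ellipse", "rectangle", "triangle", "star", "spiral"];

// Same wrap-around logic as changeShape(): each button press moves to the next shape
function nextShape(current) {
  const index = SHAPES.indexOf(current);
  return SHAPES[(index + 1) % SHAPES.length];
}

console.log(lightToBackground(0));    // 0
console.log(lightToBackground(1023)); // 255

let shape = "ellipse";
for (let press = 0; press < 6; press++) {
  shape = nextShape(shape);
  console.log(shape);
}
```

Because the index wraps with the modulo operator, the fifth press brings the cycle back to the ellipse.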

Arduino Code:

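```cpp
const int photocellPin = A0;  // photocell voltage divider on analog pin A0
const int buttonPin = 2;      // push button on digital pin 2 (reads HIGH when pressed,
                              // e.g. when wired with a pull-down resistor)

int photocellReading = 0;
int buttonState = LOW;

void setup() {
  Serial.begin(9600);
  pinMode(buttonPin, INPUT);
}

void loop() {
  // Read the light level (0-1023) and invert the scale
  photocellReading = analogRead(photocellPin);
  photocellReading = 1023 - photocellReading;

  // Send the reading to p5.js as one line of text
  Serial.println(photocellReading);

  // While the button is held down, send the shape-change command
  buttonState = digitalRead(buttonPin);
  if (buttonState == HIGH) {
    Serial.println("changeShape");
    delay(200);  // crude debounce so one press does not flood the serial port
  }

  delay(100);
}
```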
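
As written, the Arduino sends “changeShape” on every pass through `loop()` while the button is held, so a long press can skip through several shapes. A common alternative is to send the command only on the transition from LOW to HIGH (edge detection). The logic is sketched below in JavaScript so it can be tried without hardware; `makeEdgeDetector` is a hypothetical name, and on the Arduino the same idea would keep the previous button state in a global variable.

```javascript
// Hypothetical helper: returns true only when the button goes from released (0) to pressed (1)
function makeEdgeDetector() {
  let lastState = 0; // corresponds to LOW
  return function update(currentState) {
    const pressedNow = currentState === 1 && lastState === 0;
    lastState = currentState;
    return pressedNow;
  };
}

// Simulated digitalRead() samples, one per loop() pass: a short press, then a long press
const samples = [0, 1, 0, 0, 1, 1, 1, 1, 0];
const isNewPress = makeEdgeDetector();
let commandsSent = 0;
for (const sample of samples) {
  if (isNewPress(sample)) {
    commandsSent++; // on the Arduino: Serial.println("changeShape");
  }
}
console.log(commandsSent); // 2
```

The held-button check in the sketch above would send five commands for the same samples. Mechanical bounce can still produce extra edges, so a short delay after a detected press remains useful.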

p5.js Code:

```javascript
let sound, amplitude;
let startScreenImage;
let currentShape = 'ellipse';
let currentState = 'startScreen';
let photocellData = 0; // latest photocell reading from the Arduino (0-1023)

function preload() {
  sound = loadSound('sounds/aroundme.mp3');
  startScreenImage = loadImage('P5 DJ.gif');
}

function setup() {
  createCanvas(700, 600);
  amplitude = new p5.Amplitude();
  noiseSeed(millis());
}

function draw() {
  if (currentState == 'startScreen') {
    displayStartScreen();
  } else if (currentState == 'running') {
    runVisualization();
  }
}

function displayStartScreen() {
  background(startScreenImage);
}

function runVisualization() {
  // Loudness of the song right now, between 0.0 and 1.0
  let level = amplitude.getLevel();

  photocellBackground();

  // Louder music draws more shapes (15 to 30)
  let numShapes = int(map(level, 0, 1, 15, 30));

  for (let i = 0; i < numShapes; i++) {
    // Place the shapes around a circle whose radius wobbles with Perlin noise
    let angleOffset = TWO_PI / numShapes * i;
    let x = width / 2 + 4 * (sin(frameCount * 0.02 + angleOffset) * 100 * noise(0.001 * frameCount + i));
    let y = height / 2 + 4 * (cos(frameCount * 0.02 + angleOffset) * 100 * noise(0.001 * frameCount + 100 + i));

    // Each shape pulses between 10 and 100 pixels
    let size1 = map(sin(frameCount * 0.1 + angleOffset), -1, 1, 10, 100);

    // Semi-transparent fill color derived from noise
    let myColor = color(255 * noise(i), 255 * noise(i + 10), 255 * noise(i + 20), 200);
    fill(myColor);

    // Outline color picked at random every frame; louder music gives thicker outlines
    let colors = ['red', 'blue', 'green', 'purple', 'maroon'];
    let chosenColor = random(colors);
    stroke(chosenColor);
    strokeWeight(map(level, 0, 1, 10, 100));

    switch (currentShape) {
      case 'ellipse':
        ellipse(x, y, size1, size1);
        break;
      case 'rectangle':
        rect(x, y, size1, size1);
        break;
      case 'triangle':
        triangle(x - size1 * 0.4, y + size1 * 0.4, x, y - size1 * 0.4, x + size1, y + size1 * 0.4);
        break;
      case 'star':
        drawStar(x, y, 5, size1 * 0.8, size1 * 0.4);
        break;
      case 'spiral':
        drawSpiral(x, y, size1 * 0.8);
        break;
    }
  }
}

// Map the photocell reading (0-1023) to a grayscale background (0-255)
function photocellBackground() {
  background(map(photocellData, 0, 1023, 0, 255));
}

function togglePlay() {
  if (sound.isPlaying()) {
    sound.pause();
  } else {
    sound.loop();
    amplitude.setInput(sound);
  }
}

// Cycle to the next shape in the list, wrapping around at the end
function changeShape() {
  const shapes = ['ellipse', 'rectangle', 'triangle', 'star', 'spiral'];
  let index = shapes.indexOf(currentShape);
  currentShape = shapes[(index + 1) % shapes.length];
}

// Star with `points` tips, alternating between the inner radius2 and the outer radius1
function drawStar(x, y, points, radius1, radius2) {
  let angle = TWO_PI / points;
  let halfAngle = angle / 2.0;
  beginShape();
  for (let a = 0; a < TWO_PI; a += angle) {
    let sx = x + cos(a) * radius2;
    let sy = y + sin(a) * radius2;
    vertex(sx, sy);
    sx = x + cos(a + halfAngle) * radius1;
    sy = y + sin(a + halfAngle) * radius1;
    vertex(sx, sy);
  }
  endShape(CLOSE);
}

// Archimedean spiral: the radius grows a little with every step around the center
function drawSpiral(x, y, maxRadius) {
  let angle = 0;
  let endRadius = 0;
  beginShape();
  while (endRadius < maxRadius) {
    let sx = x + cos(angle) * endRadius;
    let sy = y + sin(angle) * endRadius;
    vertex(sx, sy);
    angle += 0.1;
    endRadius += 0.5;
  }
  endShape();
}

// Press Space on the start screen to choose a serial port and start.
// setUpSerial(), serialActive, and writeSerial() are expected to come from a
// separate serial helper script loaded with this sketch (not shown here).
function keyPressed() {
  if (key == " " && currentState == 'startScreen') {
    setUpSerial();
    waitForSerial();
  }
}

// Poll until the serial port is open, then start the visualization and the music
function waitForSerial() {
  if (serialActive) {
    currentState = 'running';
    togglePlay();
  } else {
    console.log("Waiting for serial connection... Press Space to connect.");
    setTimeout(waitForSerial, 10);
  }
}

////////////////////////////////////
// READ FROM ARDUINO HERE
////////////////////////////////////
function readSerial(data) {
  if (data != null) {
    let fromArduino = data.trim();
    if (fromArduino.length >= 1) {
      console.log(fromArduino); // print data for debugging

      if (fromArduino === "changeShape") {
        changeShape(); // change the shape when the button command is received
      } else {
        // Every other line is a photocell reading
        let reading = int(fromArduino);
        if (!isNaN(reading)) {
          photocellData = reading; // used by photocellBackground() on the next frame
        }
      }
    }

    //////////////////////////////////
    // SEND TO ARDUINO HERE (handshake)
    //////////////////////////////////
    let sendToArduino = -1;
    writeSerial(sendToArduino);
  }
}
```
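
The music-driven part of the visualization can be checked the same way. `p5.Amplitude`’s `getLevel()` returns a loudness value between 0.0 and 1.0. The sketch below uses a hypothetical `mapRange()` stand-in for p5’s `map()` and `Math.trunc` in place of p5’s `int()`, and shows how that value could set the number of shapes (15 to 30), the stroke weight (10 to 100 pixels), and the evenly spaced angles the shapes start from. All helper names are illustrative.

```javascript
// Stand-in for p5.js map(): linear rescaling from [start1, stop1] to [start2, stop2]
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + ((value - start1) * (stop2 - start2)) / (stop1 - start1);
}

// Loudness (0.0-1.0) -> number of shapes this frame, truncated to a whole number
function shapeCount(level) {
  return Math.trunc(mapRange(level, 0, 1, 15, 30));
}

// Loudness -> outline thickness in pixels
function strokeForLevel(level) {
  return mapRange(level, 0, 1, 10, 100);
}

// Evenly spaced starting angles, like angleOffset = TWO_PI / numShapes * i
function ringAngles(count) {
  return Array.from({ length: count }, (_, i) => ((2 * Math.PI) / count) * i);
}

for (const level of [0, 0.2, 0.5, 1]) {
  const n = shapeCount(level);
  console.log(`level ${level}: ${n} shapes, stroke ${strokeForLevel(level)} px, ${ringAngles(n).length} angles`);
}
```

Since typical song levels stay well below 1.0, the input range passed to `map()` determines how visibly the shape count reacts to the music.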

Overall, I am honestly very proud of myself because I really did try my best: as a beginner, I found it hard to even wrap my head around most of the material. At some points in the process I did not feel confident, but I sought out different resources such as Berney Codes, asked some of my classmates for help (thank you, Sarah), and used ChatGPT to help me understand serial communication, fix errors in my code, and create the shapes. In the future, I would like to work more on the interaction and on the stylistic choices. I adapted this project from p5.Amplitude, part of the p5.sound library.