Project Concept & Work in Progress

Introduction:

The final project is turning out to be great! The concept combines the Cornhole game, as mentioned earlier, with a gamified message about putting your stuff back in its place. Seeing the IM lab assistants go around cleaning up after the students made me wonder what could get that message across while being both interactive and interesting: something that makes people keep coming back to the project.

Project Development:

The first step was the hardware. The idea is to incorporate a release mechanism alongside a barrel. To construct the barrel, an unused, sturdy cardboard tube was salvaged from the IM lab and cut in two with a saw, each piece being approximately 44 cm long and 50 mm in diameter.

Then a broken PVC pipe, originally part of a discarded vacuum cleaner, was used in order to recycle a discarded item. It was clamped and then cut to fit the size of the barrel.

A slit (cavity) was then made in the pipe-like structure for the release mechanism to latch into.

The original idea was to shoot ping-pong balls. Since the pipe was hollow, it was filled with some plastic trash and broken items to give it mass. The basic principle of conservation of momentum was to be applied.

M1 × V1 = M2 × V2, with 1 indicating the pipe and 2 indicating the ping-pong ball. Hence the ball will have a greater speed to make up for its smaller mass.
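
To make the momentum argument concrete, here is a minimal sketch in JavaScript (the language of the P5 side). It assumes an idealized case in which the pipe hands all of its momentum to the ball. The pipe mass and speed are hypothetical placeholders, not measurements from this build; only the 2.7 g mass of a standard ping-pong ball is a real figure.

```javascript
// Idealized momentum transfer: m1 * v1 = m2 * v2, solved for v2.
// Assumes the pipe passes ALL of its momentum to the ball (no losses).
function ballSpeed(pipeMassKg, pipeSpeedMs, ballMassKg) {
  if (ballMassKg <= 0) {
    throw new RangeError("ball mass must be positive");
  }
  return (pipeMassKg * pipeSpeedMs) / ballMassKg;
}

// Hypothetical numbers for illustration only.
const pipeMassKg = 0.27;   // assumed mass of the filled pipe
const pipeSpeedMs = 1.0;   // assumed pipe speed at release
const ballMassKg = 0.0027; // standard ping-pong ball, 2.7 g

const v2 = ballSpeed(pipeMassKg, pipeSpeedMs, ballMassKg);
console.log(`ball speed: ${v2.toFixed(1)} m/s`);
```

The point of the sketch is the ratio: a pipe 100 times heavier than the ball gives, in this ideal model, a ball 100 times faster than the pipe. Friction and an imperfect impact would make the real speed lower.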

Next, a discarded utensil, a spoon, was used. With it clamped into place, I used a saw to cut it in half. One of the halves was to be used as the release/lock mechanism for the launcher.

Next, for the tension, instead of just a handful of rubber bands, in the spirit of reuse and recycling, I extracted the elastic from face masks.

Next, pilot holes were drilled using a drill with a larger drill bit. M4 screws with washers and nuts were added to hold the rubber bands in place. A small gap was kept between the washer and the nut so the elastic could be hooked between them, and also to keep the tail end of the screw from obstructing the PVC pipe.

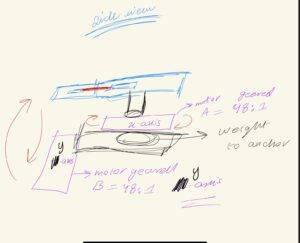

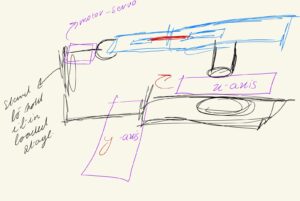

Since the weight of the whole assembly only kept increasing, the more efficient choice was to avoid motorized movement in the y-axis and instead rely on the vertical acceleration of the launch and the resulting projectile motion.

Last but not least, wooden pieces from the IM lab were used alongside wheels and a 48:1 geared motor to turn the launcher horizontally.

The final assembled project looks something like this:

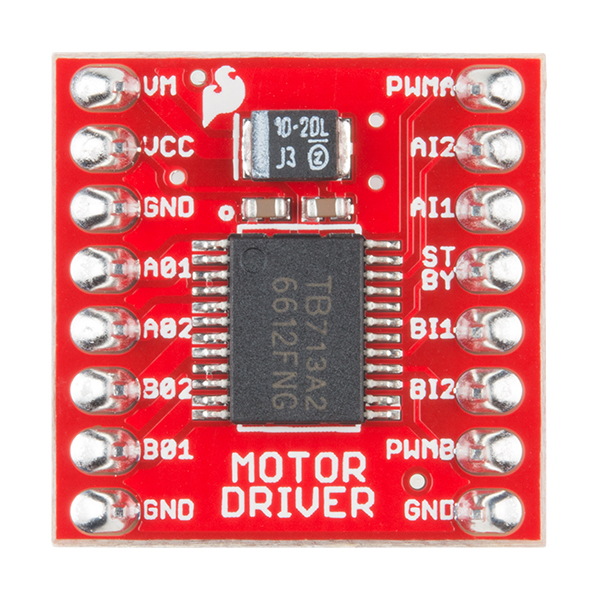

A motor driver was added to power the geared motor and regulate the voltage it receives, under the control of the Arduino.
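
As a rough illustration of how the driver interprets its inputs, the sketch below models one channel of a TB6612FNG-style driver in JavaScript. The mapping reflects my reading of that driver's control table and should be checked against the datasheet; the function name `motorAction` is hypothetical, and whether "clockwise" means left or right depends on how the motor is wired.

```javascript
// Describe what one driver channel does for a given set of inputs.
// in1/in2 are booleans (HIGH = true); pwm is the 0-255 duty value.
// Assumption: behaviour follows the TB6612FNG control table.
function motorAction(in1, in2, pwm) {
  if (in1 && in2) return "brake";   // both HIGH: short brake
  if (!in1 && !in2) return "stop";  // both LOW: outputs off, motor coasts
  if (pwm === 0) return "brake";    // direction selected but no drive
  return in1 ? "clockwise" : "counterclockwise";
}

// The three input combinations the Arduino code uses for the motor.
console.log(motorAction(false, true, 255)); // green button held
console.log(motorAction(true, false, 255)); // red button held
console.log(motorAction(false, false, 0));  // no button held
```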

Arduino and P5 Code:

Arduino:

The Arduino code starts by mapping out the pins. The motor driver receives its PWM signal from pin 5 (pwmPin), while pins 3 and 4 (ain1Pin and ain2Pin) set the direction.

    // Motor driver pins: two direction inputs and one PWM (speed) input
    const int ain1Pin = 3;
    const int ain2Pin = 4;
    const int pwmPin = 5;

    int score = 0;

    // Buttons and the score trigger are read as analog inputs
    const int startButton = A0;
    const int greenButton = A1;
    const int redButton = A3;
    const int trigger = A5;

    // Status LEDs
    const int greenLED = 12;
    const int redLED = 8;
    const int blueLED = 7;

    int trigVal = 0;
    const int threshold = 400;

    enum GameState { MENU, GAMEPLAY, PAUSED };
    GameState gameState = MENU;

    void setup() {
      Serial.begin(9600);

      pinMode(ain1Pin, OUTPUT);
      pinMode(ain2Pin, OUTPUT);
      pinMode(pwmPin, OUTPUT);
      pinMode(greenLED, OUTPUT);
      pinMode(redLED, OUTPUT);
      pinMode(blueLED, OUTPUT);

      // Red LED on: waiting in the menu
      digitalWrite(redLED, HIGH);
      digitalWrite(greenLED, LOW);
      digitalWrite(blueLED, LOW);
      delay(500);
    }

    void loop() {
      int startVal = analogRead(startButton);
      int greenVal = analogRead(greenButton);
      int redVal = analogRead(redButton);
      trigVal = analogRead(trigger);

      // Previous button states, kept between loop() calls for edge detection
      static bool lastStartPressed = false;
      static bool lastTriggerPressed = false;

      bool startPressed = startVal > threshold;
      bool triggerPressed = trigVal > 1020;

      // Serial communication from p5.js
      if (Serial.available() > 0) {
        String message = Serial.readStringUntil('\n');
        message.trim();

        if (message == "HELLO") {
          Serial.println("ACKNOWLEDGED");
        } else if (message == "RESET") {
          gameState = MENU;
          digitalWrite(redLED, HIGH);
          digitalWrite(greenLED, LOW);
          digitalWrite(blueLED, LOW);
          digitalWrite(ain1Pin, LOW);
          digitalWrite(ain2Pin, LOW);
          analogWrite(pwmPin, 0);
        } else if (message == "Game over") {
          // Optional cleanup
        }
      }

      // Handle start button press (acts only on the rising edge)
      if (startPressed && !lastStartPressed) {
        if (gameState == MENU) {
          Serial.println("Starting game!");
          gameState = GAMEPLAY;
          score = 0;  // start each game from zero so old points do not carry over
          digitalWrite(redLED, LOW);
          digitalWrite(greenLED, HIGH);
          digitalWrite(blueLED, LOW);
          digitalWrite(ain1Pin, LOW);
          digitalWrite(ain2Pin, LOW);
          analogWrite(pwmPin, 0);
        } else if (gameState == GAMEPLAY) {
          Serial.println("Game paused!");
          gameState = PAUSED;
          digitalWrite(greenLED, LOW);
          digitalWrite(blueLED, HIGH);
          // Both direction inputs HIGH so the motor is held while paused
          digitalWrite(ain1Pin, HIGH);
          digitalWrite(ain2Pin, HIGH);
          analogWrite(pwmPin, 255);
        } else if (gameState == PAUSED) {
          Serial.println("Resuming game!");
          gameState = GAMEPLAY;
          digitalWrite(greenLED, HIGH);
          digitalWrite(blueLED, LOW);
          digitalWrite(ain1Pin, LOW);
          digitalWrite(ain2Pin, LOW);
          analogWrite(pwmPin, 0);
        }
      }
      lastStartPressed = startPressed;

      // Score trigger: rising edge detection
      if (gameState == GAMEPLAY && triggerPressed && !lastTriggerPressed) {
        score++;
        Serial.print("SCORE:");
        Serial.println(score);
      }
      lastTriggerPressed = triggerPressed;

      // Motor control during gameplay
      if (gameState == GAMEPLAY) {
        if (greenVal > threshold) {
          Serial.println("Green button pressed - motor right");
          digitalWrite(ain1Pin, LOW);
          digitalWrite(ain2Pin, HIGH);
          analogWrite(pwmPin, 255);
        } else if (redVal > threshold) {
          Serial.println("Red button pressed - motor left");
          digitalWrite(ain1Pin, HIGH);
          digitalWrite(ain2Pin, LOW);
          analogWrite(pwmPin, 255);
        } else {
          // No button held: stop the motor
          digitalWrite(ain1Pin, LOW);
          digitalWrite(ain2Pin, LOW);
          analogWrite(pwmPin, 0);
        }
      }
    }
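
The two ideas the Arduino loop leans on, rising-edge detection and a small state machine driven by the start button, can be tried out away from the hardware. The following is a simplified JavaScript model, not the Arduino code itself: `makeRisingEdgeDetector`, `simulate`, and the sample readings are hypothetical names and values chosen for illustration, and only the start button is modelled.

```javascript
// Next state when the start button is pressed once.
const NEXT_STATE = { MENU: "GAMEPLAY", GAMEPLAY: "PAUSED", PAUSED: "GAMEPLAY" };

// Returns a function that reports true only on the sample where the
// input goes from "not pressed" to "pressed" (a rising edge).
function makeRisingEdgeDetector() {
  let last = false;
  return function (pressed) {
    const rising = pressed && !last;
    last = pressed;
    return rising;
  };
}

// Replay a list of analog readings through the detector and state table.
function simulate(readings, threshold = 400) {
  const startEdge = makeRisingEdgeDetector();
  let state = "MENU";
  const history = [];
  for (const value of readings) {
    if (startEdge(value > threshold)) {
      state = NEXT_STATE[state];
    }
    history.push(state);
  }
  return history;
}

// A held button (two 900s in a row) should change the state only once.
console.log(simulate([0, 900, 900, 0, 900, 0, 900]));
```

Without the edge detector, holding the start button would flip between states on every pass through the loop.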

P5:

    let rVal = 0;
    let alpha = 255;
    let gameState = 'MENU'; // 'MENU', 'GAMEPLAY', 'RESULT', 'WAITING_FOR_START'
    let counter = 60;
    let points = 0;
    let score = 0;
    let left = 0;
    let right = 0;
    let resultTimer = 0;

    function setup() {
      createCanvas(640, 480);
      textAlign(CENTER, CENTER);
      textSize(24);
    }

    function draw() {
      background(map(rVal, 0, 1023, 0, 255), 255, 255);
      fill(255, 0, 255, map(alpha, 0, 1023, 0, 255));
      // text("Game State: " + gameState, 20, 30);

      if (gameState === 'MENU') {
        resultTimer = 0;
        showMenuScreen();
      } else if (gameState === 'WAITING_FOR_START') {
        showWaitingScreen();
      } else if (gameState === 'GAMEPLAY') {
        showGameScreen();
      } else if (gameState === 'RESULT') {
        showResultScreen();
        resultTimer++;
        if (resultTimer === 180) { // ~3 seconds
          writeSerial("RESET\n");
          gameState = 'MENU';
        }
      }

      if (mouseIsPressed) {
        if (mouseX <= width / 2) {
          left = 1;
        } else {
          right = 1;
        }
      } else {
        left = right = 0;
      }
    }

    function readSerial(data) {
      data = trim(data);

      // Start game and reset counter and points
      if (data === "Starting game!") {
        counter = 60;
        points = 0;
        gameState = 'GAMEPLAY';
        return;
      }

      // Check if the data contains the score information
      if (data.startsWith("SCORE:")) {
        points = int(data.split(":")[1]); // Extract score from Arduino message
        return;
      }

      // Process rVal and alpha from Arduino (existing logic)
      let fromArduino = split(data, ",");
      if (fromArduino.length === 2) {
        rVal = int(fromArduino[0]);
        alpha = int(fromArduino[1]);
      }

      // Send button press information back to Arduino
      let sendToArduino = left + "," + right + "\n";
      writeSerial(sendToArduino);
    }

    function showMenuScreen() {
      fill(0);
      textSize(32);
      text("Project Clean Shot", width / 2, height / 2 - 50);
      textSize(24);
      text("Press Space Bar to Start Communication", width / 2, height / 2);
    }

    function showWaitingScreen() {
      fill(0);
      textSize(28);
      text("Waiting for Arduino to start the game...", width / 2, height / 2);
    }

    function showGameScreen() {
      fill(0);
      textSize(64);
      text(counter, width / 2, height / 2 - 50);
      textSize(32);
      text("Points: " + points , width / 2, height / 2 + 50);

      if (frameCount % 60 === 0 && counter > 0) {
        counter--;
      }

      if (counter <= 0) {
        gameState = 'RESULT';
        writeSerial("Game over\n");
      }
    }

    function showResultScreen() {
      fill(0);
      textSize(48);
      text("Game Over", width / 2, height / 2 - 50);
      textSize(32);
      text("Points: " + points, width / 2, height / 2);
      textSize(24);
      text("Returning to Menu...", width / 2, height / 2 + 50);
    }

    function keyPressed() {
      if (key === " " && gameState === 'MENU') {
        setUpSerial(); // comes from the serial adapter file
        gameState = 'WAITING_FOR_START';
      }
    }

P5 Demo link: https://editor.p5js.org/alinoor_3707/sketches/QbmSLt2kR
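
The countdown in the sketch ticks once every 60 frames, which matches one second only while the sketch holds 60 frames per second. As a possible alternative, not what the project uses, the remaining time could be derived from elapsed milliseconds. The helper `secondsLeft` and the variable `gameStartMs` mentioned below are hypothetical names.

```javascript
// Seconds remaining, computed from elapsed time rather than frame count.
function secondsLeft(startMs, nowMs, durationS = 60) {
  const elapsedS = Math.floor((nowMs - startMs) / 1000);
  return Math.max(0, durationS - elapsedS);
}

// Example with made-up timestamps: 1.5 s after the start of a 60 s round.
console.log(secondsLeft(0, 1500));
```

In the sketch this might be used as `counter = secondsLeft(gameStartMs, millis())`, with `gameStartMs` recorded when "Starting game!" arrives; `millis()` is p5's built-in elapsed-time function.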

P5 and Arduino communication:

P5 and Arduino communicate with each other over a serial connection via USB. In our case, the Arduino measures physical values and sends them out as text, for instance with ‘Serial.println’. This is then read by P5js using the Web Serial API. Since I couldn’t attend the earlier class where this was discussed, I had a hard time understanding how it works, and ended up venturing into the NodeJS-based p5Serial software after watching an outdated tutorial online. It was only after close-reading the documentation that I realized there is a web-based serial communication interface as well. In the end, the data was read using p5.web-serial. Hence, the Arduino writes and the P5 program listens. It also works the other way around, however: when the timer ends, P5 tells the Arduino to change its state from gameplay to menu.
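
To show the shape of this text protocol, here is a small JavaScript sketch that turns one line from the Arduino into an event object. The message strings are the ones the Arduino code prints; the function name `parseArduinoLine` and the event object layout are hypothetical and only meant as an illustration.

```javascript
// Turn one line of text from the Arduino into a small event object.
function parseArduinoLine(line) {
  const text = line.trim();
  if (text === "Starting game!") return { type: "start" };
  if (text === "Game paused!") return { type: "pause" };
  if (text === "Resuming game!") return { type: "resume" };
  if (text.startsWith("SCORE:")) {
    const value = Number.parseInt(text.slice("SCORE:".length), 10);
    return Number.isNaN(value)
      ? { type: "unknown", text }
      : { type: "score", value };
  }
  // Anything else (for example the motor debug messages) is passed through.
  return { type: "unknown", text };
}

console.log(parseArduinoLine("SCORE:3\r\n"));
console.log(parseArduinoLine("Starting game!"));
```

Keeping every message on its own line, terminated by a newline, is what lets both sides split the stream reliably: the Arduino reads up to '\n' and P5 receives one trimmed line at a time.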

Description of interactivity:

The main focus was the hardware. About 30 percent of the time was dedicated to P5-based interaction, and most of the rest went to the Arduino and the mechanism behind the rotation and launch of the barrel. The idea was to build a tangible, sturdy, and responsive structure. Borrowing an idea from one of the readings, A Brief Rant on the Future of Interaction Design, I wanted to build something that was not hidden underneath a glass screen, as the author described it, but was tactile and tangible in nature.

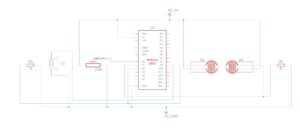

Circuit schematic:

For the circuitry, since a motor was incorporated to handle the horizontal movement, this time I referred to the tutorial notes provided by Professor Shiloh alongside the sketch for the schematic:

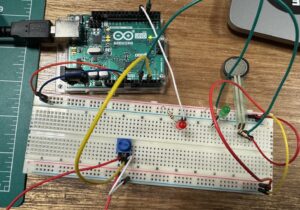

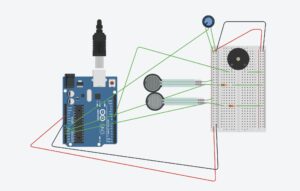

After mapping out and implementing this sketch, the next matter at hand was the Buttons and LEDs, which were implemented after first drafting a sketch:

NOTE: Since the SparkFun TB6612FNG motor driver wasn’t available in the drawing tool, an alternative has been used to represent the pin mapping of the one actually used.

The sketch diagram:

Aspect I am proud of:

The part I enjoyed most, and am happiest to have completed, was the crafting and hacking. There was a temptation to tinker with the new Prusa 3D printers in the IM lab; however, in the spirit of craftsmanship and getting my hands dirty, I decided to drill, saw, cut, glue, sand, break, and do all sorts of wonderful things. It was a crash course, but I am now much more confident in my ability to build things myself instead of ordering them from third-party contractors. This makes me immensely proud of myself and of the thing I built with my own hands, no matter the imperfections.

Areas for future improvement:

One area that I did work on, but that didn’t end up working, was the latch mechanism for triggering the release of the barrel after it had been loaded. It popped off due to the high tension in the rubber bands/elastic and the weak structural integrity of the launcher. In the future I would like to work with techniques that help avoid loose fittings and parts breaking off.

Moreover, I wanted to add haptics and a gyroscope; however, having limited experience with the sensor and being low on time, I decided to go with tactile buttons. In the future I would like to work on a responsive, hand-gesture-controlled mechanism, which also works in the y-axis with an additional motor!

The sketch for the wiring and connections.

Schematic for the Arduino connection and port mapping.