Introduction:

For this midterm project, I wanted to design something different. Something unique. Something meaningful to me, in a way that lets me represent my own perspective on interactivity and design. Most of the games I played growing up were AAA titles (top-notch, graphics-intensive games) on my PSP (PlayStation Portable).

Be it Grand Theft Auto or God of War, I have played them all. However, if there is one game I never got a chance to play on my PSP, because it was never released for that platform, it was Beyblade V-Force! It was available on older Nintendo platforms and other previous-generation devices, but not on the newer PSP that I owned. To this day, I love that cartoon series. Not only was I (and am I still) a loyal fan of the show, but I have also collected most of the toy models from it.

Brainstorming ideas + User interaction and game design:

This project started with me wondering what one thing was dearest to me. After spending an hour shortlisting topics I was interested in, I ended up with nothing: not because I couldn't think of any, but because I couldn't settle on a single game. I started this project with a broken hand. My left hand has some minor issues, because of which I cannot type or hold on to things with it, particularly with my thumb. This made me realize that it would make the game difficult not only to program, but also to play.

My misery made me conscious of users who may struggle with the conventional controls typically offered in gaming: a joystick and some buttons. It made me wonder what I could do differently to make this game more accessible to people like me, who find it difficult to interact with a program through a tangible medium. Hence, I decided to use hand-tracking technology and sound classification. There is a lot of buzz around AI, and I thought: why not use a machine learning library to enhance my project? Still, I couldn't settle on a topic or genre to work on.

At first, I came up with the idea of a boxing game. Using head tracking and hand tracking, the user would face off against the computer, dodging by moving their head left or right to move in the corresponding direction. To hit, they would have to close their hand and move their whole arm to land a punch.

Flow chart of basic logic construct

I drafted the basic visuals and what I wanted the game to look like, but as I started working on it, I realized that it portrayed violence, which felt unbefitting for an academic setting. Moreover, I wasn't in the mood to throw punches and knock my laptop over, since I am a bit of a clumsy person. This was when my genius kicked in. Just one day before, I decided to scrap the whole thing and start again on a new project. This time, it is what I love the most. You guessed it: Beyblade!

The whole idea revolves around two spinning metal tops, viewed from above, rotating in a stadium. The tops hit one another, and each impact either slows them down or knocks one of them (sometimes both) out of the stadium. The first one to be knocked out or to stop spinning loses, and the other one wins.

I wanted to implement this and give the user the ability to control their blade using hand gestures. The user moves their blade around by pointing their index finger and thumb in the direction they want it to go. The catch, however, is that the blade moves only while the thumb and index finger are closed (touching); to attack and inflict damage on the opponent, the thumb and index finger must be apart. To protect yourself from damage, you either dodge or keep your fingers open. As far as I know, these controls have not been used in other games of a similar genre. I came up with them because I cannot properly grip with my left thumb and index finger, and I wanted to put exactly those fingers to use in the game.
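Here is a minimal JavaScript sketch of those battle rules, not the game's actual code. It assumes each top is a circle with a position, a radius and a `spin` value, and that the stadium is a circle; the names (`Top`, `resolveCollision`, `checkWinner`), the spin lost per hit, the knock-back distance and treating a double knock-out as a draw are all hypothetical choices for illustration.

```javascript
// Hypothetical model of one spinning top: position, radius and remaining spin.
class Top {
  constructor(name, x, y, radius, spin) {
    this.name = name;
    this.x = x;
    this.y = y;
    this.radius = radius;
    this.spin = spin; // reaches 0 when the top stops spinning
  }
}

// Assumed circular stadium centred at (cx, cy).
const stadium = { cx: 200, cy: 200, radius: 180 };

// True when the two tops' circles overlap (a hit).
function isColliding(a, b) {
  return Math.hypot(a.x - b.x, a.y - b.y) < a.radius + b.radius;
}

// On a hit, both tops lose spin and are pushed apart along the line between
// their centres. spinLoss and knockback are made-up tuning values.
function resolveCollision(a, b, spinLoss = 10, knockback = 15) {
  if (!isColliding(a, b)) return false;
  const dx = b.x - a.x;
  const dy = b.y - a.y;
  const dist = Math.hypot(dx, dy) || 1; // avoid dividing by zero if centres coincide
  const nx = dx / dist;
  const ny = dy / dist;
  a.x -= nx * knockback;
  a.y -= ny * knockback;
  b.x += nx * knockback;
  b.y += ny * knockback;
  a.spin = Math.max(0, a.spin - spinLoss);
  b.spin = Math.max(0, b.spin - spinLoss);
  return true;
}

// A top is out if it has stopped spinning or its centre has left the stadium.
function isOut(top) {
  const d = Math.hypot(top.x - stadium.cx, top.y - stadium.cy);
  return top.spin <= 0 || d > stadium.radius;
}

// Returns the winner's name, "draw" if both are out, or null while the battle continues.
function checkWinner(a, b) {
  const aOut = isOut(a);
  const bOut = isOut(b);
  if (aOut && bOut) return "draw";
  if (aOut) return b.name;
  if (bOut) return a.name;
  return null;
}
```

Calling `resolveCollision` and then `checkWinner` once per frame would be enough to end the battle as soon as one top stops spinning or leaves the ring.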

I have decided to use game states to transition between the menu, the instruction screen, gameplay, the win/lose screen, and back to the menu. This keeps the code modular: each screen lives in its own function, and the 'draw' function only has to call the one that matches the current state.
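A minimal sketch of that state machine in plain JavaScript, as it could be used in p5.js. The state names and the `drawMenu`/`drawGameplay`-style helpers are hypothetical; in a real sketch they would draw to the canvas, while here they return a label so the logic can run on its own.

```javascript
// Hypothetical state names for the screens listed above.
const STATES = Object.freeze({
  MENU: "menu",
  INSTRUCTIONS: "instructions",
  GAMEPLAY: "gameplay",
  RESULT: "result", // the win/lose screen
});

// Transitions: menu -> instructions -> gameplay -> result -> menu.
const NEXT_STATE = {
  [STATES.MENU]: STATES.INSTRUCTIONS,
  [STATES.INSTRUCTIONS]: STATES.GAMEPLAY,
  [STATES.GAMEPLAY]: STATES.RESULT,
  [STATES.RESULT]: STATES.MENU,
};

let gameState = STATES.MENU;

// One function per screen. In p5.js these would draw to the canvas;
// here they just return a label so the sketch runs outside p5.
function drawMenu() { return "menu screen"; }
function drawInstructions() { return "instructions screen"; }
function drawGameplay() { return "battle"; }
function drawResult() { return "win/lose screen"; }

// Called from p5's draw(): dispatch to the current screen.
function drawCurrentState() {
  switch (gameState) {
    case STATES.MENU: return drawMenu();
    case STATES.INSTRUCTIONS: return drawInstructions();
    case STATES.GAMEPLAY: return drawGameplay();
    case STATES.RESULT: return drawResult();
    default: throw new Error(`Unknown state: ${gameState}`);
  }
}

// Move to the next screen, e.g. on a key press or when the battle ends.
function advanceState() {
  gameState = NEXT_STATE[gameState];
  return gameState;
}
```

With this in place, p5's `draw()` would only need to call `drawCurrentState()`, and a key press or the end of a battle would call `advanceState()`.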

![Legal stadiums: the legal tournament stadiums that bladers can agree to battle on in Official Ranked Battles](https://pm1.aminoapps.com/7675/b2fd66ad6e358d1a334637c68041eff8616dd030r1-512-288v2_hq.jpg)

ML5:

To make the controls work, I will rely on ml5.js, a library that brings machine learning to web-based projects. In my case, I will use its handPose and soundClassifier modules, which are pre-trained models, so I won't have to go through the hassle of training a model for the game.

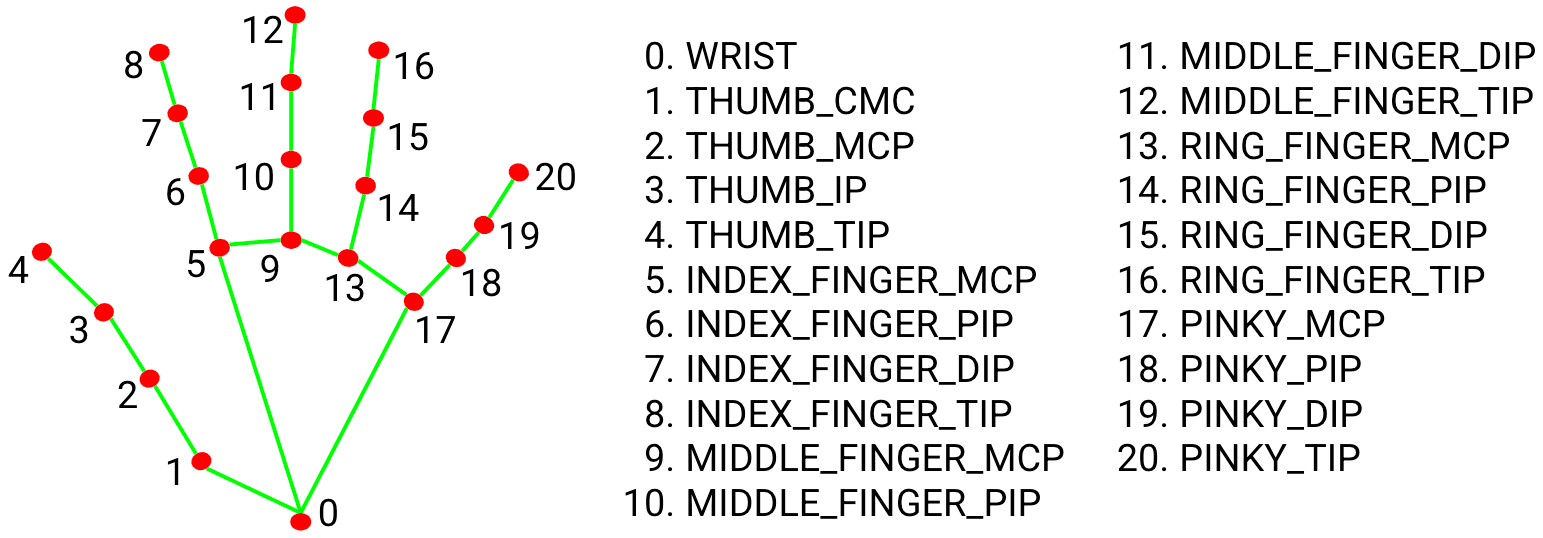

Using keypoints 4 and 8 (the thumb tip and the index fingertip) and measuring the distance between them, I plan to return boolean values that will control the movement of the blade. I also referred to The Coding Train's YouTube channel to learn how this works and to implement it in my code.
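In the 21-point hand landmark layout that handPose uses, keypoint 4 is the thumb tip and keypoint 8 is the index fingertip. The sketch below turns their distance into the boolean described above and maps it to the move/attack controls. It assumes each keypoint is an `{x, y}` object in canvas pixels; the 30-pixel threshold and the function names are hypothetical, and the exact way keypoints are read from ml5.js depends on the library version, so that part is left out.

```javascript
// Indices in the 21-point hand landmark layout.
const THUMB_TIP = 4;
const INDEX_TIP = 8;

// Assumed pixel distance below which the fingers count as "closed".
const PINCH_THRESHOLD = 30;

// keypoints: array of {x, y} objects for one hand (assumed shape).
function isPinched(keypoints, threshold = PINCH_THRESHOLD) {
  const thumb = keypoints[THUMB_TIP];
  const index = keypoints[INDEX_TIP];
  if (!thumb || !index) return false; // incomplete data
  return Math.hypot(thumb.x - index.x, thumb.y - index.y) < threshold;
}

// Map the pinch state to the control scheme:
// closed fingers move the blade, open fingers attack.
function controlMode(keypoints) {
  if (!keypoints || keypoints.length <= INDEX_TIP) return "none"; // no (complete) hand
  return isPinched(keypoints) ? "move" : "attack";
}
```

A fixed pixel threshold depends on how close the hand is to the camera; dividing the distance by some measure of hand size (for example, wrist to middle knuckle) would make the check less sensitive to that.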

I have yet to figure out how to use sound classification, but I will cover it in my final project presentation post.
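One possible way to turn the sound classifier's output into a true/false trigger, assuming its results arrive as an array of `{ label, confidence }` objects. The trigger word `"go"`, the 0.8 confidence cut-off and the function name are hypothetical.

```javascript
// Hypothetical trigger word and confidence cut-off for the signature move.
const TRIGGER_LABEL = "go";
const MIN_CONFIDENCE = 0.8;

// results: array of {label, confidence} objects (assumed shape).
// e.g. inside the classifier's result callback:
//   if (shouldTriggerSpecial(results)) { /* start the signature move */ }
function shouldTriggerSpecial(results, label = TRIGGER_LABEL, minConfidence = MIN_CONFIDENCE) {
  if (!Array.isArray(results) || results.length === 0) return false;
  // Pick the most confident guess without relying on the array being sorted.
  const best = results.reduce((a, b) => (b.confidence > a.confidence ? b : a));
  return best.label === label && best.confidence >= minConfidence;
}
```

Logging the raw results before this check shows whether the expected label is being recognized at all, or whether the cut-off is simply too strict.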

Code (functions, classes, interactivity):

Class and Objects – Pseudo code.

I have yet to write the code, and my limited mobility has slowed my progress. Nonetheless, I have sketched out the basic class and constructor function for both objects (i.e., the player and the opponent). Each blade will have a speed and a position in the horizontal and vertical directions, as well as methods for displaying and moving the blade. To check for damage and control the main layout of the game, if/else statements will be placed in a separate function, which will then be called inside the 'draw' function.
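A minimal sketch of that plan in p5.js-style JavaScript. The `Blade` fields follow the description (speed and x/y position, with move and display methods); the `health` stat, the hit range, the damage amount and the `checkDamage` rules (a hit lands only when the attacker's fingers are open and the defender's are closed) are assumptions based on the controls described earlier. `display()` uses the p5.js global functions `fill` and `circle`, so it only works inside a running sketch.

```javascript
class Blade {
  constructor(x, y, speed, health = 100) {
    this.x = x;
    this.y = y;
    this.speed = speed;
    this.health = health; // hypothetical stat used by checkDamage
  }

  // Step in the direction (dx, dy); normalised so diagonal moves are not faster.
  move(dx, dy) {
    const len = Math.hypot(dx, dy);
    if (len === 0) return;
    this.x += (dx / len) * this.speed;
    this.y += (dy / len) * this.speed;
  }

  // Draw the blade as a circle; needs p5.js global mode (fill, circle).
  display(size = 40) {
    fill(200);
    circle(this.x, this.y, size);
  }
}

// if/else damage check, meant to be called once per frame from draw().
// Returns the damage dealt to the defender (0 if the hit does not land).
function checkDamage(attacker, defender, attackerOpen, defenderOpen, hitRange = 40, damage = 5) {
  const dist = Math.hypot(attacker.x - defender.x, attacker.y - defender.y);
  if (dist > hitRange) {
    return 0; // blades are not touching
  } else if (!attackerOpen) {
    return 0; // fingers closed: the attacker is only moving
  } else if (defenderOpen) {
    return 0; // defender keeps fingers open, so the hit is blocked
  } else {
    defender.health = Math.max(0, defender.health - damage);
    return damage;
  }
}
```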

Complex and trickiest part:

The trickiest part is the machine learning integration. In my tests, hand-gesture detection works, but despite training the sound classifier, it still does not return true, which is the signal that will trigger the signature move. Moreover, I want a delay between the user's hand gesture and the Beyblade's movement in that direction. Implementing this lagging, 'rag doll'-style motion is what I expect to be the most challenging part.
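One simple way to get that delay is easing: every frame, the blade covers a fixed fraction of the remaining distance to the hand's position, so it trails behind the gesture instead of snapping to it. This is a stand-in for the effect described, not a true rag-doll physics simulation; the easing factor and the function name are hypothetical.

```javascript
// Assumed fraction of the remaining distance covered each frame.
// Smaller values mean more lag between the gesture and the blade.
const EASING = 0.1;

// Move the blade part of the way toward the hand position every frame,
// e.g. in draw(): followWithLag(player, handX, handY);
function followWithLag(blade, targetX, targetY, easing = EASING) {
  blade.x += (targetX - blade.x) * easing;
  blade.y += (targetY - blade.y) * easing;
  return blade;
}
```

p5.js also provides a `lerp()` function that performs the same interpolation. A spring with velocity and damping would add some overshoot if a bouncier feel is wanted.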

Tackling the problems and risks:

To minimize the mess in the p5 coding environment, I am grouping related logic into individual functions rather than dumping it straight into the 'draw' function. This keeps the code organized and clean, and lets me reuse it multiple times. Secondly, using ml5.js was a bit risky, since it hasn't been covered in class, and the tutorial series requires time and dedication. On top of that, my limited hand mobility put me at a disadvantage. Still, I decided to go with this idea because I simply want it to be unique: something that makes the player want to play the game again. To make this possible, I am integrating the original soundtrack from the game and adding special effects, for example when damage is inflicted.

Aside from the theming, I did face an issue with letting the user know whether their hand is being tracked in the first place. I solved it by simply layering the canvas on top of the video. As of now, I am still working on it from scratch, and I will document further issues and fixes in the final documentation for this midterm project.

Basic layout visualized.

Basic gameplay features:

Game states