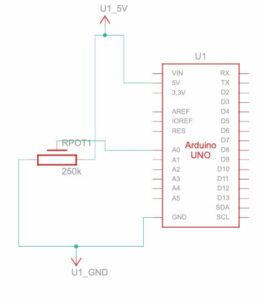

Example 1 – Arduino to p5 Communication

p5 code

let X = 0; // ellipse x position

function setup() {

createCanvas(400, 400);

}

function draw() {

background(240);

fill('rgb(134,126,126)');

ellipse(X, height/2, 80);

}

function keyPressed() {

if (key === " ") {

setUpSerial(); // Start the serial connection on space bar press

}

}

// Processes incoming data from Arduino

function readSerial(data) {

if (data != null) {

let fromArduino = split(trim(data), ","); // Split incoming data by commas

if (fromArduino.length >= 1) {

// Process the first value for X position

let potValue = int(fromArduino[0]); // Convert string to integer

X = map(potValue, 0, 1023, 50, width - 50); // Map potentiometer value (0-1023) to X position

// Y is fixed at height / 2 in draw(), so only X needs updating

}

}

}
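
To make the data path in readSerial() concrete, here is a minimal sketch in plain JavaScript (no p5 or serial library needed) of how one line of text from the Arduino might become an x position. The names `mapRange` and `potLineToX` are hypothetical helpers invented for this illustration; `mapRange` stands in for p5's map(), and the width of 400 comes from createCanvas(400, 400) above.

```javascript
// Linear re-mapping, a plain-JavaScript stand-in for p5's map() (hypothetical helper)
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Convert one serial line such as "512\r\n" into an x position (hypothetical helper).
// Returns null when the line does not start with a number.
function potLineToX(line, canvasWidth) {
  const fields = line.trim().split(",");
  const potValue = parseInt(fields[0], 10);
  if (Number.isNaN(potValue)) {
    return null;
  }
  return mapRange(potValue, 0, 1023, 50, canvasWidth - 50);
}

console.log(potLineToX("0\r\n", 400));    // 50
console.log(potLineToX("1023\r\n", 400)); // 350
console.log(potLineToX("", 400));         // null
```

Returning null for an unparsable line is one possible choice; it lets the caller keep the previous position instead of drawing with NaN.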

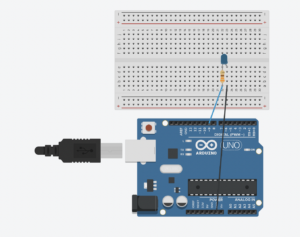

Arduino code

void setup() {

Serial.begin(9600); // Start serial communication at 9600 baud

}

void loop() {

int potValue = analogRead(A0); // potentiometer value

Serial.println(potValue); // send the value to the serial port

delay(10);

}

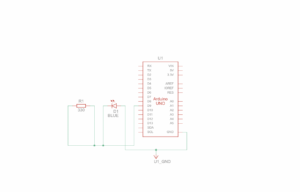

Example 2 – p5 to Arduino Communication

p5 code

let brightness = 0; // Brightness value to send to Arduino

function setup() {

createCanvas(640, 480);

textSize(18);

}

function draw() {

background(255);

if (!serialActive) {

// If serial is not active, display the instruction

text("Press Space Bar to select Serial Port", 20, 30);

} else {

// If serial is active, display connection status and brightness value

text("Connected", 20, 30);

text('Brightness = ' + str(brightness), 20, 50);

}

// Map the mouseX position (0 to width) to brightness (0 to 255)

brightness = map(mouseX, 0, width, 0, 255);

brightness = constrain(brightness, 0, 255); // Ensures brightness is within the range 0-255

// Sends brightness value to Arduino

sendToArduino(brightness);

}

function keyPressed() {

if (key == " ") {

// Start serial connection when the space bar is pressed

setUpSerial();

}

}

function readSerial(data) {

if (data != null) {

}

}

// readSerial() is left empty above because the Arduino does not send any values in this example

// Send brightness value to Arduino

function sendToArduino(value) {

if (serialActive) {

let message = int(value) + "\n"; // Convert brightness to an integer, add newline (renamed so it no longer shadows the function name)

writeSerial(message); // Send the value using the serial library

}

}
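
The text message that sendToArduino() writes can be sketched without p5 as follows. This is only an illustration: `brightnessMessage` is a hypothetical helper, the arithmetic mirrors the map() and constrain() calls in draw(), and the width of 640 comes from createCanvas(640, 480).

```javascript
// Build the newline-terminated message for a given mouse position (hypothetical helper)
function brightnessMessage(mouseX, canvasWidth) {
  const scaled = (mouseX / canvasWidth) * 255;        // same result as map(mouseX, 0, width, 0, 255)
  const clamped = Math.min(255, Math.max(0, scaled)); // same result as constrain(scaled, 0, 255)
  return Math.trunc(clamped) + "\n";                  // whole number followed by a newline
}

console.log(JSON.stringify(brightnessMessage(0, 640)));   // "0\n"
console.log(JSON.stringify(brightnessMessage(320, 640))); // "127\n"
console.log(JSON.stringify(brightnessMessage(900, 640))); // "255\n"
```

The trailing newline matters because it gives the receiving side an unambiguous end-of-value marker.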

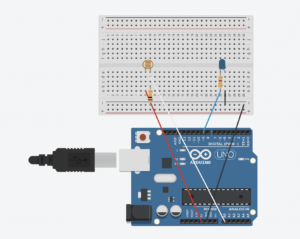

Arduino code

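const int ledPin = 9; // Assumption: LED on PWM pin 9, as in Example 3

void setup() {

Serial.begin(9600); // Start serial communication at 9600 baud

pinMode(ledPin, OUTPUT); // Set LED pin as an output

}

void loop() {

if (Serial.available() > 0) {

int brightness = Serial.parseInt(); // Brightness value (0-255) sent by p5

if (Serial.read() == '\n') {

analogWrite(ledPin, constrain(brightness, 0, 255)); // Set the LED brightness

}

}

}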

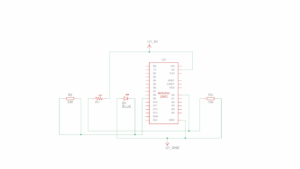

Example 3 – Bi-Directional Communication

p5 code

let velocity;

let gravity;

let position;

let acceleration;

let wind;

let drag = 0.99;

let mass = 50;

let windValue = 0; // Wind value from Arduino (light sensor)

let serialConnected = false; // Tracks if the serial port is selected

function setup() {

createCanvas(640, 360);

textSize(18);

noFill();

position = createVector(width / 2, 0);

velocity = createVector(0, 0);

acceleration = createVector(0, 0);

gravity = createVector(0, 0.5 * mass);

wind = createVector(0, 0);

}

function draw() {

background(255);

if (!serialConnected) {

// Show instructions to connect serial port

textAlign(CENTER, CENTER);

fill(0); // Black text

text("Press Space Bar to select Serial Port", width / 2, height / 2);

return; // Stop loop until the serial port is selected

} else if (!serialActive) {

// Show connection status while waiting for serial to activate

textAlign(CENTER, CENTER);

fill(0);

text("Connecting to Serial Port...", width / 2, height / 2);

return; // Stop until the serial connection is active

} else {

// Display the wind value and run the simulation once connected

textAlign(LEFT, TOP);

fill(0);

text("Connected", 20, 30);

text('Wind Value: ' + windValue, 20, 50);

}

// Apply forces to the ball

applyForce(wind);

applyForce(gravity);

// Update motion

velocity.add(acceleration);

velocity.mult(drag);

position.add(velocity);

acceleration.mult(0);

// Draw the ball

ellipse(position.x, position.y, mass, mass);

// Check for bounce

if (position.y > height - mass / 2) {

velocity.y *= -0.9;

position.y = height - mass / 2; // Keep the ball on top of the floor

// Sends signal to Arduino about the bounce

sendBounceSignal();

}

// Update wind force based on Arduino input

wind.x = map(windValue, 0, 1023, -1, 1); // Map sensor value to wind range

}

function applyForce(force) {

let f = p5.Vector.div(force, mass);// Force divided by mass gives acceleration

acceleration.add(f);

}

// Send a bounce signal to Arduino

function sendBounceSignal() {

if (serialActive) {

let sendToArduino = "1\n"; // Signal Arduino with "1" for a bounce

writeSerial(sendToArduino);

}

}

// Handle incoming serial data from Arduino

function readSerial(data) {

if (data != null) {

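let value = int(trim(data)); // Parse the incoming line as an integer

if (!isNaN(value)) {

windValue = value; // Store the wind value, ignoring empty or malformed lines

}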

}

}

// Press space bar to initialize serial connection

function keyPressed() {

if (key == " ") {

setUpSerial(); // Initialize the serial connection

serialConnected = true; // Mark the serial port as selected

}

}

// Callback when serial port is successfully opened

function serialOpened() {

serialActive = true; // Mark the connection as active

}
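
The per-frame motion update in draw() can be followed more easily as plain arithmetic. Below is a minimal sketch, assuming plain objects in place of p5.Vector and leaving out the bounce check; `step` is a hypothetical helper, while `drag`, `mass`, and the 0 to 1023 sensor range are taken from the code above. One difference to note: here the wind is computed before it is applied, whereas the sketch above updates wind.x at the end of draw(), so it takes effect one frame later.

```javascript
const drag = 0.99;
const mass = 50;

// Advance the ball by one frame (hypothetical helper; plain objects replace p5.Vector)
function step(state, windValue) {
  // Map the sensor reading (0..1023) to a horizontal wind force (-1..1)
  const windX = -1 + (windValue / 1023) * 2;
  const gravityY = 0.5 * mass;

  // Force divided by mass gives acceleration
  const ax = windX / mass;
  const ay = gravityY / mass;

  // Velocity picks up the acceleration, then loses a little to drag
  const vx = (state.vx + ax) * drag;
  const vy = (state.vy + ay) * drag;

  // Position moves by the new velocity
  return { x: state.x + vx, y: state.y + vy, vx, vy };
}

const next = step({ x: 320, y: 0, vx: 0, vy: 0 }, 1023);
console.log(next.vy.toFixed(3)); // 0.495
console.log(next.vx.toFixed(4)); // 0.0198
```

Because gravity is defined as 0.5 * mass and every force is divided by mass, the ball always falls with an acceleration of 0.5 per frame regardless of the mass value, while the wind's effect shrinks as the mass grows.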

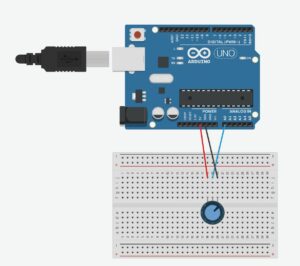

Arduino code

const int ledPin = 9; // Digital pin 9 connected to the LED

const int lightSensor = A0; // Analog input pin A0 for the light sensor

void setup() {

Serial.begin(9600); // Start serial communication

pinMode(ledPin, OUTPUT); // Set LED pin as an output

}

void loop() {

// Read the light sensor value

int lightValue = analogRead(lightSensor);

// Send the light value to p5.js

Serial.println(lightValue);

// Check for bounce signal (when ball touches the ground) from p5.js

if (Serial.available() > 0) {

int bounceSignal = Serial.parseInt();

if (Serial.read() == '\n' && bounceSignal == 1) {

// Turn on the LED briefly to indicate a bounce

digitalWrite(ledPin, HIGH);

delay(100);

digitalWrite(ledPin, LOW);

}

}

delay(50); // Small delay

}