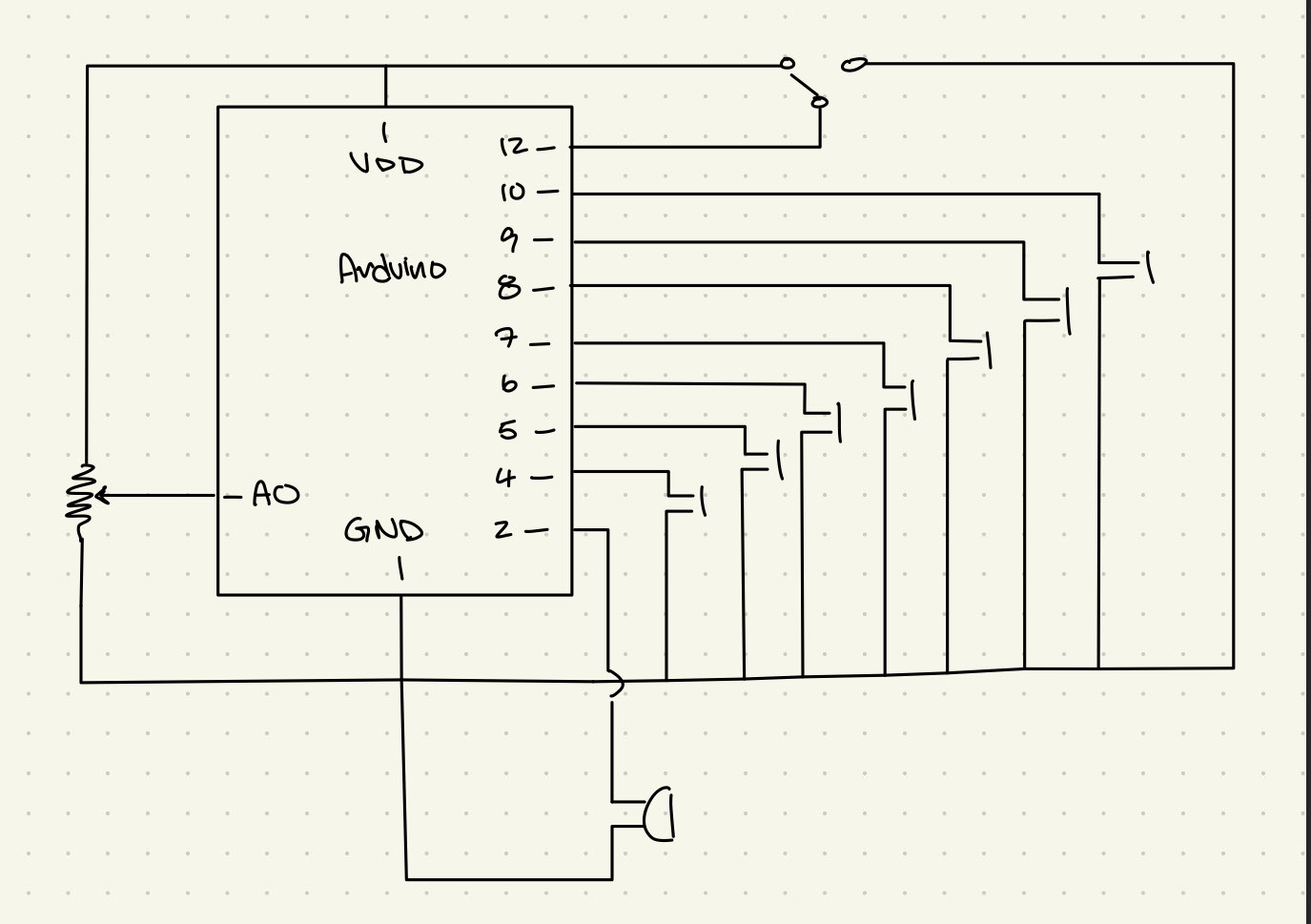

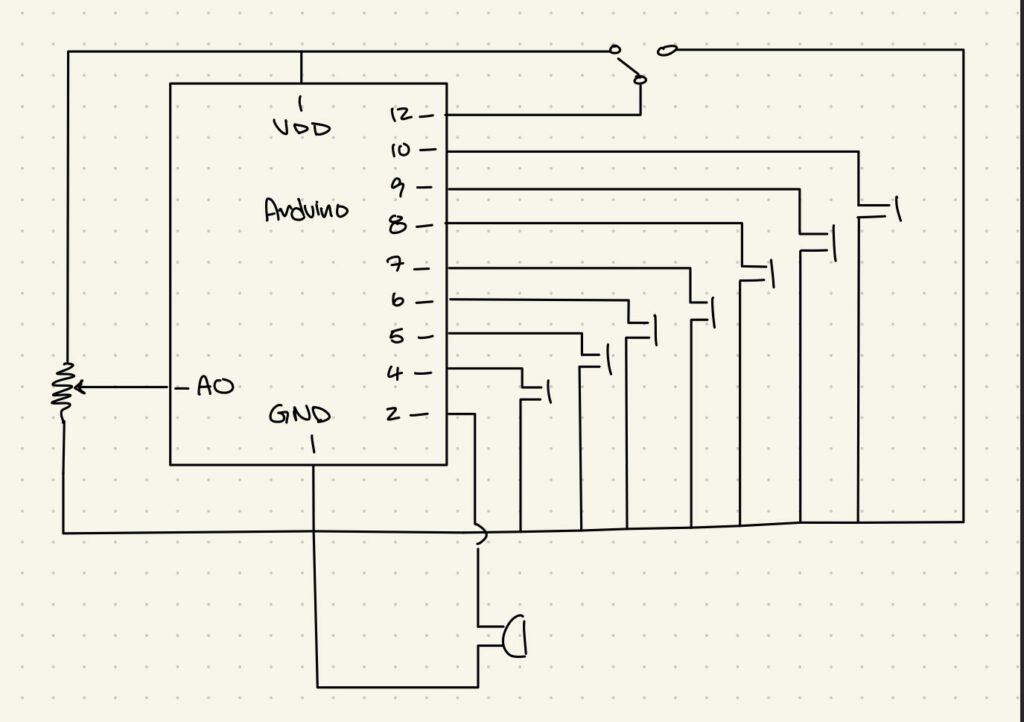

Concept

Our project, “Echoes of Light,” which Ibrahim and I built together, emerged from a shared interest in using technology to create expressive, interactive experiences. Inspired by the way sound changes with distance, we set out to build a musical instrument that reacts naturally to light and proximity. By combining a photoresistor and an ultrasonic distance sensor, we made an instrument that lets users shape sound through simple gestures: light controls the volume and hand distance controls the pitch. The project was both a creative exploration and a chance to refine our Arduino skills together.

Materials Used

- Arduino Uno R3

- Photoresistor: Adjusts volume based on light levels.

- Ultrasonic Distance Sensor (HC-SR04): Modifies pitch according to distance from an object.

- Piezo Buzzer/Speaker: Outputs the sound with controlled pitch and volume.

- LED: Provides an adjustable light source for the photoresistor.

- Switch: Toggles the LED light on and off.

- Resistors: For the photoresistor and LED setup.

- Breadboard and Jumper Wires
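To see how the photoresistor and its resistor turn light into a volume level, the arithmetic can be worked through off the board. This is a minimal sketch under assumptions the write-up does not state: a fixed resistor from 5V to A0 with the photoresistor from A0 to GND, and a 10 kΩ fixed resistor in the usage note. The helper names `mapRange`, `dividerReading`, and `readingToVolume` are hypothetical.

```cpp
// Hypothetical helpers for reasoning about the light sensor off the board.
// Assumed wiring (not stated in the write-up): a fixed resistor from 5V to A0
// and the photoresistor from A0 to GND, read by the Uno's 10-bit ADC.

// Same integer formula as Arduino's built-in map().
constexpr long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Expected analogRead() value (0-1023) for a given photoresistor resistance.
constexpr long dividerReading(long ldrOhms, long fixedOhms) {
  return 1023L * ldrOhms / (ldrOhms + fixedOhms);
}

// Volume (0-255) from a reading: a lower reading (more light) means louder.
constexpr long readingToVolume(long reading) {
  return mapRange(reading, 1023, 0, 0, 255);
}
```

Under these assumptions, a brightly lit photoresistor near 1 kΩ against a 10 kΩ resistor would read about 93 and map to a volume of 231, while equal resistances would read 511 and map to 127. Real values depend on the actual parts and wiring.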

Code

The code controls volume and pitch using the analog input from the photoresistor and the digital input from the ultrasonic sensor. The complete sketch includes the value mappings and serial debugging lines for easy tracking.

    // Define pins for the components
    const int trigPin = 5;            // Trigger pin for distance sensor
    const int echoPin = 6;            // Echo pin for distance sensor
    const int speakerPin = 10;        // Speaker PWM pin (must be a PWM pin for volume control)
    const int ledPin = 2;             // LED pin
    const int switchPin = 3;          // Switch pin
    const int photoResistorPin = A0;  // Photoresistor analog pin

    // Variables for storing sensor values
    int photoResistorValue = 0;
    long duration;
    int distance;

    void setup() {
      Serial.begin(9600);                // Initialize serial communication for debugging
      pinMode(trigPin, OUTPUT);          // Set trigger pin as output
      pinMode(echoPin, INPUT);           // Set echo pin as input
      pinMode(speakerPin, OUTPUT);       // Set speaker pin as output (PWM)
      pinMode(ledPin, OUTPUT);           // Set LED pin as output
      pinMode(switchPin, INPUT_PULLUP);  // Set switch pin as input with pull-up resistor
    }

    void loop() {
      // Light the LED while the switch is closed (the pin reads LOW because of the pull-up)
      if (digitalRead(switchPin) == LOW) {
        digitalWrite(ledPin, HIGH);  // Turn LED on
      } else {
        digitalWrite(ledPin, LOW);   // Turn LED off
      }

      // Read photoresistor value to adjust volume
      photoResistorValue = analogRead(photoResistorPin);

      // Map photoresistor value to a range for volume control (0-255 for PWM)
      // Higher light level (LED on) -> lower photoresistor reading -> higher volume
      int volume = map(photoResistorValue, 1023, 0, 0, 255);  // Adjust mapping for your setup

      // Measure distance using the ultrasonic sensor: send a 10 microsecond trigger pulse
      digitalWrite(trigPin, LOW);
      delayMicroseconds(2);
      digitalWrite(trigPin, HIGH);
      delayMicroseconds(10);
      digitalWrite(trigPin, LOW);

      // Time the echo pulse; the 30 ms timeout keeps loop() from stalling when
      // no echo arrives (pulseIn then returns 0, which reads as out of range)
      duration = pulseIn(echoPin, HIGH, 30000UL);

      // Calculate distance in cm (sound travels ~0.034 cm/us; halve for the round trip)
      distance = duration * 0.034 / 2;

      // Set frequency based on distance in the range of 2-30 cm
      int frequency = 0;
      if (distance >= 2 && distance <= 30) {
        // Closer = higher pitch, farther = lower pitch; the input range matches the check above
        frequency = map(distance, 2, 30, 20000, 2000);
        tone(speakerPin, frequency);
        // Apply the volume based on the photoresistor reading. Note that tone() and
        // analogWrite() both drive speakerPin, so how well the two combine may
        // depend on the board and speaker.
        analogWrite(speakerPin, volume);
      } else {
        noTone(speakerPin);  // Silence the speaker if the distance is out of range
      }

      // Debugging output
      Serial.print("Photoresistor: ");
      Serial.print(photoResistorValue);
      Serial.print("\tVolume: ");
      Serial.print(volume);
      Serial.print("\tDistance: ");
      Serial.print(distance);
      Serial.print(" cm\tFrequency: ");
      Serial.println(frequency);

      delay(100);  // Short delay for sensor readings
    }
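The pitch arithmetic in `loop()` can be checked without the hardware. The following is a minimal sketch, not part of our original code: `echoToCm` and `distanceToFrequency` are hypothetical helper names, the speed of sound is taken as roughly 0.034 cm per microsecond, and the 20000-2000 Hz span is assumed to be spread across the 2-30 cm playing range. Integer math is used so the results are exact.

```cpp
// Hypothetical helpers mirroring the arithmetic in loop(); not part of the
// original sketch.

// Echo pulse width (microseconds) -> distance in cm. Assumes sound travels
// about 0.034 cm per microsecond; the pulse covers the trip out and back,
// so the product is halved (34 / 2000 == 0.034 / 2).
constexpr int echoToCm(long durationMicros) {
  return static_cast<int>(durationMicros * 34 / 2000);
}

// Distance (cm) -> tone frequency (Hz), using the same integer formula as
// Arduino's map(): 2 cm -> 20000 Hz, 30 cm -> 2000 Hz (assumed span).
// Returns 0 (silence) outside the 2-30 cm playing range.
constexpr int distanceToFrequency(int cm) {
  return (cm < 2 || cm > 30)
             ? 0
             : static_cast<int>((cm - 2L) * (2000L - 20000L) / (30L - 2L) + 20000L);
}
```

For example, a 1000 microsecond echo works out to 17 cm, which these assumptions map to 10358 Hz; a hand at 16 cm, the middle of the range, gives 11000 Hz.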

Video Demonstration

In our video demonstration, we showcase how the instrument responds to changes in light and proximity. We toggle the LED to adjust volume and move a hand closer to or farther from the ultrasonic sensor to change pitch, demonstrating the instrument’s sensitivity and interactive potential.

Reflections

The project successfully combines multiple sensors to create a reactive sound device. The integration of volume and pitch control allows for intuitive, responsive sound modulation, achieving our goal of designing an engaging, interactive instrument.

Improvements:

To improve this instrument, we would expand its melodic range for a more refined and versatile sound. This could involve additional sensors or more sophisticated sound generation methods to provide a broader tonal range and richer melodies.
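One possible direction, sketched here as an idea rather than something we built, is to snap the hand distance to the notes of a scale so the instrument plays distinct pitches instead of a continuous sweep. The scale choice (C major pentatonic, C4 to A5), the equal-width distance bands, and the names `kScaleHz` and `distanceToNote` are all assumptions for illustration.

```cpp
// Hypothetical extension, not part of the original sketch: quantize the hand
// distance to a C major pentatonic scale (frequencies rounded to whole Hz).
constexpr int kScaleHz[] = {262, 294, 330, 392, 440, 523, 587, 659, 784, 880};
constexpr int kNoteCount = sizeof(kScaleHz) / sizeof(kScaleHz[0]);

// Assumed playing range, matching the 2-30 cm window used for pitch.
constexpr int kMinCm = 2;
constexpr int kMaxCm = 30;

// Returns the note frequency (Hz) for a distance, or 0 when out of range.
// The range is split into kNoteCount roughly equal bands; closer hands pick
// higher notes, keeping the "closer = higher pitch" behavior.
constexpr int distanceToNote(int cm) {
  return (cm < kMinCm || cm > kMaxCm)
             ? 0
             : kScaleHz[(kNoteCount - 1) -
                        (cm - kMinCm) * kNoteCount / (kMaxCm - kMinCm + 1)];
}
```

In the sketch, the result could replace the mapped frequency, for example by calling `tone(speakerPin, distanceToNote(distance))` whenever the returned value is nonzero.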