Safe Russian Roulette

Introduction

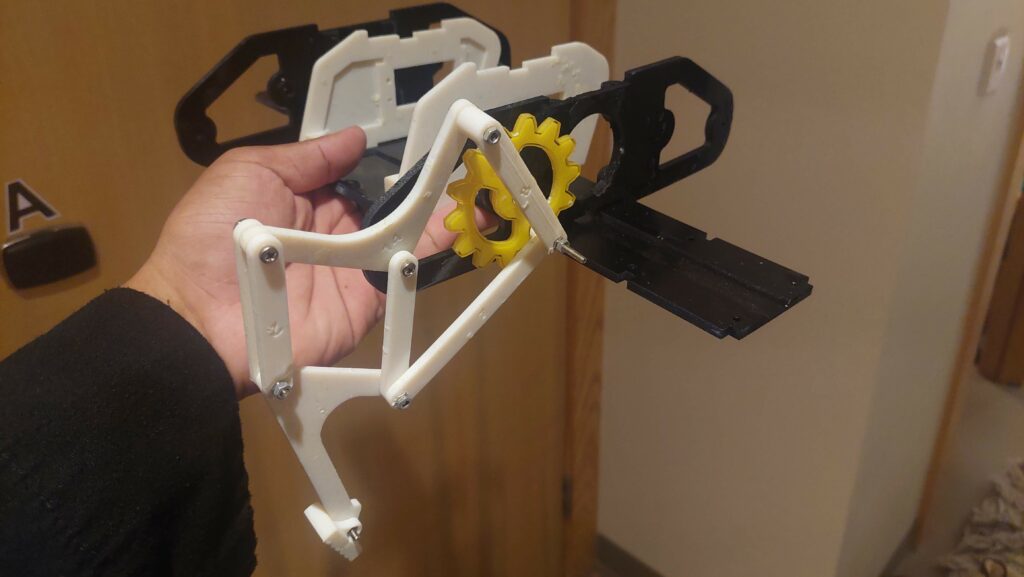

After doing more research and ideation, I now know exactly what I am going to create. There may be some changes down the road, but the final project should be at least 90% like what I outline here.

Inspiration

I originally had this idea before the midterm, and it was going to be my midterm project, but I switched to the Mexican Standoff game instead. What I really wanted for both projects was to make a game, or anything interactive, that at least two people could enjoy together.

I was also inspired by the indie game Buckshot Roulette. In it, the player faces a strange-looking dealer; both of them have lives, represented by lightning bolts, and there are abilities that make the game more tactical. I wanted to almost recreate the game, or at least its aesthetic, but for now I will leave out the abilities, since I first need to finish the foundation, i.e. plain Russian Roulette.

The lives of both the dealer and the player are shown as lightning bolts because each of them can be revived with a defibrillator. It was these lightning bolts that led me to the idea of a player receiving an electric shock.

The lives display and the little menu on the side of the table then inspired me to make a p5js sketch with a similar aesthetic.

How Russian Roulette works & how this game will work

In real Russian Roulette, a revolver is loaded with one live round, and the remaining chambers are either empty or hold blanks.

In Safe Russian Roulette there will also be at least one live “round”, with the rest being blanks, for 6 rounds in total. Depending on the chosen difficulty, between 1 and 5 rounds will be live. The difficulties, in ascending order, are Standard (1 live round), Hard (1-2 live rounds), Harder (1-3 live rounds), Nearly Impossible (2-4 live rounds) and Masochist (3-5 live rounds).
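The difficulty ranges above map naturally onto a small lookup table. Below is a minimal TypeScript sketch, not the project's actual code, of loading the six-round cylinder: it picks a live-round count within the difficulty's range (assuming every count in the range is equally likely) and then shuffles the rounds. The names `loadCylinder`, `LIVE_RANGE` and the injectable `rng` parameter are hypothetical.

```typescript
// Hypothetical sketch: build a shuffled 6-round cylinder for a difficulty.
// Difficulty names and live-round ranges come from the post; picking the
// count uniformly within each range is an assumption.
type Difficulty = "Standard" | "Hard" | "Harder" | "Nearly Impossible" | "Masochist";
type Round = "live" | "blank";

const CHAMBERS = 6;

// [min, max] number of live rounds for each difficulty (inclusive).
const LIVE_RANGE: Record<Difficulty, [number, number]> = {
  "Standard": [1, 1],
  "Hard": [1, 2],
  "Harder": [1, 3],
  "Nearly Impossible": [2, 4],
  "Masochist": [3, 5],
};

// Integer in [min, max], given a random source returning values in [0, 1).
function randomInt(min: number, max: number, rng: () => number): number {
  return min + Math.floor(rng() * (max - min + 1));
}

// Fisher-Yates shuffle, in place.
function shuffle<T>(items: T[], rng: () => number): T[] {
  for (let i = items.length - 1; i > 0; i--) {
    const j = Math.floor(rng() * (i + 1));
    [items[i], items[j]] = [items[j], items[i]];
  }
  return items;
}

function loadCylinder(difficulty: Difficulty, rng: () => number = Math.random): Round[] {
  const [min, max] = LIVE_RANGE[difficulty];
  const live = randomInt(min, max, rng);
  const rounds: Round[] = [];
  for (let i = 0; i < CHAMBERS; i++) {
    rounds.push(i < live ? "live" : "blank");
  }
  return shuffle(rounds, rng);
}
```

Choosing the count first and shuffling second keeps the number of live rounds independent of where those rounds sit in the cylinder.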

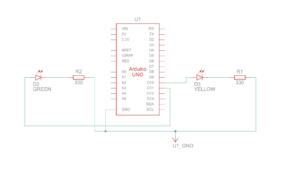

Each player will have 2 buttons: one to shoot themselves and one to shoot the other player. When a player shoots themselves, the round is either live, and they get shocked, or a blank. If it's live, they lose; if it's a blank, they get another turn. Because a blank has been used up, the live rounds now make up a larger share of what is left, so the next round is more likely to be live and shooting the other player is more likely to win. Each player has one life. Basically, it's a game of chance.
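The turn rules can be sketched as a small state update. This is a hypothetical TypeScript illustration with made-up names (`pull`, `GameState`, `liveChance`). The post does not say what happens when a player shoots the other player and the round is a blank; the sketch assumes the turn then passes to the other player.

```typescript
// Hypothetical sketch of resolving one button press.
type Round = "live" | "blank";
type Player = "A" | "B";
type Action = "self" | "other";

interface GameState {
  rounds: Round[];      // rounds still in the cylinder, next one first
  turn: Player;         // whose turn it is
  loser: Player | null; // set once someone takes a live round
}

function opponent(p: Player): Player {
  return p === "A" ? "B" : "A";
}

// Chance that the next round is live, given what is left in the cylinder.
function liveChance(rounds: Round[]): number {
  if (rounds.length === 0) return 0;
  return rounds.filter((r) => r === "live").length / rounds.length;
}

// Returns a new state; the input state is not modified.
function pull(state: GameState, action: Action): GameState {
  if (state.loser !== null || state.rounds.length === 0) return state;
  const [next, ...rest] = state.rounds;
  const target = action === "self" ? state.turn : opponent(state.turn);
  if (next === "live") {
    // The targeted player is shocked and loses their only life.
    return { rounds: rest, turn: state.turn, loser: target };
  }
  // Blank: shooting yourself keeps the turn (from the post);
  // shooting the other player passes it (assumption).
  const turn = action === "self" ? state.turn : opponent(state.turn);
  return { rounds: rest, turn, loser: null };
}
```

For example, on Standard (1 live round in 6), a blank on the first pull raises the chance that the next round is live from 1/6 to 1/5, which is why a second turn favors shooting the other player.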

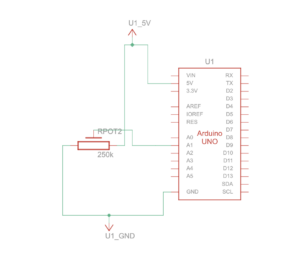

Before the players start shocking each other, they will be greeted with a start page. Using a potentiometer (or some other kind of knob) together with a button, the user can select options displayed in the p5js sketch, such as the difficulty and whether to retry.
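One way the start page could turn the knob and button into a menu choice is sketched below. It assumes the potentiometer value reaches p5js as a raw 10-bit reading (0-1023, the range of `analogRead` on an Arduino Uno) and that the button state is sampled once per frame. `knobToIndex`, `ButtonEdge` and `selectDifficulty` are hypothetical names, not part of p5js.

```typescript
// Hypothetical sketch: knob + button menu selection.
const ADC_MAX = 1023; // assumption: 10-bit analog reading, as on an Arduino Uno

const DIFFICULTIES = ["Standard", "Hard", "Harder", "Nearly Impossible", "Masochist"] as const;

// Split the knob's travel into equal slices, one per menu option.
function knobToIndex(raw: number, optionCount: number): number {
  const clamped = Math.min(Math.max(raw, 0), ADC_MAX);
  const index = Math.floor((clamped / (ADC_MAX + 1)) * optionCount);
  return Math.min(index, optionCount - 1);
}

// Reports true only on the frame the button goes from up to down,
// so holding the button selects once instead of every frame.
class ButtonEdge {
  private wasDown = false;

  update(isDown: boolean): boolean {
    const pressed = isDown && !this.wasDown;
    this.wasDown = isDown;
    return pressed;
  }
}

// Walk through per-frame samples and return the difficulty highlighted
// when the button is first pressed, or null if it never is.
function selectDifficulty(samples: Array<{ knob: number; button: boolean }>): string | null {
  const edge = new ButtonEdge();
  for (const s of samples) {
    if (edge.update(s.button)) {
      return DIFFICULTIES[knobToIndex(s.knob, DIFFICULTIES.length)];
    }
  }
  return null;
}
```

Detecting the press edge rather than the held state keeps one long press from skipping through several menus at once.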

There will also be some kind of lights in the middle of the table that flash in a certain pattern. At the beginning they will display the number of live and blank rounds by color (red = live, white = blank). Then, depending on the difficulty, the display will either disappear completely or show how many rounds remain. It will never show how many live or blank rounds are left; at most, it shows the total number of rounds remaining.
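The light behavior could be driven by two small functions: one for the reveal at the start and one for the rest of the game. The post does not say which difficulties hide the count, so `HIDES_COUNT` below is a hypothetical choice, as is the neutral `amber` color for remaining rounds (picked so it cannot be mistaken for a white blank). Grouping the reds first means the reveal shows the counts without leaking the chamber order.

```typescript
// Hypothetical sketch of what the six table lights show.
type Difficulty = "Standard" | "Hard" | "Harder" | "Nearly Impossible" | "Masochist";
type Round = "live" | "blank";
type Light = "red" | "white" | "amber" | "off";

const LIGHTS = 6;

// Assumption: the two hardest levels turn the display off after the reveal.
const HIDES_COUNT: Record<Difficulty, boolean> = {
  "Standard": false,
  "Hard": false,
  "Harder": false,
  "Nearly Impossible": true,
  "Masochist": true,
};

// Start of a game: one red light per live round, one white per blank.
// Reds come first regardless of chamber order, so only counts are revealed.
function revealFrame(rounds: Round[]): Light[] {
  const live = rounds.filter((r) => r === "live").length;
  const frame: Light[] = [];
  for (let i = 0; i < LIGHTS; i++) {
    if (i < live) frame.push("red");
    else if (i < rounds.length) frame.push("white");
    else frame.push("off");
  }
  return frame;
}

// During play: either everything off, or one neutral light per remaining
// round, never a live/blank split.
function playFrame(remaining: number, difficulty: Difficulty): Light[] {
  const frame: Light[] = [];
  for (let i = 0; i < LIGHTS; i++) {
    frame.push(!HIDES_COUNT[difficulty] && i < remaining ? "amber" : "off");
  }
  return frame;
}
```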

After one of the players loses, the play screen will show who won and who lost, then ask the players whether they want to play again. During the game, the screen will also display the current difficulty, so that onlookers can see it and the players can check it if they forget.
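The screen flow (start page, play screen with the difficulty shown, then a win/lose screen that asks about playing again) fits a small state machine. This is a hypothetical TypeScript sketch: the state and event names are made up for illustration, and having "quit" return to the start page is an assumption.

```typescript
// Hypothetical sketch of the p5js screen flow as a state machine.
type Difficulty = "Standard" | "Hard" | "Harder" | "Nearly Impossible" | "Masochist";
type Player = "A" | "B";

type GameScreen =
  | { kind: "start" }
  | { kind: "playing"; difficulty: Difficulty }
  | { kind: "gameOver"; difficulty: Difficulty; winner: Player; loser: Player };

type ScreenEvent =
  | { type: "startGame"; difficulty: Difficulty }
  | { type: "playerLost"; loser: Player }
  | { type: "playAgain" }
  | { type: "quit" };

// Events that do not apply to the current screen are ignored.
function nextScreen(screen: GameScreen, event: ScreenEvent): GameScreen {
  switch (screen.kind) {
    case "start":
      return event.type === "startGame" ? { kind: "playing", difficulty: event.difficulty } : screen;
    case "playing":
      if (event.type === "playerLost") {
        const winner: Player = event.loser === "A" ? "B" : "A";
        return { kind: "gameOver", difficulty: screen.difficulty, winner, loser: event.loser };
      }
      return screen;
    case "gameOver":
      // Play again keeps the same difficulty; quit goes back to the menu.
      if (event.type === "playAgain") return { kind: "playing", difficulty: screen.difficulty };
      if (event.type === "quit") return { kind: "start" };
      return screen;
  }
}

// Text drawn for each screen; the difficulty stays visible during play.
function screenLabel(screen: GameScreen): string {
  switch (screen.kind) {
    case "start":
      return "Choose a difficulty";
    case "playing":
      return `Difficulty: ${screen.difficulty}`;
    case "gameOver":
      return `Player ${screen.winner} wins, Player ${screen.loser} loses. Play again?`;
  }
}
```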