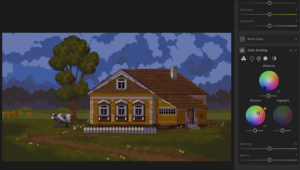

Sketch

This sketch works in fullscreen. Here is the link: https://editor.p5js.org/alexnajm/full/D8FoFUtc6

Concept and Inspiration

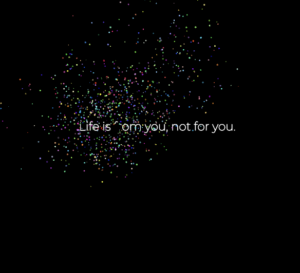

For my midterm, I decided to create an audio-reactive artwork. In other words, I wanted the visuals to be controlled by preloaded sounds.

Essentially, I created a playlist of 12 songs. I uploaded each song together with its cover image, title, and artist. The user can switch between songs with the left and right arrow keys, and the visuals in the background change depending on which song is playing.

Here are the songs I picked:

- Heart-Shaped Box – Nirvana

- Cool Colorado – La Femme

- Weak For Your Love – Thee Sacred Souls

- Spooky – Dusty Springfield

- Karma Police – Radiohead

- Buddy’s Rendezvous – Lana Del Rey

- Althea – Grateful Dead

- Naive – The Kooks

- Drink Before The War – Sinead O’Connor

- Right Down The Line – Sam Evian

- She – The Blaze

- Belong In The Sun – ¿Téo?

My inspiration comes from the feelings I get when I listen to music. Sometimes, I just lie in bed, close my eyes, and start feeling the energy of the music. So I wanted to create an artwork that matches visuals to music, with the visuals controlled by the sound.

How it Works, and Code Snippets

1) The sketch begins with a start page. It has brief instructions. The user must click to start.

```javascript
function displayStartPage() {
  background(0);
  image(star2, -150, 200, 620, 620);
  image(star3, 800, 200, 520, 520);
  fill(255);
  textSize(32);
  textAlign(CENTER, CENTER);
  textFont(chosenFont);
  text(
    "Switch between songs\nwith the left and right arrows\n\n\n\nClick anywhere to start vibing!",
    width / 2,
    height / 2
  );
}
```

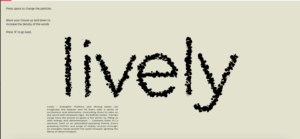

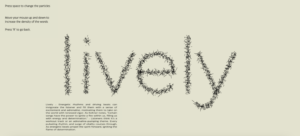

2) Once on the main page, the first song starts playing with the corresponding image, text, and visuals.

```javascript
function mousePressed() {
  if (currentState === "startPage") {
    currentState = "mainPage";
    song1.play();
  }
}

function displayMainPage() {
  background(0);

  // color palette array, one color per track
  let colorPalette = [
    color(112, 2, 2),
    color(2, 34, 152),
    color(228, 121, 155),
    color(203, 172, 53),
    color(162, 227, 232),
    color(255),
    color(146, 111, 55),
    color(191, 66, 38),
    color(84, 45, 151),
    color(178, 157, 202),
    color(39, 100, 151),
    color(76, 128, 93),
  ];

  // the current color is the palette entry at the current track index
  let currentColor = colorPalette[displayedImages.currentIndex];
  stroke(currentColor);
  fill(255, 0.5);

  // get the amplitude level of the playing song and map it to a range used by the shape
  let volume = amplitude.getLevel();
  let heightMultiplier = map(volume, 0, 1, -2, height * 1.5);

  // melting lines in the background
  let lineSpacing = 3; // distance between each line
  let noiseScale = 0.005; // smoothness of the noise

  // outer loop: draws the parallel lines with a spacing of 3
  for (let y = 0; y < height; y += lineSpacing) {
    beginShape();

    // inner loop: iterates over the points along one horizontal line
    for (let x = 0; x <= width; x += 120) {
      // Perlin noise value for each vertex point: the x-coordinate is offset by
      // the frame count and scaled by noiseScale, the y-coordinate is only scaled
      let noiseVal = noise((x + frameCount) * noiseScale, y * noiseScale);

      // map the noise value (between 0 and 1) to a wider range set by
      // heightMultiplier, so that the amplitude of the song controls the movement
      let meltingEffect = map(noiseVal, 0, 1, -heightMultiplier / 2, heightMultiplier / 2);

      // add a vertex offset both horizontally and vertically by the melting effect
      curveVertex(x + meltingEffect * 1.2, y + meltingEffect * 1.2);
    }

    endShape();
  }

  // display images
  displayedImages.display();
  amplitude.setInput(displayedImages.songs[displayedImages.currentIndex]);
}
```

I had a lot of fun creating this part, as I got to play around with the visuals and the amplitude. It took some time, but I think it was worth it.

3) The user can move to the next song by pressing the right arrow key, or to the previous song by pressing the left arrow key.

```javascript
// keyPressed function to allow the user to change between images
function keyPressed() {
  if (currentState === "mainPage") {
    if (keyCode === RIGHT_ARROW) {
      displayedImages.nextImage();
    } else if (keyCode === LEFT_ARROW) {
      displayedImages.previousImage();
    }
  }
}
```

4) There are 12 songs, and the playlist wraps around. If the user is at the last song and presses the right arrow key, it goes back to the first song. If the user is at the first song and presses the left arrow key, it jumps to the twelfth song.
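
The wrap-around comes down to modular arithmetic on the current index. Here is a minimal sketch of the idea, using a hypothetical helper called `wrapIndex` (it is not part of my sketch, which does the same arithmetic inline) and assuming a playlist of 12 tracks:

```javascript
// Hypothetical helper: move `step` positions through a playlist of `length`
// tracks, wrapping around at both ends.
function wrapIndex(index, step, length) {
  // Adding `length` before the second modulo keeps the result non-negative,
  // because JavaScript's % operator can return negative values.
  return (((index + step) % length) + length) % length;
}

const TRACK_COUNT = 12; // the size of the playlist described above

console.log(wrapIndex(11, 1, TRACK_COUNT)); // last track + right arrow -> 0
console.log(wrapIndex(0, -1, TRACK_COUNT)); // first track + left arrow -> 11
```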

5) There is a “Track 1” button. When it is pressed, it takes the user back to the first song, no matter which song is currently playing. This is the “reset” button.

```javascript
  // creating a button to return to track 1
  song1Button = createButton("Track 1");
  song1Button.position(1300, 20);
  // CSS color names are used here: the bare strings "0" and "255" are not valid CSS colors
  song1Button.style("background-color", "black");
  song1Button.style("border", "none");
  song1Button.style("font-size", "20px");
  song1Button.style("color", "white");
  song1Button.mousePressed(index1);

  amplitude = new p5.Amplitude();
  frameRate(20);
} // end of the enclosing function (this excerpt starts partway through it)

// index1 is the callback attached to the button: it resets to the first image, text, and sound in the arrays
function index1() {
  if (currentState === "mainPage") {
    displayedImages.currentIndex = 0;
    displayedImages.updateImage();
    displayedImages.stopSongs();
    displayedImages.playCurrentSong();
    amplitude.setInput(displayedImages.songs[displayedImages.currentIndex]);
  }
}
```

Other than these functions, I am really proud of the visuals: the amplitude-driven lines drawn in the displayMainPage function shown in step 2 above.

I used ChatGPT to help me get the “melting” effect, which ultimately turned into a more general dynamic effect. I used noise to create the movement, and then mapped it to a range set by the height multiplier (which is derived from the amplitude), so that the movement is specific to the song currently playing.
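
To make the mapping more concrete, here is a small worked example in plain JavaScript. It is only an illustration: `linearMap` is a stand-in I wrote for p5's `map()` (without clamping), `meltingOffset` is a hypothetical helper, and the canvas height of 600 and the volume of 0.2 are made-up values.

```javascript
// Stand-in for p5's map(): linearly rescale `value`
// from [inMin, inMax] to [outMin, outMax].
function linearMap(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Made-up values for illustration only.
const canvasHeight = 600;
const volume = 0.2; // an amplitude reading, typically between 0 and 1

// Same formula as heightMultiplier in displayMainPage: about 178.4 here.
const heightMultiplier = linearMap(volume, 0, 1, -2, canvasHeight * 1.5);

// Offset applied to a vertex for a given noise value (0 to 1),
// including the 1.2 factor used in curveVertex().
function meltingOffset(noiseVal) {
  const effect = linearMap(noiseVal, 0, 1, -heightMultiplier / 2, heightMultiplier / 2);
  return effect * 1.2;
}

console.log(heightMultiplier);
console.log(meltingOffset(0), meltingOffset(0.5), meltingOffset(1));
```

With these numbers each vertex can shift by roughly 107 pixels in either direction. A quieter frame gives a smaller `heightMultiplier`, so the lines move less.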

Another part I am proud of is the set of functions inside my DisplayedImages class, which let the user switch between songs (and, at the same time, between images and texts):

```javascript
  // function to stop all songs, so the previous one stops when going to the next one
  stopSongs() {
    this.songs.forEach((song) => song.stop());
  }

  // function to play the current song
  playCurrentSong() {
    this.songs[this.currentIndex].play();
    // this allows us to get the amplitude for the song that is currently playing
    amplitude.setInput(this.songs[this.currentIndex]);
  }

  // function to update image and text to the current index;
  // it was needed for the button I created, otherwise they wouldn't change
  updateImage() {
    this.currentImage = this.images[this.currentIndex];
    this.currentText = this.texts[this.currentIndex];
  }

  // set what the next image, text, and song will be;
  // also stops the current song and plays the next one
  nextImage() {
    this.songs[this.currentIndex].stop();
    this.currentIndex = (this.currentIndex + 1) % this.images.length;
    this.updateImage(); // sets currentImage and currentText for the new index
    this.songs[this.currentIndex].play();
  }

  // set what the previous image, text, and song will be;
  // also stops the current song and plays the previous one
  previousImage() {
    this.songs[this.currentIndex].stop();
    this.currentIndex =
      (this.currentIndex - 1 + this.images.length) % this.images.length;
    this.updateImage(); // sets currentImage and currentText for the new index
    this.songs[this.currentIndex].play();
  }
} // end of the DisplayedImages class
```

Problems and Potential Improvement

The main problem I ran into was uploading the music. For some reason, when I uploaded the files, the sketch would be stuck on loading forever (and never actually load). I tried everything I could for a good three hours, and nothing worked. Eventually, I tried redownloading all the files from scratch, and through some weird manipulation it worked, thankfully. I am just sad that I lost so much time on this when I could have added more things instead.

Another problem I ran into was that once the “Track 1” button was pressed, the amplitude was no longer being read, resulting in almost no movement in the visuals (and this was the case for every song playing). I ended up having to set the amplitude input inside the class as well, in the playCurrentSong function.

As for improvement, I would have liked to extract the pixels from the images and have them create a gradient for the shape. I tried, but couldn’t really figure it out, so I just opted for one color per song.
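
One possible starting point, which is not what the sketch currently does, would be to average the colors of the top and bottom halves of each cover and use them as the two ends of a gradient. The sketch below is plain JavaScript and assumes the flat RGBA layout that p5 uses for `img.pixels` after `img.loadPixels()`. The helper `averageColor` and the tiny hand-made pixel array are hypothetical.

```javascript
// Average the color of a flat RGBA array: [r, g, b, a, r, g, b, a, ...].
// The alpha channel is ignored.
function averageColor(pixels) {
  let r = 0;
  let g = 0;
  let b = 0;
  let count = 0;
  for (let i = 0; i + 3 < pixels.length; i += 4) {
    r += pixels[i];
    g += pixels[i + 1];
    b += pixels[i + 2];
    count++;
  }
  if (count === 0) return [0, 0, 0]; // empty input: fall back to black
  return [Math.round(r / count), Math.round(g / count), Math.round(b / count)];
}

// Hand-made "image", 1 pixel wide and 2 pixels tall: red on top, blue below.
const fakePixels = [255, 0, 0, 255, 0, 0, 255, 255];

const topColor = averageColor(fakePixels.slice(0, 4)); // [255, 0, 0]
const bottomColor = averageColor(fakePixels.slice(4)); // [0, 0, 255]
console.log(topColor, bottomColor);
console.log(averageColor(fakePixels)); // [128, 0, 128]
```

In the actual sketch, the two results could then be blended with p5's `lerpColor()` based on the y position of each line.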

Something else I would like to add eventually is a pause button so that the user can pause/play the song currently playing.
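
A rough idea of how that toggle could work is sketched below. It assumes the song object exposes `isPlaying()`, `pause()`, and `play()`, as p5.SoundFile does. The function `togglePlayback` and the stand-in object from `makeFakeSong` are hypothetical and only there so the example runs outside p5.

```javascript
// Toggle a song between playing and paused, and report the new state.
function togglePlayback(song) {
  if (song.isPlaying()) {
    song.pause();
    return "paused";
  }
  song.play();
  return "playing";
}

// Stand-in for a p5.SoundFile so the example runs on its own.
function makeFakeSong() {
  let playing = false;
  return {
    isPlaying: () => playing,
    play: () => {
      playing = true;
    },
    pause: () => {
      playing = false;
    },
  };
}

const demoSong = makeFakeSong();
console.log(togglePlayback(demoSong)); // "playing"
console.log(togglePlayback(demoSong)); // "paused"
```

In the sketch itself, this could be attached to a second button in the same way the “Track 1” button is attached to index1, passing in the song at the current index.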

Finally, I would also like to add a function so that when one song ends it automatically moves on to the next. Currently, when one song ends, it just ends. It doesn’t start over nor does it go to the next song, so no music plays. I think changing that could make the process smoother!
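
Here is one way this might look, as a sketch rather than a tested addition to the project. It checks once per frame whether the current song is neither playing nor paused, and if so moves on. It assumes the song objects expose `isPlaying()` and `isPaused()`, as p5.SoundFile does, and that the sketch never deliberately leaves the current song stopped. The names `hasEnded`, `autoAdvance`, `makeStubSong`, and `demoPlaylist` are hypothetical.

```javascript
// A song counts as finished when it is neither playing nor paused.
// A paused song is deliberately not treated as finished.
function hasEnded(song) {
  return !song.isPlaying() && !song.isPaused();
}

// Intended to be called once per frame, for example from draw().
// `playlist` needs `songs`, `currentIndex`, and `nextImage()`,
// like the DisplayedImages class in this post.
function autoAdvance(playlist) {
  if (hasEnded(playlist.songs[playlist.currentIndex])) {
    playlist.nextImage();
    return true;
  }
  return false;
}

// Stand-in for a p5.SoundFile so the example runs on its own.
function makeStubSong() {
  let state = "stopped";
  return {
    play: () => {
      state = "playing";
    },
    stop: () => {
      state = "stopped";
    },
    finish: () => {
      state = "stopped"; // simulates the track reaching its end
    },
    isPlaying: () => state === "playing",
    isPaused: () => state === "paused",
  };
}

// Minimal stand-in for the playlist object, with three stub songs.
const demoPlaylist = {
  songs: [makeStubSong(), makeStubSong(), makeStubSong()],
  currentIndex: 0,
  nextImage() {
    this.songs[this.currentIndex].stop();
    this.currentIndex = (this.currentIndex + 1) % this.songs.length;
    this.songs[this.currentIndex].play();
  },
};

demoPlaylist.songs[0].play();
console.log(autoAdvance(demoPlaylist)); // false: the first song is still playing
demoPlaylist.songs[0].finish();
console.log(autoAdvance(demoPlaylist), demoPlaylist.currentIndex); // true 1
```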

Conclusions

Overall, I really enjoyed creating this. It definitely wasn’t easy as there were a lot of new functions as well as elements we didn’t even talk about in class. When the sound wasn’t working it made me anxious and I honestly thought I would never finish this project. But I’m glad I figured it out, and I really did have fun making it and learning new things along the way. While there is a lot of room for improvement, I am quite proud of the way it looks!