Concept:

Code that I am proud of:

One aspect I’m particularly proud of is the implementation of object-oriented programming (OOP) principles. By structuring the code into classes like soundBoard and bubbleArt, I aimed to encapsulate related functionality and data, fostering code modularity and reusability. This approach not only enhances the readability of the code but also facilitates easier maintenance and future expansion of the project.

Class for sound Board – The ‘soundBoard’ class defines sound buttons with properties like index, position, and dimensions. Each button instance maintains an association between its index and a corresponding sound object. The ‘boxClicked’ method detects mouse clicks within button boundaries, facilitating user interaction. This class encapsulates functionality for managing interactive sound buttons, enhancing user experience through intuitive audio control.

// Class for sound button

class soundBoard {

constructor(index, xstart, ystart, boxWidth, boxHeight) {

this.index = index;

this.xPos = xstart;

this.yPos = ystart;

this.boxWidth = boxWidth;

this.boxHeight = boxHeight;

this.soundIndex = this.index;

this.sound = "";

}

// Check if the button is clicked

boxClicked(mouseXPos, mouseYPos) {

if (

mouseXPos >= this.xPos &&

mouseXPos <= this.xPos + this.boxWidth &&

mouseYPos >= this.yPos &&

mouseYPos <= this.yPos + this.boxHeight

) {

return this.index;

} else {

return -1;

}

}

}
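
As a usage sketch, a click handler can loop over the buttons and ask each one whether it was hit. The `findClickedIndex` helper and the `makeButton` stand-in below are hypothetical names, not part of the original sketch; in the real project the array would hold ‘soundBoard’ instances and the coordinates would presumably come from p5.js's `mouseX` and `mouseY`.

```javascript
// Stand-in for a soundBoard instance (hypothetical; mirrors the boxClicked logic above).
function makeButton(index, xPos, yPos, boxWidth, boxHeight) {
  return {
    index,
    boxClicked(mx, my) {
      const inside =
        mx >= xPos && mx <= xPos + boxWidth &&
        my >= yPos && my <= yPos + boxHeight;
      return inside ? index : -1;
    },
  };
}

// Return the index of the first button that contains the point, or -1 if none does.
function findClickedIndex(buttons, mx, my) {
  for (const button of buttons) {
    const hit = button.boxClicked(mx, my);
    if (hit !== -1) return hit;
  }
  return -1;
}

// Two example buttons side by side (example values only).
const buttons = [makeButton(0, 0, 0, 100, 50), makeButton(1, 100, 0, 100, 50)];
console.log(findClickedIndex(buttons, 150, 25)); // 1
console.log(findClickedIndex(buttons, 150, 80)); // -1
```

Because the comparisons are inclusive, a click exactly on a shared edge matches both neighbours; this helper simply returns the first match.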

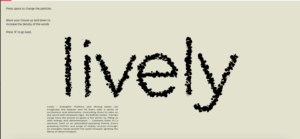

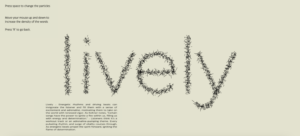

Class for bubbleArt – I made a ‘bubbleArt’ class to facilitate the creation of bubble text with customizable parameters such as word, position, font size, and style. The ‘wordToBubble’ method converts the text into a series of points, enabling the creation of bubble-shaped characters. Using the ‘brush’ method, individual bubbles or strokes are drawn based on the chosen mode (bubbles or strokes). The ‘moveBubbles’ method adjusts the position of the bubbles based on mouse input, allowing for dynamic interaction with the text. Overall, the class encapsulates functionality for generating visually appealing and interactive bubble text elements within the application.

// Class for creating bubble text

class bubbleArt {

constructor(

word,

xPos,

yPos,

fontsize,

sampleFactor,

sizeW,

sizeH,

mode,

bubbleMode

) {

this.word = word;

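this.xPos = xPos; // Named xPos/yPos to match wordToBubble() and boundBox()

this.yPos = yPos;

this.fontSize = fontsize;

this.sampleFactor = sampleFactor; // camelCase to match wordToBubble() and moveBubbles()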

this.sizeW = sizeW;

this.sizeH = sizeH;

this.mode = mode;

this.bubble = bubbleMode;

}

// Convert word to bubble text

wordToBubble() {

let points;

points = font.textToPoints(this.word, this.xPos, this.yPos, this.fontSize, {

sampleFactor: this.sampleFactor,

simplifyThreshold: 0,

});

return points;

}

// Get bounding box for text

boundBox() {

return font.textBounds(this.word, this.xPos, this.yPos, this.fontSize);

}

// Draw bubble text

drawPoints() {

let points;

points = this.wordToBubble();

if (points) {

for (let i = 0; i < points.length; i++) {

this.brush(points[i].x * this.sizeW, points[i].y * this.sizeH);

}

}

}

// Draw individual bubbles or strokes

brush(x, y) {

for (let i = 0; i < 1; i++) {

let posX = randomGaussian(0, 5);

let posY = randomGaussian(0, 5);

if (fontMode == "bubbles") {

// Drawing bubbles

let size = randomGaussian(5, 5);

ellipse(x + posX, y + posY, size, size);

} else {

// Drawing lines

let angle = random(TWO_PI);

let lineLength = randomGaussian(5, 5);

let endX = cos(angle) * lineLength + x + posX;

let endY = sin(angle) * lineLength + y + posY;

line(x + posX, y + posY, endX, endY);

}

}

}

// Move bubbles based on mouse position

moveBubbles() {

let bounds = this.boundBox();

let adjustedSampleFactor = map(mouseY, 0, windowHeight, 0.1, 3); // Adjusting sampleFactor based on mouseY position

translate(

-bounds.x * this.sizeW - (bounds.w / 2) * this.sizeW + windowWidth / 2,

-bounds.y * this.sizeH + 50 + windowHeight / 5

);

translateWidth = -(

-bounds.x * this.sizeW -

(bounds.w / 2) * this.sizeW +

windowWidth / 2

);

translateHeight = -(-bounds.y * this.sizeH + 50 + windowHeight / 5);

this.sampleFactor = adjustedSampleFactor; // Update sampleFactor

this.drawPoints();

}

}
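
The arithmetic inside ‘moveBubbles’ can be read as two small calculations: a linear re-mapping of the mouse position to a sample factor, and an offset that centres the text horizontally and places its top edge at a fixed height. The sketch below restates both as plain functions so the numbers can be checked without a canvas. `mapRange` and `centeringOffset` are hypothetical names, and `mapRange` is only assumed to behave like p5.js's `map()` without clamping.

```javascript
// Linear re-mapping from [inMin, inMax] to [outMin, outMax] (no clamping).
function mapRange(value, inMin, inMax, outMin, outMax) {
  return outMin + ((value - inMin) / (inMax - inMin)) * (outMax - outMin);
}

// Offset that moves the scaled text's horizontal centre to the canvas centre
// and its top edge to 50 + canvasH / 5, as in moveBubbles above.
function centeringOffset(bounds, sizeW, sizeH, canvasW, canvasH) {
  return {
    x: canvasW / 2 - (bounds.x + bounds.w / 2) * sizeW,
    y: 50 + canvasH / 5 - bounds.y * sizeH,
  };
}

// Example values only: a 200 x 40 bounding box on an 800 x 600 canvas.
const bounds = { x: 10, y: 20, w: 200, h: 40 };
const offset = centeringOffset(bounds, 1, 1, 800, 600);
console.log(offset); // { x: 290, y: 150 }

// Mouse halfway down the canvas gives a sample factor near the middle of 0.1..3.
console.log(mapRange(300, 0, 600, 0.1, 3));
```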

Another highlight of the project is the integration of multimedia elements. Using preloaded assets and the p5.js library, I incorporated a range of image and sound files into the user experience. This was harder than I expected: I had to keep the files from being too heavy, a constraint I had not been aware of before. I also struggled at first to create the sound objects for each button and associate them with their respective sound files. In the end, I generated a grid layout for the sound buttons with nested loops that iterate over rows and columns. The loops calculate each button’s position from the current row and column, create an instance of the ‘soundBoard’ class, and add it to an array. This approach organized the sound buttons systematically and let me make the connection by assigning each sound file to the ‘sound’ property of its sound object.

let index = 0;

for (let row = 0; row < 3; row++) {

for (let col = 0; col < 4; col++) {

let xstart = col * boxWidth;

let ystart = row * totalHeight;

soundObjects.push(

new soundBoard(index, xstart, ystart, boxWidth, boxHeight)

);

index++;

}

}

soundObjects[0].sound = sound1; // Associate sound object 0 with sound1

soundObjects[1].sound = sound2; // Associate sound object 1 with sound2
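
With twelve buttons, assigning each sound on its own line gets repetitive. One alternative, sketched here under the assumption that the loaded sounds are collected in an array in button order, is to pair them up in a loop. `assignSounds` is a hypothetical helper, and the strings below are placeholders for the loaded sound files.

```javascript
// Pair each sound object with the sound at the same position.
// Stops at the shorter of the two arrays and returns how many were assigned.
function assignSounds(soundObjects, sounds) {
  const count = Math.min(soundObjects.length, sounds.length);
  for (let i = 0; i < count; i++) {
    soundObjects[i].sound = sounds[i];
  }
  return count;
}

// Plain objects standing in for soundBoard instances (example values only).
const soundObjects = [
  { index: 0, sound: "" },
  { index: 1, sound: "" },
  { index: 2, sound: "" },
];
const sounds = ["sound1", "sound2"]; // placeholders for loaded sound files

const assigned = assignSounds(soundObjects, sounds);
console.log(assigned); // 2
console.log(soundObjects[2].sound === ""); // true: no sound was available for it
```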

A key design consideration was the emphasis on user interaction and customization. I addressed it by giving users control over sound playback and visual effects; the whole point was to let them personalize their experience and explore the creative possibilities of the project.

Improvements:

While developing my sketch, I noticed that it lacked responsiveness. I realized that I relied heavily on fixed dimensions tied to ‘windowHeight’ and ‘windowWidth’, which restricts how my visuals adapt to different screen sizes. This oversight should be addressed in future versions to ensure a more adaptable layout. Additionally, I believe there’s room to enhance the interaction with the bubble art and lines. Currently, they serve as visual elements without meaningful interaction. In a previous project, I explored integrating them with sound generation, where mouse movements influenced the density of both sounds and visual elements. Exploring similar interactive possibilities could make the sketch more engaging. Moreover, I’m interested in learning how to integrate text directly with sound, rather than relying on images, which could further enrich the audiovisual side of my project.
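
One possible direction for the responsiveness issue is to derive every button’s size and position from the current canvas size and recompute them whenever that size changes. The sketch below is a plain function that can be checked without a canvas; `gridLayout` is a hypothetical name, and it assumes equally sized cells, which differs from the original sketch’s separate ‘boxHeight’ and ‘totalHeight’. In p5.js, a function like this could be called again from `windowResized()` after `resizeCanvas(windowWidth, windowHeight)`.

```javascript
// Compute a rows x cols grid of cells that fills the given canvas size.
function gridLayout(canvasW, canvasH, rows, cols) {
  const boxWidth = canvasW / cols;
  const boxHeight = canvasH / rows;
  const cells = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      cells.push({
        index: row * cols + col,
        x: col * boxWidth,
        y: row * boxHeight,
        w: boxWidth,
        h: boxHeight,
      });
    }
  }
  return cells;
}

// Same 3 x 4 grid at two canvas sizes (example values only).
const large = gridLayout(800, 600, 3, 4);
const small = gridLayout(400, 300, 3, 4);
console.log(large.length); // 12
console.log(large[5].x, large[5].y); // 200 200
console.log(small[5].x, small[5].y); // 100 100
```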

Problems:

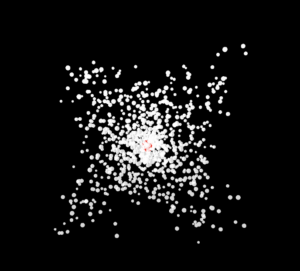

My main challenge was settling on a single idea for my project. Initially, I experimented with various concepts, aiming to recreate interactive art GIFs that inspired me, and I finally settled on a concept after discussing ideas with friends. When I started building the project, I had difficulty applying object-oriented programming (OOP) principles, so I opted to start with a simpler sketch using functions in different files. However, I faced hurdles when attempting to connect sounds with messages as I had envisioned. My original plan revolved around particle motion, which I learned about through tutorials; later, I explored additional references to refine my understanding. Integrating user input functions also proved problematic: errors arose during execution, particularly when transitioning between states such as ‘main Menu’, ‘sections’, ‘input’, and ‘display’.
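
One way to make state transitions like these easier to debug is to list the allowed moves in a single table and reject anything else. This is only a sketch: the state names come from the paragraph above, but which transitions are allowed is an assumption, and `createStateMachine` is a hypothetical helper rather than code from the project.

```javascript
// Allowed transitions between screens (assumed for illustration).
const transitions = {
  "main Menu": ["sections"],
  sections: ["input", "main Menu"],
  input: ["display", "sections"],
  display: ["main Menu"],
};

// Small state holder that only changes state along an allowed transition.
function createStateMachine(initial) {
  let current = initial;
  return {
    get state() {
      return current;
    },
    goTo(next) {
      const allowed = transitions[current] || [];
      if (!allowed.includes(next)) return false; // ignore invalid moves
      current = next;
      return true;
    },
  };
}

const app = createStateMachine("main Menu");
console.log(app.goTo("sections")); // true
console.log(app.goTo("display")); // false: not reachable from 'sections' in this table
console.log(app.state); // sections
```

Keeping the table in one place means an unexpected transition shows up as a rejected call instead of a half-drawn screen.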

Pictures of previous project displays:

References: