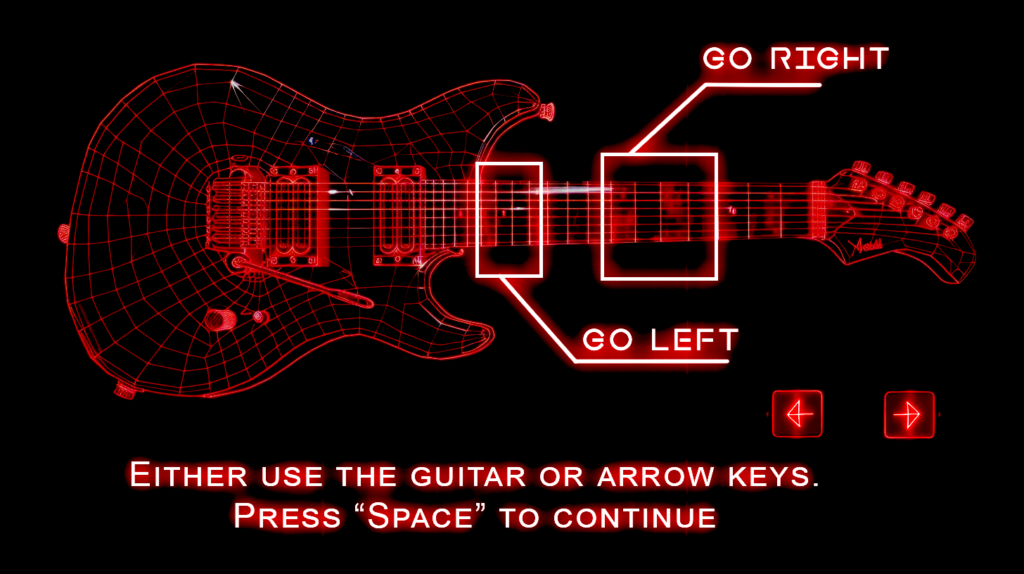

(Instructions: If you have a guitar, you can plug it in and play the game with it. Without a guitar, you can play with the arrow keys. More instructions are inside the game.)

Pi’s P5 Midterm: https://editor.p5js.org/Pi-314159/full/FCZ-y0kOM

If something goes wrong with the link above, you can also watch the full gameplay below.

Overall Concept

The Deal is a highly cinematic cyberpunk narrative I am submitting as my midterm project.

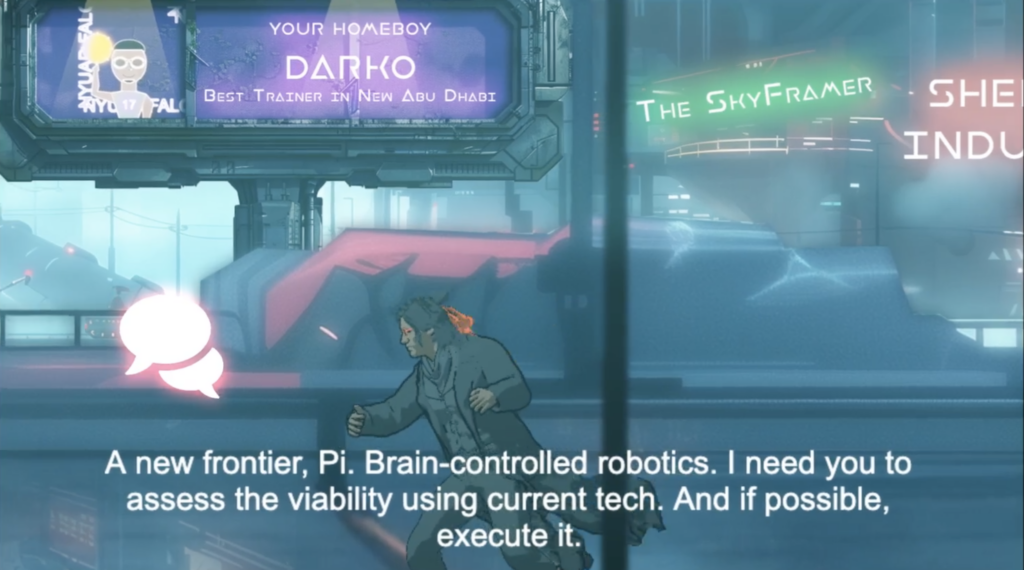

Meet Pi, the devil’s engineer, in a world where technology rules and danger lurks in every shadow. After receiving a mysterious call from a woman offering a lucrative but risky job, he’s plunged into a world of corporate espionage over brain-controlled robots.

The inspiration for this comes from my daily life, where I have to deal with tech clients, and… yes, I actually work on brain-controlled robots in my lab. You record brain waves with electroencephalography (EEG) and feed that signal through a neural network that translates it into robot movements. It’s at a very early stage, but it is moving.

How the project works

The game is meant to be played with a guitar. In fact, it is intended not as a game, but as a storytelling tool for a guitar-playing orator performing to a live audience. Below is a demonstration of the game.

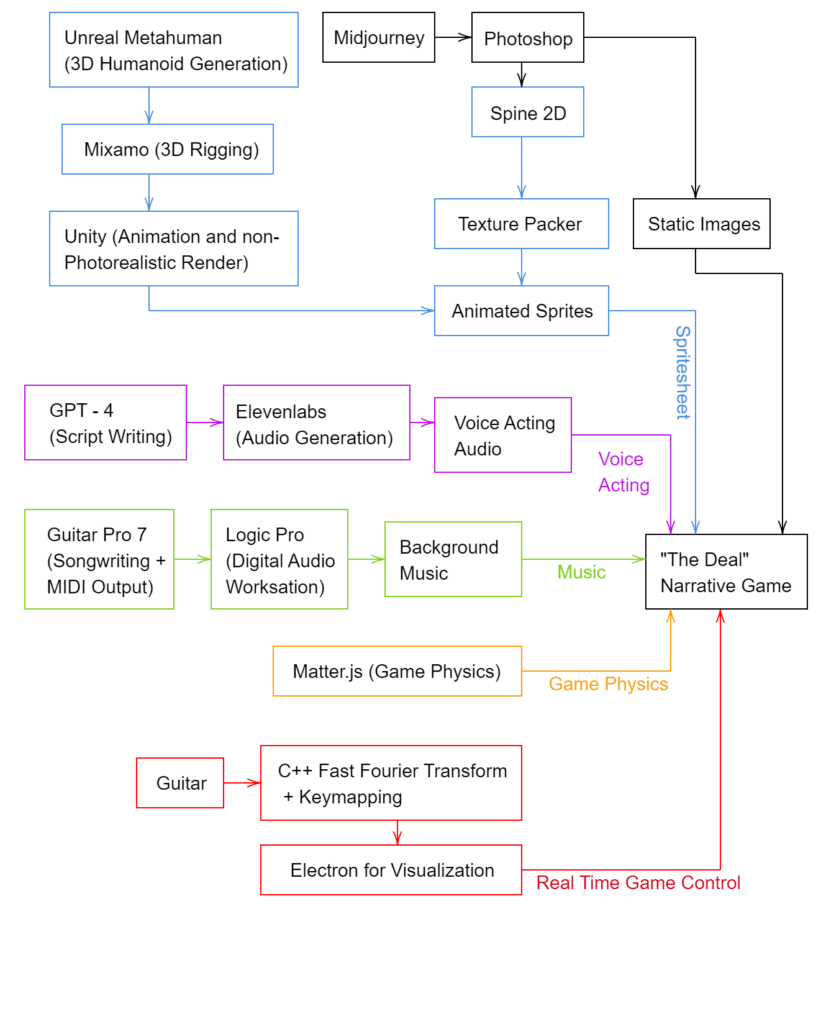

A number of tools and a not-so-complicated workflow were used to create this game in a week. The full workflow is illustrated below.

For a midterm, this project is rather large. I have 16 individual JavaScript modules working together to run the player, cinematics, background music, enemies, rendering and parallax movement. Everything is refactored into classes for optimization and code cleanliness. For example, my Player class begins as follows.

```javascript
class Player {
  constructor(scene, x, y, texture, frame) {
    // Debug mode flag
    this.debugMode = false;
    this.debugText = null; // For storing the debug text object

    this.scene = scene;
    this.sprite = scene.matter.add.sprite(x, y, texture, frame);
    this.sprite.setDepth(100);

    // Ensure sprite is dynamic (not static)
    this.sprite.setStatic(false);

    // Set up animations for the player
    this.setupAnimations();

    // Initially play the idle animation
    this.sprite.anims.play("idle", true);

    // Create keyboard controls
    this.cursors = scene.input.keyboard.createCursorKeys();

    // Player physics properties
    this.sprite.setFixedRotation(); // Prevents player from rotating

    // Player's speed, broadcast by the update method
    this.speed = 0;
    this.isJumpingForward = false; // Flag for the jump_forward state

    // Walking and running
    this.isWalking = false;
    this.walkStartTime = 0;
    this.runThreshold = 1000; // Threshold (ms) for switching from walking to running

    // Debugging - Enable this to see physics bodies
  }

  // Adjust colors: set the tint of the player's sprite
  setTint(color) {
    this.sprite.setTint(color);
  }

  // ... (remaining methods, such as setupAnimations, omitted from this excerpt)
}
```
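The constructor keeps `isWalking`, `walkStartTime` and `runThreshold`, which suggests the player switches from walking to running after walking for a while. Here is a minimal sketch of that check, assuming `runThreshold` is the number of milliseconds of continuous walking before running starts; `movementState` is a hypothetical helper, not code from the project.

```javascript
// Hypothetical helper (not from the project): picks the movement state from
// how long the player has been walking, mirroring the Player fields
// isWalking, walkStartTime and runThreshold (all times in milliseconds).
function movementState(isWalking, walkStartTime, now, runThreshold = 1000) {
  if (!isWalking) return "idle";
  // Once walking has lasted at least runThreshold ms, switch to running
  return now - walkStartTime >= runThreshold ? "run" : "walk";
}

console.log(movementState(false, 0, 500)); // idle
console.log(movementState(true, 0, 500)); // walk
console.log(movementState(true, 0, 1500)); // run
```

In the game, `now` could come from the `time` argument Phaser passes to a scene's `update(time, delta)`.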

What I am proud of

I am super proud that I was able to use a lot of my skills in making this.

For almost all the game resources, including the soundtrack, I either created them myself or generated them with AI, then plugged them into the rest of the workflow for final processing. Here, in the image above, you can see me rigging and animating a robot sprite in Spine2D, where the original 2D art was generated in Midjourney.

Problems I ran into

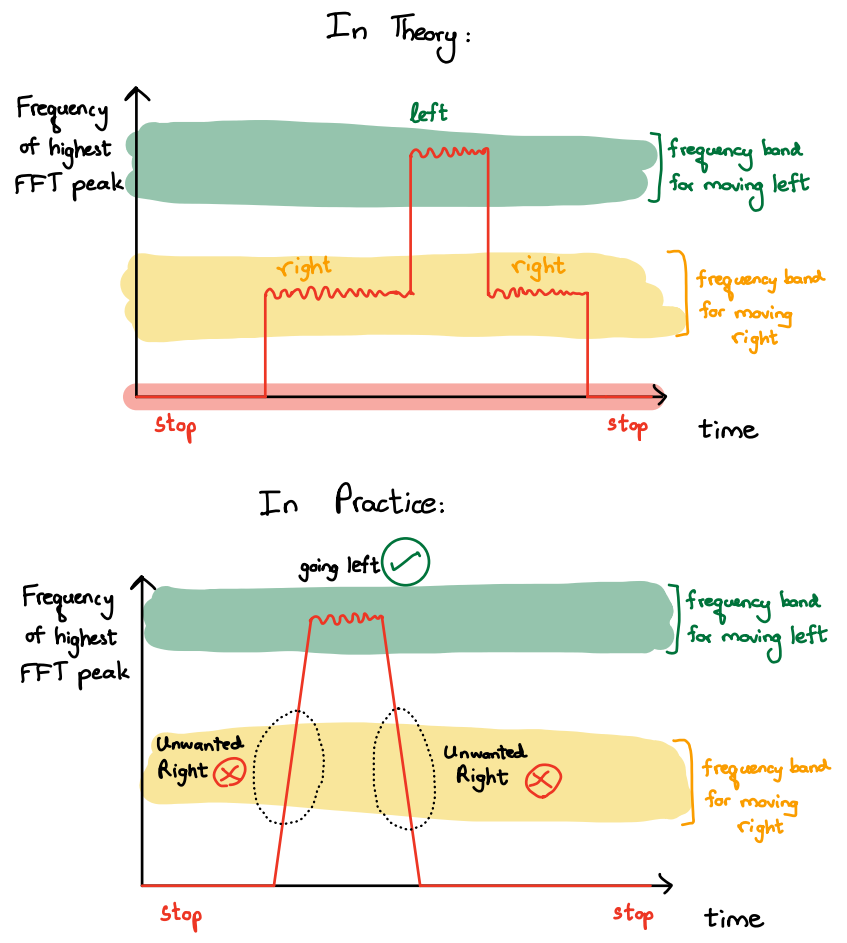

During testing, everything went well. But during the live performance, my character got confused between moving left and moving right: when I played the guitar notes for moving left, it moved right instead. This is because I mapped the FFT (Fast Fourier Transform) signal to keys by frequency band, with one band triggering the left arrow key and another triggering the right arrow key.

In theory, this should work. In practice, as the diagram shows, once the left-key input stops, the signal does not leave its band instantly (the transition is a slope rather than a perfectly vertical drop), so on its way out it passes through the right key's frequency region, causing unintended right key presses.
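The band mapping and the misfire can be reproduced in a few lines. This is a sketch with made-up numbers: the band edges, the per-frame frequency trace and `holdFrames` are assumptions, since the real values are not given. The `makeDebouncedMapper` part is a standard debouncing technique offered as an alternative mitigation, not what the project used: a key is only reported after the dominant frequency has stayed in the same band for several consecutive frames, so a brief sweep through the right-arrow band is ignored.

```javascript
// Hypothetical frequency bands in Hz (the project's real edges are unknown)
const BANDS = [
  { key: "ArrowLeft", min: 80, max: 200 },
  { key: "ArrowRight", min: 200, max: 400 },
];

// Naive mapping: each frame, press whichever key's band holds the peak
function naiveKey(freq) {
  const band = BANDS.find((b) => freq >= b.min && freq < b.max);
  return band ? band.key : null;
}

// Debounced mapping: only report a key once the peak has stayed in the
// same band for holdFrames consecutive frames
function makeDebouncedMapper(holdFrames = 3) {
  let candidate = null;
  let count = 0;
  return (freq) => {
    const key = naiveKey(freq);
    if (key === candidate) {
      count += 1;
    } else {
      candidate = key;
      count = 1;
    }
    return count >= holdFrames ? candidate : null;
  };
}

// A held "left" note, then a short sweep through the right band as it stops
const trace = [150, 150, 150, 150, 250, 320, 0]; // dominant Hz per frame
const debounced = makeDebouncedMapper(3);
console.log(trace.map(naiveKey)); // 4 x ArrowLeft, 2 x ArrowRight (the misfire), null
console.log(trace.map((f) => debounced(f))); // null, null, 2 x ArrowLeft, then null (sweep ignored)
```

The trade-off is latency: every key press is delayed by `holdFrames - 1` frames, and a sweep lasting `holdFrames` frames or longer would still get through.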

I had to resort to the FFT-to-keymapping workflow because my external C++ FFT program cannot directly access the JavaScript runtime. Had the game been implemented as a native game (e.g. using Unity or Godot), I could have sent unique UDP commands directly instead of relying on key-mapping regions, which would resolve the issue.
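To make the UDP alternative concrete, here is a rough sketch of "one message per command" written in Node.js for consistency with the rest of this post. It is only an illustration: the real sender would be the C++ FFT program and the receiver a native engine, and the command names, port and host are placeholder assumptions. The point is that the receiver matches exact command strings, so there is no in-between frequency region that can be misread as the other direction.

```javascript
// Hypothetical command vocabulary: each UDP datagram names one action, so
// there is no "in-between" value that could be read as the wrong key.
const COMMANDS = new Set(["MOVE_LEFT", "MOVE_RIGHT", "STOP", "JUMP"]);

// Turn a command name into the bytes of one UDP message
function encodeCommand(cmd) {
  if (!COMMANDS.has(cmd)) throw new Error(`Unknown command: ${cmd}`);
  return Buffer.from(cmd, "utf8");
}

// Receiver side: accept only exact, known commands and ignore anything else
function decodeCommand(buf) {
  const cmd = buf.toString("utf8").trim();
  return COMMANDS.has(cmd) ? cmd : null;
}

// Sender side: one datagram per command. Port and host are placeholders;
// dgram is loaded here so the helpers above need no network access.
function sendCommand(cmd, port = 9000, host = "127.0.0.1") {
  const dgram = require("dgram");
  const socket = dgram.createSocket("udp4");
  socket.send(encodeCommand(cmd), port, host, () => socket.close());
}

console.log(decodeCommand(encodeCommand("MOVE_LEFT"))); // MOVE_LEFT
console.log(decodeCommand(Buffer.from("MOVE_LEF"))); // null
```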

Rubric Checklist:

- Make an interactive artwork or game using everything you have learned so far (This is an interactive game.)

- Can have one or more users (A single-player game, with the player acting as the storyteller to a crowd.)

- Must include

At least one shape

The “BEGIN STORY” button is drawn as a shape to fulfill this requirement. Everything else is images and sprites.
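A shape-based button like this could look roughly as follows. This sketch assumes the button is built with Phaser 3, since the Player and Cinematic classes use Phaser's scene API; the size, color, position and the `onStart` callback are placeholder assumptions, not the project's actual values.

```javascript
// Sketch assuming Phaser 3: the "BEGIN STORY" button as a rectangle shape
// with a text label on top. onStart is a hypothetical callback from the menu.
function createBeginButton(scene, onStart) {
  const x = scene.scale.width / 2;
  const y = scene.scale.height * 0.75;

  // The shape itself: rectangle(x, y, width, height, fillColor)
  const button = scene.add
    .rectangle(x, y, 260, 70, 0xff0055)
    .setInteractive({ useHandCursor: true });

  // Label drawn on top of the rectangle, centered on the same point
  scene.add
    .text(x, y, "BEGIN STORY", { font: "28px Arial", fill: "#FFFFFF" })
    .setOrigin(0.5, 0.5);

  // Wait for the user's click before starting the story
  button.on("pointerdown", () => onStart());
  return button;
}
```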

At least one image

We have a whole lot of images and Easter eggs. The graphics were generated in Midjourney and edited in Photoshop.

At least one sound

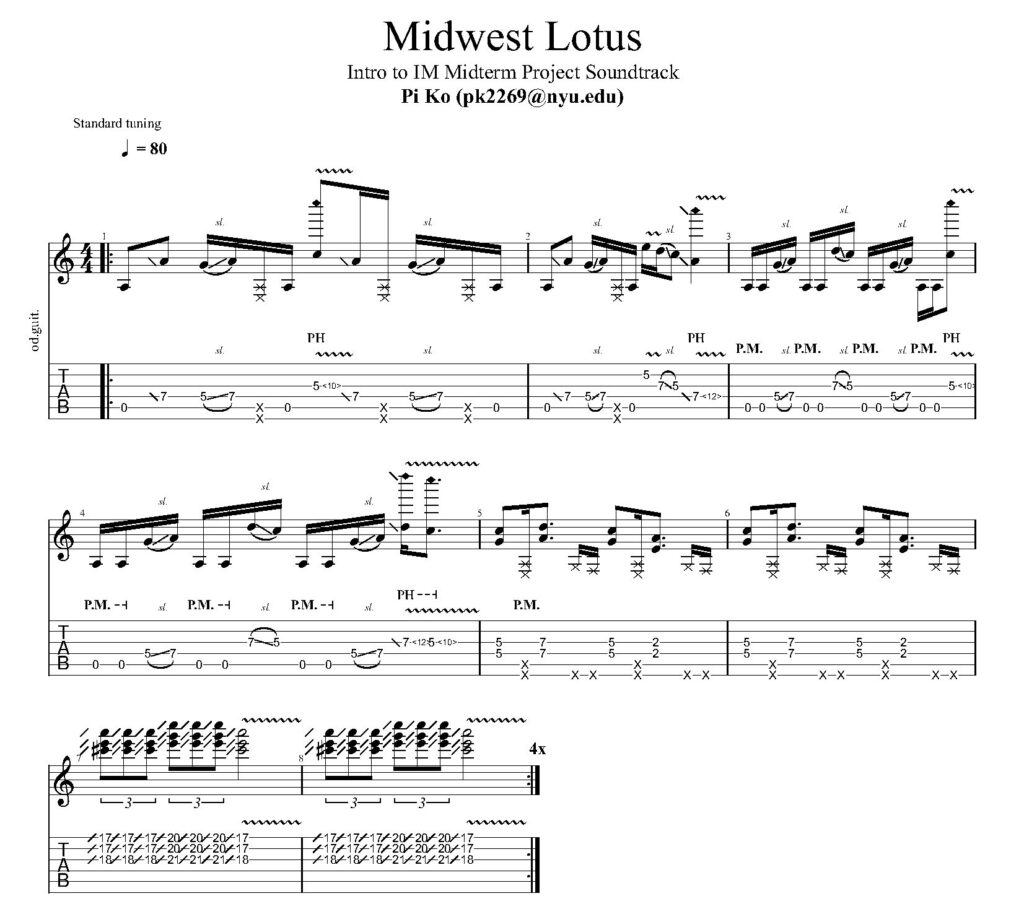

Pi composed the original soundtrack for the game. It is in A minor pentatonic, so it is easy to improvise over. There are also plenty of ambient sounds.

For the prologue monologue, I used this: https://youtu.be/Y8w-2lzM-C4
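Since the guitar is read through an FFT, it helps to know where the scale's notes sit in frequency. The sketch below lists A minor pentatonic (A, C, D, E, G) using the standard 12-tone equal temperament formula f = 440 · 2^((m − 69) / 12), where m is the MIDI note number and A4 = 440 Hz. Which octave the player improvises in is an assumption; A3 is used here.

```javascript
// Equal-temperament frequency of a MIDI note number (A4 = MIDI 69 = 440 Hz)
function midiToFreq(midi) {
  return 440 * Math.pow(2, (midi - 69) / 12);
}

// Minor pentatonic intervals in semitones above the root: 1, b3, 4, 5, b7
const MINOR_PENTATONIC = [0, 3, 5, 7, 10];
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Note names (with octave) and frequencies rounded to 0.01 Hz
function minorPentatonic(rootMidi) {
  return MINOR_PENTATONIC.map((step) => {
    const midi = rootMidi + step;
    const name = NOTE_NAMES[midi % 12] + (Math.floor(midi / 12) - 1);
    return { name, freq: Number(midiToFreq(midi).toFixed(2)) };
  });
}

// A3 is MIDI note 57
console.log(minorPentatonic(57));
```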

At least one on-screen text

We have voice acting and subtitles.

Object Oriented Programming

Everything is a class. We have 18 classes in total handling many different things, from Cinematics to Data Preloader to Background Music Manager to Parallax Background Management. Below is the Cinematic implementation.

```javascript
class Cinematic {
  constructor(scene, cinematicsData, player) {
    this.scene = scene;
    this.cinematicsData = cinematicsData;
    this.player = player; // Store the player reference
    this.currentCinematicIndex = 0;
    this.subtitleText = null;
    this.isCinematicPlaying = false;
    this.collidedObject = null;
    this.lastSpawnSide = "left"; // Track the last spawn side (left or right)

    // Game objects container
    this.gameObjects = this.scene.add.group();

    this.phonecallAudio = null; // Looping ringtone for the phone call scene
  }

  create() {
    // Create the text object for subtitles, but keep it invisible for now
    this.subtitleText = this.scene.add
      .text(this.scene.scale.width / 2, this.scene.scale.height * 0.5, "", {
        font: "30px Arial",
        fill: "#FFFFFF",
        align: "center",
      })
      .setOrigin(0.5, 0.5)
      .setDepth(10000)
      .setVisible(false);

    // Set up collision events
    this.setupCollisionEvents();
  }

  executeAction(action) {
    switch (action) {
      case "phonecall":
        console.log("Executing phone call action");
        // Play the 'nokia' audio in a loop with a specified volume
        if (!this.phonecallAudio) {
          this.phonecallAudio = this.scene.sound.add("nokia", { loop: true });
          this.phonecallAudio.play({ volume: 0.05 });
        }
      // ... (the rest of executeAction and the remaining methods,
      // including setupCollisionEvents, are not shown in this excerpt)
    }
  }
}
```
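The excerpt stops partway through `executeAction`, so the format of `cinematicsData` is not shown. As a hedged illustration of how an index-based cinematic player can step through its entries, here is a Phaser-free sketch. The entry shape (`subtitle`, `action`), the `endcall` action and the `CinematicSequencer` class are hypothetical; in the real class, subtitles would go into `subtitleText` and actions to `executeAction`.

```javascript
// Hypothetical data: each entry is assumed to hold an optional subtitle
// and an optional action name (the real format is not shown).
const cinematicsData = [
  { subtitle: "Line 1", action: "phonecall" },
  { subtitle: "Line 2" },
  { action: "endcall" },
];

// Phaser-free stand-in for the sequencing part of a Cinematic class:
// steps through the entries in order and forwards actions to a callback
// that plays the role of executeAction.
class CinematicSequencer {
  constructor(data, executeAction) {
    this.data = data;
    this.executeAction = executeAction;
    this.currentCinematicIndex = 0;
    this.isCinematicPlaying = false;
  }

  start() {
    this.currentCinematicIndex = 0;
    this.isCinematicPlaying = this.data.length > 0;
  }

  // Plays one entry and returns its subtitle ("" if it has none),
  // or null once the sequence has finished.
  next() {
    if (!this.isCinematicPlaying) return null;
    const entry = this.data[this.currentCinematicIndex];
    if (entry.action) this.executeAction(entry.action);
    this.currentCinematicIndex += 1;
    if (this.currentCinematicIndex >= this.data.length) {
      this.isCinematicPlaying = false;
    }
    return entry.subtitle ?? "";
  }
}

const actions = [];
const seq = new CinematicSequencer(cinematicsData, (a) => actions.push(a));
seq.start();
const shown = [seq.next(), seq.next(), seq.next(), seq.next()];
console.log(JSON.stringify(shown)); // ["Line 1","Line 2","",null]
console.log(JSON.stringify(actions)); // ["phonecall","endcall"]
```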

- The experience must start with a screen giving instructions and wait for user input (button / key / mouse / etc.) before starting (The main menu waits for the user to click.)

- After the experience is completed, there must be a way to start a new session (without restarting the sketch) (After the story, the game returns to the main menu.)

- Interaction design (is clear to user what they are controlling, discoverability, use of signifiers, use of cognitive mapping, etc.) (We have super simple keyboard or guitar input instructions.)
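The menu, story, and back-to-menu loop from the checklist can be summarized as a tiny state machine. This is a sketch only: the state names and the `SessionFlow` class are hypothetical, and in Phaser each transition would more likely be a scene switch with `this.scene.start(key)` using the project's own scene keys.

```javascript
// Hypothetical state names for the session loop: menu -> story -> menu
const State = Object.freeze({ MENU: "MENU", STORY: "STORY" });

class SessionFlow {
  constructor() {
    this.state = State.MENU; // begin on the instruction / main menu screen
    this.sessions = 0;
  }

  // User input on the menu (a click in this project) starts a new session
  handleStartInput() {
    if (this.state !== State.MENU) return false; // ignored while the story runs
    this.state = State.STORY;
    this.sessions += 1;
    return true;
  }

  // Called when the story ends: go back to the menu instead of reloading
  handleStoryComplete() {
    if (this.state === State.STORY) this.state = State.MENU;
  }
}

const flow = new SessionFlow();
flow.handleStartInput();
flow.handleStoryComplete();
flow.handleStartInput(); // a second session without restarting the sketch
console.log(flow.state, flow.sessions); // STORY 2
```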