Concept: Recreating one of my favorite childhood games, Brick Breaker

For this project, I chose to create a soothing, musical version of the classic Brick Breaker game using the p5.js library. The player controls a paddle that bounces a ball to break the bricks at the top of the screen, moving the paddle horizontally with the left and right arrow keys. The goal is to break all the bricks without letting the ball fall below the paddle. The game gives feedback through visual cues: the ball bounces off objects, bricks disappear when hit, and a score is shown. Sound effects and background music further enhance the experience.

Designing the Code: Elaborating on Important Areas

1) Ball Behavior: Within the Ball class, I define the behavior of the ball. This includes its movement across the screen, detection of collisions with other objects (such as the paddle and bricks), and rendering on the canvas. This encapsulation allows for clear organization and modularization of ball-related functionality.

2) Paddle Control: The Paddle class covers the movement and display of the paddle. It handles user input from the keyboard to move the paddle horizontally across the screen, ensuring precise control for the player.

3) Brick Management: Each brick in the game is represented by the Brick class, which stores the brick's position and size and draws it on the canvas. Bricks are created by createBricks() and removed from the bricks array when the ball hits them during gameplay.

4) User Interaction: The mousePressed function responds to mouse clicks by starting the game from the title screen or restarting it after a game over. Clicks during play are ignored, so the player can't accidentally reset a game in progress.
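To make the ball and paddle behaviour described above more concrete, here is a minimal plain-JavaScript sketch of one frame of movement. It assumes the ball and paddle are simple objects. It replaces p5's constrain(), width and keyIsDown() with ordinary parameters so that it runs outside the browser. The names stepBall, stepPaddle and clamp are hypothetical and are not part of the game code.

```javascript
// Plain-JavaScript stand-in for p5's constrain() (assumption: same semantics)
const clamp = (v, lo, hi) => Math.min(Math.max(v, lo), hi);

// Move the ball one step and reflect it off the left, right and top walls,
// like Ball.update(). Returns true if the ball reached the bottom (game over).
function stepBall(ball, canvasWidth, canvasHeight) {
  ball.x += ball.speedX;
  ball.y += ball.speedY;
  if (ball.x < ball.radius || ball.x > canvasWidth - ball.radius) {
    ball.speedX *= -1;
  }
  if (ball.y < ball.radius) {
    ball.speedY *= -1;
    return false;
  }
  return ball.y > canvasHeight - ball.radius;
}

// Move the paddle for whichever arrow keys are held, then keep it on screen,
// like Paddle.update(). leftDown/rightDown replace keyIsDown() (assumption).
function stepPaddle(paddle, leftDown, rightDown, canvasWidth) {
  if (leftDown) paddle.x -= paddle.speed;
  if (rightDown) paddle.x += paddle.speed;
  paddle.x = clamp(paddle.x, 0, canvasWidth - paddle.width);
}

// Usage on a 500 x 400 canvas
const ball = { x: 495, y: 200, speedX: 5, speedY: -5, radius: 10 };
console.log(stepBall(ball, 500, 400), ball.speedX); // false -5
const paddle = { x: 5, width: 100, speed: 10 };
stepPaddle(paddle, true, false, 500);
console.log(paddle.x); // 0
```

Clamping the paddle after applying the key input keeps the update simple: the paddle can never leave the canvas, no matter how long a key is held.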

Additional functions, such as createBricks and resetGame, are responsible for initializing game elements (such as bricks) and resetting the game state, respectively. These functions streamline the codebase and improve readability by encapsulating repetitive tasks.
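The grid arithmetic inside createBricks() can be checked in isolation. The sketch below computes the same positions in plain JavaScript using the values of the game's global variables (3 rows, 5 columns, 80 x 20 bricks, 10 px padding, offsets 50 and 30). The function name brickPositions is hypothetical.

```javascript
// Compute brick positions the same way createBricks() does: column c and
// row r are offset by (brick size + padding) from the top-left offset.
function brickPositions({ rows, cols, width, height, padding, offsetTop, offsetLeft }) {
  const positions = [];
  for (let c = 0; c < cols; c++) {
    for (let r = 0; r < rows; r++) {
      positions.push({
        x: c * (width + padding) + offsetLeft,
        y: r * (height + padding) + offsetTop,
      });
    }
  }
  return positions;
}

const layout = brickPositions({
  rows: 3, cols: 5, width: 80, height: 20,
  padding: 10, offsetTop: 50, offsetLeft: 30,
});
console.log(layout.length); // 15
console.log(layout[layout.length - 1]); // { x: 390, y: 110 }
```

With these values the last column ends at x = 390 + 80 = 470, so the grid fits inside the 500-pixel-wide canvas.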

By breaking down the code into these specific components, I ensure a clear and organized structure, facilitating easier maintenance and future development of the game for the midterm project.

Minimizing Risk: Code I’m Proud Of

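```javascript
// Excerpt from the Ball class
class Ball {
  // ... constructor, reset() and update() omitted ...

  // Draw the ball as a red circle
  display() {
    fill(255, 0, 0);
    ellipse(this.x, this.y, this.radius * 2);
  }

  // Bounce off the paddle, but only while moving down and overlapping it,
  // so the ball can't flip direction repeatedly inside the paddle
  checkCollision() {
    if (
      this.speedY > 0 &&
      this.x > paddle.x &&
      this.x < paddle.x + paddle.width &&
      this.y + this.radius > paddle.y &&
      this.y - this.radius < paddle.y + paddle.height
    ) {
      this.speedY *= -1;
      paddleHitSound.play();
    }
  }

  // Reverse vertical direction after hitting a brick
  bounce() {
    this.speedY *= -1;
    ballHitSound.play();
  }

  // Circle-rectangle test: find the point on the brick closest to the
  // ball's center and check whether it lies within the radius
  hits(brick) {
    let closestX = constrain(this.x, brick.x, brick.x + brick.width);
    let closestY = constrain(this.y, brick.y, brick.y + brick.height);
    let distance = dist(this.x, this.y, closestX, closestY);
    return distance < this.radius;
  }
}
```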

One of the most complex parts of the project is collision detection between the ball and the other game objects (paddle, bricks, and walls). Accurate collision detection is crucial to the game’s mechanics and to the overall user experience. To minimize the risk of errors in this area, I used two strategies:

1) Collision Detection Algorithm: In the Ball class, the hits(brick) method checks whether the ball has collided with a brick. It uses constrain() to find the point on the brick’s rectangle closest to the ball’s center, then uses dist() to measure the distance from the center to that point. If that distance is smaller than the ball’s radius, the ball and the brick overlap. Because the closest point can lie on an edge or a corner, the same test works for hits from any side.

2) Testing with Edge Cases: To validate the collision detection, I repeatedly tested edge cases, including the ball striking the corners of bricks and touching several objects at once. After systematically testing these cases and analyzing the results, I concluded that the collision detection behaves as expected under different conditions.
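The closest-point test used by hits(brick) can be tried outside p5 along with a few of the edge cases mentioned above. In this sketch, Math.min/Math.max and Math.hypot stand in for p5's constrain() and dist(). The function name circleHitsRect and the test coordinates are illustrative only.

```javascript
// Circle-vs-rectangle test: clamp the ball's center onto the rectangle to
// get the closest point, then compare the center-to-point distance with the radius.
function circleHitsRect(cx, cy, radius, rect) {
  const closestX = Math.min(Math.max(cx, rect.x), rect.x + rect.width);
  const closestY = Math.min(Math.max(cy, rect.y), rect.y + rect.height);
  const distance = Math.hypot(cx - closestX, cy - closestY);
  return distance < radius;
}

const brick = { x: 30, y: 50, width: 80, height: 20 }; // first brick in the grid
// Below the brick, 5 px from its bottom edge: hit
console.log(circleHitsRect(70, 75, 10, brick)); // true
// Off the bottom-right corner at distance sqrt(8^2 + 8^2) ≈ 11.3: miss
console.log(circleHitsRect(118, 78, 10, brick)); // false
// Closer to the same corner at distance sqrt(6^2 + 6^2) ≈ 8.5: hit
console.log(circleHitsRect(116, 76, 10, brick)); // true
// Exactly touching (distance equals radius) is a miss with the strict < test
console.log(circleHitsRect(120, 60, 10, brick)); // false
```

The corner case at (118, 78) shows why the closest-point approach matters. A simple bounding-box check would report an overlap there, because the ball's square [108, 128] x [68, 88] intersects the brick. The circle itself never touches the brick.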

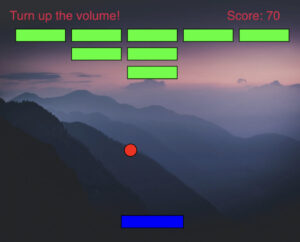

Here’s the Game:

Features & Game Mechanics:

– The game opens with a start screen showing the title “Brick Breaker” and a “Click to start” message.

– The player controls the paddle using the left and right arrow keys.

– The ball bounces off the paddle, walls, and bricks.

– When the ball hits a brick, the brick disappears and the player earns 10 points.

– If the ball falls below the paddle, the game ends. The game also ends once every brick has been broken.

– When the game ends, a “Game Over!” message appears with the final score and a “Click to replay” prompt.

– Clicking on the canvas after the game ends resets the game, allowing the player to replay.
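The start, play, and game-over flow listed above is driven entirely by two boolean flags, gameStarted and gameOver. The sketch below models those transitions in plain JavaScript. currentScreen, handleClick and checkEnd are hypothetical helper names. handleClick mirrors only the flag and score changes that mousePressed() makes through resetGame(); the ball and brick resets are left out.

```javascript
// Which screen draw() shows, derived from the two flags
function currentScreen(state) {
  if (!state.gameStarted && !state.gameOver) return "start";
  if (state.gameOver) return "gameOver";
  return "playing";
}

// Like mousePressed(): a click on the start or game-over screen (re)starts
// the game; clicks during play are ignored
function handleClick(state) {
  if (!state.gameStarted || state.gameOver) {
    state.gameStarted = true;
    state.gameOver = false;
    state.score = 0;
  }
}

// The two ending conditions checked while playing
function checkEnd(state, ballAtBottom, bricksLeft) {
  if (ballAtBottom || bricksLeft === 0) state.gameOver = true;
}

const state = { gameStarted: false, gameOver: false, score: 0 };
console.log(currentScreen(state)); // start
handleClick(state);
console.log(currentScreen(state)); // playing
state.score = 40;
checkEnd(state, true, 11); // ball reached the bottom with 11 bricks left
console.log(currentScreen(state)); // gameOver
handleClick(state);
console.log(currentScreen(state), state.score); // playing 0
```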

Additional Features:

– Sound effects are played when the ball hits the paddle and when it hits a brick.

– The player earns points for each brick broken, and the score is displayed on the screen.

– Background music plays throughout the game to enhance the gaming experience.

Here’s a snapshot taken during gameplay:

Complete Code Snippet (With Comments):

```javascript
// Define global variables
let backgroundImage;
let ball;
let paddle;
let bricks = [];
let brickRowCount = 3;
let brickColumnCount = 5;
let brickWidth = 80;
let brickHeight = 20;
let brickPadding = 10;
let brickOffsetTop = 50; // Adjusted value
let brickOffsetLeft = 30;
let score = 0;
let ballHitSound;
let paddleHitSound;
let backgroundMusic;
let gameStarted = false;
let gameOver = false;

// Preload function to load external assets (loadSound() requires the p5.sound library)
function preload() {
  backgroundImage = loadImage('background_image.jpg'); // Replace 'background_image.jpg' with the path to your image file
  ballHitSound = loadSound('ball_hit.mp3');
  paddleHitSound = loadSound('paddle_hit.mp3');
  backgroundMusic = loadSound('background_music.mp3');
}

// Setup function to initialize canvas and objects
function setup() {
  createCanvas(500, 400); // Set the canvas size to match the background image size
  paddle = new Paddle(); // Create the paddle first: the ball is positioned on it
  ball = new Ball();
  createBricks();
  // Browsers may keep audio suspended until the user interacts with the page,
  // so the music may only become audible after the first click
  backgroundMusic.loop();
}

// Draw function to render graphics
function draw() {
  background(backgroundImage); // Draw the background image

  // Display the title screen only when the game hasn't started and isn't over
  if (!gameStarted && !gameOver) {
    textSize(32);
    textAlign(CENTER, CENTER);
    text("Brick Breaker", width / 2, height / 2 - 40);
    textSize(20);
    text("Click to start", width / 2, height / 2);
  } else {
    // Run game logic only when the game is started and not over
    if (gameStarted && !gameOver) {
      ball.update();
      ball.checkCollision();
      ball.display();
      paddle.display();
      paddle.update();

      // Display bricks and handle collisions. Bounce at most once per frame,
      // so hitting two bricks at the same time doesn't cancel the bounce
      let bounced = false;
      for (let i = bricks.length - 1; i >= 0; i--) {
        bricks[i].display();
        if (ball.hits(bricks[i])) {
          if (!bounced) {
            ball.bounce();
            bounced = true;
          }
          bricks.splice(i, 1);
          score += 10;
        }
      }

      // End the game once all bricks are destroyed
      if (bricks.length === 0) {
        gameOver = true;
      }

      // Display score
      fill('rgb(216,32,71)');
      textSize(20);
      textAlign(LEFT);
      text("Turn up the volume! Score: " + score, 20, 30);
    }

    // Display game over message
    if (gameOver) {
      fill('rgb(32,213,32)');
      textSize(32);
      textAlign(CENTER, CENTER);
      text("Game Over! Score: " + score, width / 2, height / 2);
      text("Click to replay", width / 2, height / 2 + 40);
    }
  }
}

// Mouse pressed function to start/restart the game
function mousePressed() {
  if (!gameStarted || gameOver) {
    resetGame();
  }
}

// Reset game state and objects
function resetGame() {
  gameStarted = true;
  gameOver = false;
  score = 0;
  ball.reset();
  createBricks();
}

// Function to create bricks in a grid
function createBricks() {
  bricks = [];
  for (let c = 0; c < brickColumnCount; c++) {
    for (let r = 0; r < brickRowCount; r++) {
      let x = c * (brickWidth + brickPadding) + brickOffsetLeft;
      let y = r * (brickHeight + brickPadding) + brickOffsetTop;
      bricks.push(new Brick(x, y));
    }
  }
}

// Ball class
class Ball {
  constructor() {
    this.reset();
  }

  // Reset ball position and speed. The radius is set first because
  // the starting y position depends on it
  reset() {
    this.radius = 10;
    this.x = paddle.x + paddle.width / 2;
    this.y = paddle.y - this.radius;
    this.speedX = 5;
    this.speedY = -5;
  }

  // Update ball position
  update() {
    this.x += this.speedX;
    this.y += this.speedY;

    // Reflect ball off walls
    if (this.x < this.radius || this.x > width - this.radius) {
      this.speedX *= -1;
    }
    if (this.y < this.radius) {
      this.speedY *= -1;
    } else if (this.y > height - this.radius) {
      gameOver = true; // Game over if ball reaches bottom
    }
  }

  // Display ball
  display() {
    fill(255, 0, 0);
    ellipse(this.x, this.y, this.radius * 2);
  }

  // Check collision with paddle: only while the ball is moving down and
  // overlapping the paddle, so it can't flip direction repeatedly inside it
  checkCollision() {
    if (
      this.speedY > 0 &&
      this.x > paddle.x &&
      this.x < paddle.x + paddle.width &&
      this.y + this.radius > paddle.y &&
      this.y - this.radius < paddle.y + paddle.height
    ) {
      this.speedY *= -1;
      paddleHitSound.play(); // Play paddle hit sound
    }
  }

  // Bounce ball off a brick
  bounce() {
    this.speedY *= -1;
    ballHitSound.play(); // Play ball hit sound
  }

  // Check collision with a brick
  hits(brick) {
    let closestX = constrain(this.x, brick.x, brick.x + brick.width);
    let closestY = constrain(this.y, brick.y, brick.y + brick.height);
    let distance = dist(this.x, this.y, closestX, closestY);
    return distance < this.radius;
  }
}

// Paddle class
class Paddle {
  constructor() {
    this.width = 100;
    this.height = 20;
    this.x = width / 2 - this.width / 2;
    this.y = height - 50;
    this.speed = 10;
  }

  // Display paddle
  display() {
    fill(0, 0, 255);
    rect(this.x, this.y, this.width, this.height);
  }

  // Update paddle position based on user input and keep it on the canvas
  update() {
    if (keyIsDown(LEFT_ARROW)) {
      this.x -= this.speed;
    }
    if (keyIsDown(RIGHT_ARROW)) {
      this.x += this.speed;
    }
    this.x = constrain(this.x, 0, width - this.width);
  }
}

// Brick class
class Brick {
  constructor(x, y) {
    this.x = x;
    this.y = y;
    this.width = brickWidth;
    this.height = brickHeight;
  }

  // Display brick
  display() {
    fill(0, 255, 0);
    rect(this.x, this.y, this.width, this.height);
  }
}
```

Ideas to improve this project for the midterm:

1) User Experience: I’m thinking of enhancing the user experience with features such as visual effects, animations, difficulty levels, and more interactive elements (themes) to make the game more engaging and enjoyable for players.

2) Saving High Scores: Implement functionality that lets players save their progress (high scores) and compare their current score with their previous ones.

3) Immersive Audio Design: Add audio effects or soundscapes tied to gameplay events and interactions to create a more engaging, immersive audiovisual experience for the user.
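As a starting point for the high-score idea, the sketch below keeps a sorted top-5 list and reports whether a finished game set a new best. It is plain JavaScript, and the name recordScore and the limit of five entries are hypothetical choices. For persistence between sessions, one option could be p5.js's storeItem() and getItem(), which wrap the browser's localStorage. The sketch does not depend on them.

```javascript
// Hypothetical helper: add a finished game's score to a sorted top-N list
// (highest first) and report whether it beats the previous best.
function recordScore(highScores, score, maxEntries = 5) {
  const previousBest = highScores.length > 0 ? highScores[0] : 0;
  const updated = [...highScores, score]
    .sort((a, b) => b - a)
    .slice(0, maxEntries);
  return { highScores: updated, isNewBest: score > previousBest };
}

let result = recordScore([], 60);
console.log(result.highScores, result.isNewBest); // [ 60 ] true
result = recordScore(result.highScores, 150);
console.log(result.highScores, result.isNewBest); // [ 150, 60 ] true
result = recordScore(result.highScores, 90);
console.log(result.highScores, result.isNewBest); // [ 150, 90, 60 ] false
```

Returning a new array instead of modifying the old one makes it easy to compare the present score with the previous list before saving.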