Concept

I am super jealous 😤 of people who are extremely good at one thing. Hayao Miyazaki doesn’t have to think that much; he just keeps making animated movies, and he’s the greatest artist I look up to. Slash and Jimi Hendrix don’t get confused; they just play guitar all the time, because they are the greatest musicians. Joseph Fourier did just mathematics and physics, with a little history on the side… but he was still a mathematician.

My lifelong problem is that I specialize in everything, in extreme depth. When you are a competent artist, engineer, musician, mathematician, roboticist, researcher, poet, game developer, filmmaker, and storyteller all at once, it’s really, really hard to focus on one thing…

which is a problem that can be solved by doing EVERYTHING.

Hence, for my midterm, I am fully utilizing all (well, a fraction) of my skills to create the most beautiful interactive narrative game ever executed in the history of the p5js editor, where I control the game by improvising on my guitar in real time. The story will be told in the form of a poem I wrote.

Ladies and gents, I present to you G-POET, the Guitar-Powered Operatic Epic Tale 🎸: a live performance with your host, Pi.

This is not showing off. This is devotion, to prove my eternal loyalty to and love of the arts! I don’t even care about the grades. For me, art is a matter of life or death. The beauty and the story arc of this narrative should reflect the overflowing, exploding emotions I have for the arts.

Also, despite it being a super short game made with JavaScript, I want it to be on the same level as, if not better than, the most stunning cinematic 2D games ever made: titles like Ori and the Blind Forest, Forgotton Anne, and Hollow Knight. Those took whole studios to reach that quality; I want to show what a one-man army can achieve in two weeks.

Design

I am saving the story for the hype, so below are sneak peeks of the bare minimum. It’s an open world. No more spoilers.

(If the p5 sketch below loads, use the arrow keys to move left and right and Space to jump.)

Below is my demonstration of controlling my game character with the guitar. If you’re thinking “but I can’t play the guitar”… no, no, no. Pi plays the guitar and narrates the story to you. You interact with him and tell him, “Oh, I wanna go to that shop.” Pi will go, “Sure, so I eventually went to that shop…”, improvise some tunes on the spot to accompany his narration, and the game character will do exactly that in real time.

See? There’s a human storyteller in the loop. If this is not interactive, I don’t know what is.

People play games alone… This is pathetic.

~ Pi

💡 Because let’s face it: people play games alone… This is pathetic. My live performance using the G-POET system will bring back the vibe of a community hanging out around a bonfire, listening to stories from a storyteller… the same experience, but on steroids, cranked up to 11.

And no, to my knowledge, a guitar-assisted interactive performance of a real-time Ghibli-style game has never been done before.

(In the video, you might notice that low-pitched notes make the player go right, and high-pitched notes make it go left. There is some noise in the detection; I will filter it out later.)
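Noise like this often shows up as isolated stray readings that briefly jump into the wrong frequency range. One common fix is to take a running median over the last few readings before choosing a direction. Below is a minimal JavaScript sketch of that idea. The left range (400, 700] matches the C++ excerpt below. The right range of 80 to 250 Hz, the window size of 5, and the `PitchCommandFilter` name are hypothetical.

```javascript
// Running-median filter that turns noisy frequency readings (Hz) into movement commands.
// Hypothetical ranges: (400, 700] matches the C++ excerpt's left range; the right range is assumed.
const DEFAULT_RANGES = { left: [400, 700], right: [80, 250] };

class PitchCommandFilter {
  constructor(windowSize = 5, ranges = DEFAULT_RANGES) {
    this.windowSize = windowSize;
    this.ranges = ranges;
    this.history = [];
  }

  // Add one reading and return "left", "right", or "stop" based on the current median.
  push(freq) {
    this.history.push(freq);
    if (this.history.length > this.windowSize) this.history.shift();

    const sorted = [...this.history].sort((a, b) => a - b);
    const median = sorted[Math.floor(sorted.length / 2)];

    // Same interval convention as the C++ excerpt: lower bound exclusive, upper bound inclusive
    const inRange = ([lo, hi]) => median > lo && median <= hi;
    if (inRange(this.ranges.left)) return "left";
    if (inRange(this.ranges.right)) return "right";
    return "stop";
  }
}
```

A median of 5 readings ignores up to two outliers in a row. The cost is latency: when you really do switch notes, the command changes only on the third new reading.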

To plug my guitar into the p5js editor, I wrote a native C++ app that calculates the frequency of my guitar notes with a Fast Fourier Transform (FFT) and maps particular frequency ranges to key press events, which are then propagated to the p5js browser tab. Here is a fraction of the C++ code that simulates the key presses:

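```cpp
#include <ApplicationServices/ApplicationServices.h>  // CGKeyCode (macOS)
#include <sys/socket.h>                                // recv()
#include <iostream>
#include <stdexcept>
#include <string>

// Excerpt from the receive loop: sockfd is an already-connected socket, and
// simulateKeyPress(keyCode, keyDown) is defined elsewhere in the app.
char buffer[1024];
bool leftArrowPressed = false;
bool rightArrowPressed = false;
CGKeyCode leftArrowKeyCode = 0x7B;   // macOS virtual key code for the left arrow key
CGKeyCode rightArrowKeyCode = 0x7C;  // macOS virtual key code for the right arrow key

while (true) {
    // Read at most sizeof(buffer) - 1 bytes so buffer[n] = '\0' stays in bounds
    ssize_t n = recv(sockfd, buffer, sizeof(buffer) - 1, 0);
    if (n > 0) {
        buffer[n] = '\0';

        int qValue;
        try {
            qValue = std::stoi(buffer);
        } catch (const std::exception&) {
            continue;  // skip packets that do not start with a number
        }
        // std::cout << "Received Q Value: " << qValue << std::endl;

        if (qValue > 400 && qValue <= 700 && !leftArrowPressed) {
            // Debug Log
            std::cout << "Moving Left" << std::endl;
            simulateKeyPress(leftArrowKeyCode, true);  // key down
            leftArrowPressed = true;

            if (rightArrowPressed) {
                // Debug Log
                std::cout << "Stop" << std::endl;
                simulateKeyPress(rightArrowKeyCode, false);  // key up
                rightArrowPressed = false;
            }
        } else if ((qValue <= 400 || qValue > 700) && leftArrowPressed) {
            // Debug Log
            std::cout << "Stop" << std::endl;
            simulateKeyPress(leftArrowKeyCode, false);  // key up
            leftArrowPressed = false;
        }

        // ... (right-arrow handling is not part of this excerpt)
    } else {
        break;  // connection closed (0) or recv error (-1)
    }
}
```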
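The FFT part of the native app is not shown in this report. To illustrate the idea, here is a JavaScript sketch of the simplest FFT pitch estimate. It is written in JavaScript to match the game side, although the real app is C++. The steps are: window a block of samples, run a radix-2 FFT, and report the frequency of the strongest bin. The function names, the Hann window, and the block size are assumptions, not the app's actual code. One caveat: a plucked guitar string can have overtones as strong as the fundamental, so plain peak-picking can jump by an octave.

```javascript
// In-place iterative radix-2 Cooley-Tukey FFT. re and im must share a power-of-two length.
function fft(re, im) {
  const n = re.length;
  if (n === 0 || (n & (n - 1)) !== 0) throw new Error("length must be a power of two");

  // Reorder the input into bit-reversed index order
  for (let i = 1, j = 0; i < n; i++) {
    let bit = n >> 1;
    for (; j & bit; bit >>= 1) j ^= bit;
    j ^= bit;
    if (i < j) {
      [re[i], re[j]] = [re[j], re[i]];
      [im[i], im[j]] = [im[j], im[i]];
    }
  }

  // Butterflies: combine DFTs of size len/2 into DFTs of size len
  for (let len = 2; len <= n; len <<= 1) {
    const half = len / 2;
    const ang = (-2 * Math.PI) / len;
    for (let start = 0; start < n; start += len) {
      for (let k = 0; k < half; k++) {
        const wr = Math.cos(ang * k);
        const wi = Math.sin(ang * k);
        const a = start + k;
        const b = a + half;
        const vr = re[b] * wr - im[b] * wi;
        const vi = re[b] * wi + im[b] * wr;
        re[b] = re[a] - vr;
        im[b] = im[a] - vi;
        re[a] += vr;
        im[a] += vi;
      }
    }
  }
}

// Estimate the dominant frequency (Hz) of a block of samples by picking the strongest bin.
function dominantFrequency(samples, sampleRate) {
  const n = samples.length;
  const re = new Float64Array(n);
  const im = new Float64Array(n);
  // Hann window to reduce spectral leakage between bins
  for (let i = 0; i < n; i++) {
    re[i] = samples[i] * 0.5 * (1 - Math.cos((2 * Math.PI * i) / (n - 1)));
  }
  fft(re, im);

  // Search bins 1 .. n/2 - 1 (skip DC; for real input the upper half mirrors the lower half)
  let bestBin = 1;
  let bestPower = -1;
  for (let k = 1; k < n / 2; k++) {
    const power = re[k] * re[k] + im[k] * im[k];
    if (power > bestPower) {
      bestPower = power;
      bestBin = k;
    }
  }
  return (bestBin * sampleRate) / n;
}
```

Each bin is sampleRate / n wide. At 8192 Hz with 1024 samples that is 8 Hz, which is too coarse to separate neighbouring notes near the low E string (about 82 Hz). Larger blocks or interpolation between bins would help there.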

Of course, I need characters. Why should I browse the web for low-quality graphics (or ones that do not meet my art standards) when I can create my own graphics tailored specifically to this game?

So I created a sprite sheet of myself as a game character, in my leather jacket, with my red hair tie, leather boots and sunglasses.

But isn’t that time-consuming? Not if you are lazy and automate it 🫵. You just model yourself as an Unreal MetaHuman, plug the FBX model into Mixamo to rig it, bring it into Unity, do the wind and cloth simulation, and animate. Then apply non-photorealistic cel shading to give it a hand-drawn feel, use Unity Recorder to capture each animation frame, clean up the images a bit more with ffmpeg, and assemble the sprite sheet in TexturePacker. Voilà… a quality sprite sheet of “your own” in half an hour.

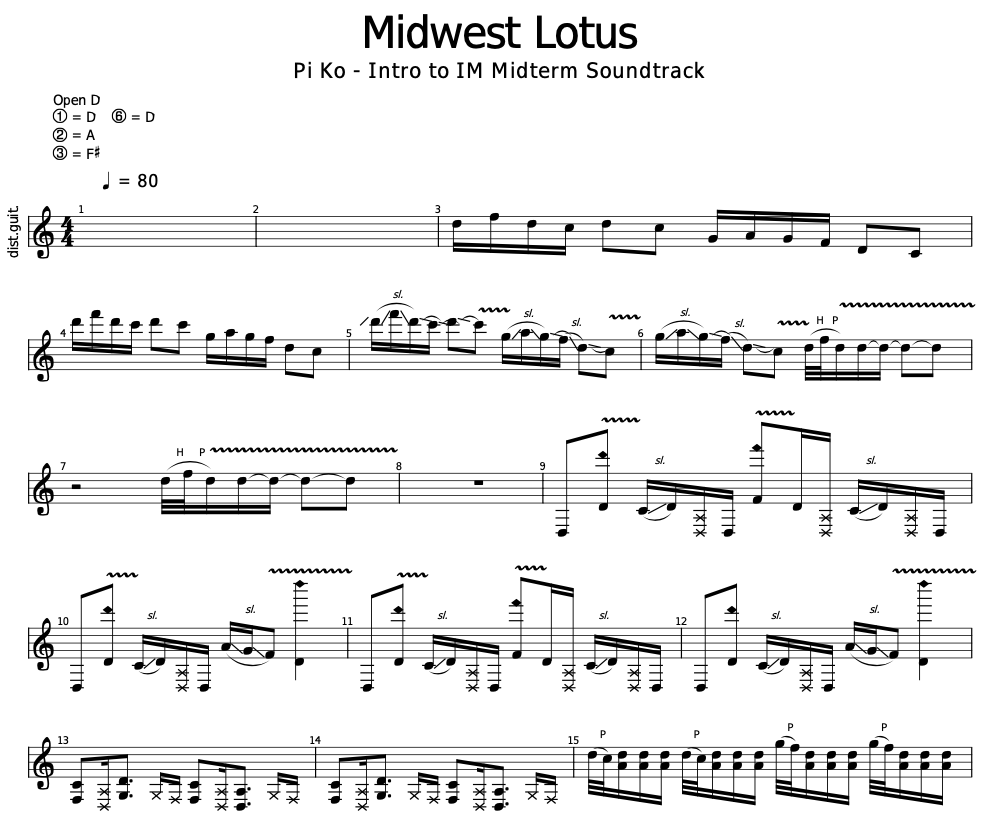

Also, since I improvise on the guitar during the storytelling performance, I need the game’s background music to (1) be tailored specifically to my game and (2) follow a particular key and chord progression, so that I can improvise on the spot in real time without messing up. Hence, I am composing the background music myself; below is a backing track from the game.
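Keeping the backing track in one key is what makes improvising without messing up possible: notes from that key’s scale are generally safe choices over its chord progression. Below is a small JavaScript sketch of the arithmetic. It uses 12-tone equal temperament, where MIDI note m has frequency f = 440 · 2^((m - 69)/12), with A4 = 440 Hz. The game’s actual key isn’t stated, so A minor pentatonic is just an example, and all names are hypothetical. The same `frequencyToMidi` conversion could also turn detected guitar frequencies into note names.

```javascript
// 12-tone equal temperament, tuned to A4 = 440 Hz (MIDI note 69).
const A4_MIDI = 69;
const A4_HZ = 440;
const NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"];

// Scale formulas as semitone offsets from the tonic
const SCALES = {
  major: [0, 2, 4, 5, 7, 9, 11],
  naturalMinor: [0, 2, 3, 5, 7, 8, 10],
  minorPentatonic: [0, 3, 5, 7, 10],
};

// f = 440 * 2^((m - 69) / 12)
function midiToFrequency(m) {
  return A4_HZ * Math.pow(2, (m - A4_MIDI) / 12);
}

// Inverse of midiToFrequency, rounded to the nearest semitone
function frequencyToMidi(f) {
  return Math.round(A4_MIDI + 12 * Math.log2(f / A4_HZ));
}

// Scientific pitch name, e.g. 69 -> "A4", 40 -> "E2"
function noteName(m) {
  return NOTE_NAMES[((m % 12) + 12) % 12] + (Math.floor(m / 12) - 1);
}

// True if MIDI note m belongs to the scale built on tonic (a pitch class 0-11, C = 0)
function inScale(m, tonic, scale) {
  return scale.includes((((m - tonic) % 12) + 12) % 12);
}

// Names of all scale notes between two MIDI notes, inclusive
function scaleNotesInRange(tonic, scale, low, high) {
  const names = [];
  for (let m = low; m <= high; m++) {
    if (inScale(m, tonic, scale)) names.push(noteName(m));
  }
  return names;
}
```

For example, `scaleNotesInRange(9, SCALES.minorPentatonic, 40, 52)` lists the A minor pentatonic notes from the open low E string (MIDI 40) up one octave: E2, G2, A2, C3, D3, E3.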

In terms of code, there are a lot, a lot, a lot of refactored classes I am implementing, including the Data Loader, Player State Machine, Animation Controllers, Weather System, NPC System, Parallax Scrolling, UI System, Dialogue System, the Cinematic and Cutscene Systems, and the Post-Processing Systems and Shader Loaders. I will elaborate more in the actual report, but for now, here is an example: my Sprite Sheet loader class.

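```javascript
// Thin wrapper around a Phaser 3 Scene's loader (scene.load) and
// animation manager (scene.anims) for texture-atlas sprite sheets.
class TextureAtlasLoader {
  constructor(scene) {
    this.scene = scene;
  }

  // Queue a texture atlas (image + JSON frame data, e.g. exported from TexturePacker).
  // Call this from the scene's preload().
  loadAtlas(key, textureURL, atlasURL) {
    this.scene.load.atlas(key, textureURL, atlasURL);
  }

  // Build an animation from frames named prefix + zero-padded index + suffix.
  // Call this after loading has finished (e.g. in create()).
  createAnimation(key, atlasKey, animationDetails) {
    const frameNames = this.scene.anims.generateFrameNames(atlasKey, {
      start: animationDetails.start,
      end: animationDetails.end,
      zeroPad: animationDetails.zeroPad,
      prefix: animationDetails.prefix,
      suffix: animationDetails.suffix,
    });

    this.scene.anims.create({
      key: key,
      frames: frameNames,
      frameRate: animationDetails.frameRate,
      repeat: animationDetails.repeat, // -1 loops forever
    });
  }
}
```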
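The player state machine from the list of systems is not shown in this report. Here is a minimal JavaScript sketch of the pattern. It assumes three hypothetical states (idle, walk, jump) and a transition table. Events that are not valid in the current state are ignored, which rules out things like starting a walk in mid-air.

```javascript
// Minimal table-driven state machine. States and events here are hypothetical.
class PlayerStateMachine {
  constructor(initial = "idle") {
    this.state = initial;
    // state -> { event: nextState }
    this.transitions = {
      idle: { move: "walk", jump: "jump" },
      walk: { stop: "idle", jump: "jump" },
      jump: { land: "idle" },
    };
    this.listeners = [];
  }

  // Register a callback (prevState, nextState) fired on every successful transition
  onChange(fn) {
    this.listeners.push(fn);
  }

  // Apply an event; returns false and stays put if the event is not allowed right now
  send(event) {
    const next = this.transitions[this.state]?.[event];
    if (!next) return false;
    const prev = this.state;
    this.state = next;
    for (const fn of this.listeners) fn(prev, next);
    return true;
  }
}
```

A listener registered with `onChange` is a natural place to switch animations. For example, it could call a Phaser sprite's `play()` with the animation keys created through `TextureAtlasLoader`.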

I am also writing some of the GLSL fragment shaders myself, so that the look can be enhanced to match studio-quality games. Below is one of the in-game shaders (it creates a plasma texture overlay on the entire screen):

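```glsl
precision mediump float;

uniform float uTime;             // time, drives the animation
uniform vec2 uResolution;        // screen size in pixels
uniform sampler2D uMainSampler;  // the rendered game frame
varying vec2 outTexCoord;        // texture coordinate from the vertex shader

#define MAX_ITER 4

void main(void)
{
    // Normalized screen position, scaled and offset into plasma space
    vec2 v_texCoord = gl_FragCoord.xy / uResolution;
    vec2 p = v_texCoord * 8.0 - vec2(20.0);
    vec2 i = p;
    float c = 1.0;
    float inten = .05;

    // Iteratively warp the coordinates and accumulate intensity
    for (int n = 0; n < MAX_ITER; n++)
    {
        float t = uTime * (1.0 - (3.0 / float(n + 1)));
        i = p + vec2(cos(t - i.x) + sin(t + i.y),
                     sin(t - i.y) + cos(t + i.x));
        c += 1.0 / length(vec2(p.x / (sin(i.x + t) / inten),
                               p.y / (cos(i.y + t) / inten)));
    }
    c /= float(MAX_ITER);
    c = 1.5 - sqrt(c);

    // Dark teal base color, brightened sharply where c approaches 0.95
    vec4 texColor = vec4(0.0, 0.01, 0.015, 1.0);
    texColor.rgb *= (1.0 / (1.0 - (c + 0.05)));

    // Add the plasma on top of the original frame
    vec4 pixel = texture2D(uMainSampler, outTexCoord);
    gl_FragColor = pixel + texColor;
}
```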
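To check or tweak the plasma math without running the whole game, the per-pixel overlay term can be ported to the CPU. Below is a JavaScript sketch that mirrors the shader line by line and returns `texColor` before it is added to the screen pixel. The function name is hypothetical. One difference from the GPU: there the final color is clamped when written to a normal framebuffer, while this port returns unclamped values.

```javascript
// CPU port of the plasma overlay term from the fragment shader above (one pixel).
// fragX/fragY play the role of gl_FragCoord (pixel index + 0.5 for pixel centers).
const MAX_ITER = 4;

function plasmaOverlay(fragX, fragY, width, height, time) {
  const px = (fragX / width) * 8.0 - 20.0;
  const py = (fragY / height) * 8.0 - 20.0;
  let ix = px;
  let iy = py;
  let c = 1.0;
  const inten = 0.05;

  for (let n = 0; n < MAX_ITER; n++) {
    const t = time * (1.0 - 3.0 / (n + 1));
    // Both components use the previous i, as in the GLSL vec2 constructor
    const nx = px + Math.cos(t - ix) + Math.sin(t + iy);
    const ny = py + Math.sin(t - iy) + Math.cos(t + ix);
    ix = nx;
    iy = ny;
    c += 1.0 / Math.hypot(px / (Math.sin(ix + t) / inten), py / (Math.cos(iy + t) / inten));
  }
  c /= MAX_ITER;
  c = 1.5 - Math.sqrt(c);

  // Red stays 0; green and blue share the same gain, alpha is 1
  const gain = 1.0 / (1.0 - (c + 0.05));
  return [0.0, 0.01 * gain, 0.015 * gain, 1.0];
}
```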

Frightening / Challenging Aspects

Yes, there were a lot of frightening aspects. I frightened my computer by forcing it to do exactly what I want.

Challenges? Well, I just imagine what I want. In the name of my true and genuine love for the arts, God revealed to me, through the angels, all the code and skills required to turn my thoughts into reality.

Hence, implementing this project was like the lyrics of Ariana Grande’s “7 Rings”:

I see it, I like it, I want it, I got it (Yep)

Risk Prevention

Nope, no risk. The project is already completed, so I know there are no risks to prevent; I am just showing a fraction of it because this is the midterm “progress” report.