I wanted my midterm project to be really personal, so that I would be motivated to code and push myself, and the only topic that instantly came to mind was music. I am an avid music lover and have used music as a coping mechanism during times of (lowkey) depression, so I naturally feel strongly about it. I decided to create my own version of the “coffee shop experience”, which really struck a chord with me. My rendition of the “coffee shop” centers around music. A vintage radio with on/off, skip and pause buttons, all of which require user interaction, will play a sequence of 5(?) songs (more details are listed below). Each song has its own unique background relating to a specific memory, and each background has a dynamic element. It’s important to note that the song title and artist name will be displayed on the radio for each song that plays.

Checklist:

1) create a radio using p5.js (JavaScript).

(on/off button: when “on”, music plays; plus a skip button and a pause button)

(off button: turns off the music + randomizes the sequence of songs).

(make sure songs autoplay on a loop)

2) (image and sound): different songs (x5) have different backgrounds.

3) (lists/arrays and on-screen text): the song title shows on the display when a song is playing.
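The button behaviour in the checklist can be sketched as a small playlist model in plain JavaScript. This is only a rough sketch under assumptions: the class name `RadioPlaylist`, the helper `shuffled`, and the song objects with `title` and `artist` fields are all hypothetical, and “on loop” is assumed to mean that the sequence wraps back to the first song after the last one. It only keeps track of state; starting and stopping the actual sound files (for example with p5.sound) would still need to be hooked in.

```javascript
// Hypothetical playlist model for the radio; it tracks state only, no audio.

// Fisher-Yates shuffle: returns a new array with the same items in random order.
function shuffled(items) {
  const copy = items.slice();
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1));
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

class RadioPlaylist {
  constructor(songs) {
    this.songs = songs.slice(); // array of { title, artist } objects (assumed shape)
    this.index = 0;             // position of the current song
    this.isOn = false;
    this.isPaused = false;
  }

  // On/off button: switching off also reshuffles the sequence.
  togglePower() {
    this.isOn = !this.isOn;
    this.isPaused = false;
    if (!this.isOn) {
      this.songs = shuffled(this.songs);
      this.index = 0;
    }
  }

  // Skip button (could also be called when a song ends).
  // Wrapping with % is an assumption about what "on loop" means.
  skip() {
    if (!this.isOn) return;
    this.index = (this.index + 1) % this.songs.length;
    this.isPaused = false;
  }

  // Pause button: only does something while the radio is on.
  togglePause() {
    if (this.isOn) this.isPaused = !this.isPaused;
  }

  // Text for the radio display; empty while the radio is off.
  displayText() {
    if (!this.isOn) return "";
    const song = this.songs[this.index];
    return song.title + " - " + song.artist;
  }
}
```

Keeping the state in one class like this means the drawing code only has to ask `displayText()` what to show, and each button only has to call one method.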

Specific worries/ possible challenges:

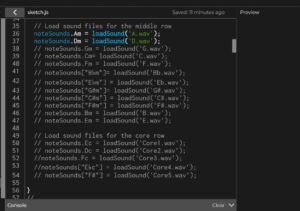

1) keeping track of specific parts of the code:

It’s imperative that my code is well organised and commented, all the more so because I’m still in the process of building a foundation in coding. Keeping track of variable names, classes and functions is the most important part. One solution could be to work in separate sketch.js files for specific parts (for example, the backgrounds), especially for sections of code that will be wrapped in classes. Once I’ve made sure a part works, I can always copy and paste it into the main sketch.js file. I’ll also need to regularly copy and paste my main file’s code into a Google Doc / Word document so that it’s easier to keep track and stay organised, since I find that the p5.js window size limits readability.

2) localising “click” function to specific shapes:

Throughout my assignments where interactivity is present, I’ve used “click” functions that result in a change, but the click has never been localised to a specific area. It was general and random, which allowed flexibility. Because of this, I am naturally worried about the complexity of the code. With this comes a growing anxiety about the numerous conditions (if statements and for loops) that depend heavily on how the user decides to interact. To me, this particular segment looks like a big, knotted ball of thread that I have to unpick strand by strand, so at the moment it seems incredibly daunting… Of course, nothing is impossible with an internet search (in-depth if necessary). As far as I remember, localising the “click” function to specific shapes has been touched upon in one of the Coding Train videos, and the same goes for if statements and for loops. Furthermore, there is always the “coffee shop experience” example, which I can use for guidance or as a reference.
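One way to untangle the “click on a specific shape” problem might be to separate the geometry check from the click handler. The sketch below is only an illustration under assumptions: the `buttons` array, its names, positions and sizes, and the helpers `insideRect`, `insideCircle` and `buttonAt` are all hypothetical. The only p5.js pieces it relies on are `mousePressed()`, which p5.js calls on each click, and `mouseX` / `mouseY`, which hold the cursor position.

```javascript
// Hypothetical button layout; names, positions and sizes are placeholders.
// Rectangles use their top-left corner (x, y); circles use centre (x, y) and radius r.
const buttons = [
  { name: "power", shape: "circle", x: 60, y: 200, r: 20 },
  { name: "pause", shape: "rect", x: 100, y: 180, w: 50, h: 40 },
  { name: "skip", shape: "rect", x: 170, y: 180, w: 50, h: 40 },
];

// True when the point (px, py) lies inside the rectangle.
function insideRect(px, py, x, y, w, h) {
  return px >= x && px <= x + w && py >= y && py <= y + h;
}

// True when the point lies within radius r of the circle's centre (cx, cy).
function insideCircle(px, py, cx, cy, r) {
  const dx = px - cx;
  const dy = py - cy;
  return dx * dx + dy * dy <= r * r;
}

// Returns the name of the first button containing the point, or null for a miss.
function buttonAt(px, py, buttonList) {
  for (const b of buttonList) {
    const hit = b.shape === "circle"
      ? insideCircle(px, py, b.x, b.y, b.r)
      : insideRect(px, py, b.x, b.y, b.w, b.h);
    if (hit) return b.name;
  }
  return null;
}

// In a p5.js sketch this runs on every click; outside p5.js it is never called.
function mousePressed() {
  const clicked = buttonAt(mouseX, mouseY, buttons);
  if (clicked !== null) {
    console.log("clicked " + clicked); // placeholder for the real button action
  }
}
```

With this split, the if statements for “which button was hit” live in one small function, and `mousePressed()` stays short no matter how many buttons the radio ends up with.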