Final idea:

While I was thinking about my final project, this idea crossed my mind. We are surrounded by the four classical elements (air, earth, fire, and water), and we interact with them every day, whether we notice it or not. I wanted to bring that interaction to life through this project. The idea is to create four generative art pieces, one for each element, each representing its element in its own way (through different patterns, colors, etc.). An audience member will then be asked to choose which element they want to interact with. The Processing sketch for that element will move with the person interacting with it, so that they feel as if they are controlling the element, or are in harmony with it.

I will be working solo.

Material/space needs:

For the project to succeed, I will definitely need:

- Kinect and Kinect adapter

- projector, plus space to set it up (moving the generative art around on a small screen would be very underwhelming)

- speakers (for each element's sound effects)

Hardest parts:

I think the hardest parts will be getting the Kinect to locate the person accurately and connecting it to the Processing sketches; hopefully Daniel Shiffman's videos will come in handy. I have already started working on all the generative art pieces.
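One small part of connecting the Kinect to the sketches is converting a tracked position from the Kinect's 640×480 depth image into the projector canvas's coordinates. Below is a minimal sketch in plain Java. It assumes a hypothetical 1920×1080 projector canvas, and `KinectToCanvas` is an illustrative name. The formula is the same one Processing's built-in `map()` uses, so inside a real sketch you could simply call `map()`.

```java
// Hypothetical helper: converts a tracked point from Kinect depth-image
// space (640x480) into projector canvas space. The canvas size passed in
// (e.g. 1920x1080) is an assumption, not part of the project spec.
class KinectToCanvas {
    static final float KINECT_W = 640, KINECT_H = 480;

    // Same formula as Processing's map(value, start1, stop1, start2, stop2).
    static float map(float v, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((v - start1) / (stop1 - start1));
    }

    // Returns {x, y} on a canvas of the given size, clamped to its edges so a
    // noisy reading just outside the depth image never lands off-screen.
    static float[] toCanvas(float kx, float ky, float canvasW, float canvasH) {
        float x = map(kx, 0, KINECT_W, 0, canvasW);
        float y = map(ky, 0, KINECT_H, 0, canvasH);
        x = Math.max(0, Math.min(canvasW, x));
        y = Math.max(0, Math.min(canvasH, y));
        return new float[] {x, y};
    }

    public static void main(String[] args) {
        float[] p = toCanvas(320, 240, 1920, 1080);
        System.out.println(p[0] + ", " + p[1]); // prints "960.0, 540.0"
    }
}
```

Clamping is a design choice: when the person stands at the edge of the Kinect's view, the element stays pinned to the edge of the projection instead of disappearing.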

Progress so far:

This is what I have in mind for each element's generative art piece:

For water, I have already started working on it, and this is what I have so far:

For air (wind), I am planning to use Perlin noise to create something like this:

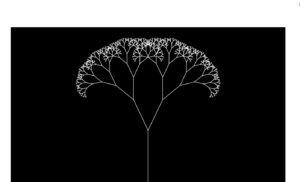
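A common way to get a wind-like look from Perlin noise is a flow field. You sample noise at each point, turn the value into an angle, and let particles follow those angles. In Processing, the built-in `noise()` (which returns values from 0 to 1) would supply the noise. This plain-Java sketch instead includes a small 2D implementation of Ken Perlin's improved gradient noise as a stand-in, which returns values centered on 0. The permutation table is shuffled from a fixed seed, which is my assumption, not Processing's table. `WindField`, the noise scale, and the particle speed are illustrative.

```java
import java.util.Random;

// Wind sketch: particles following a Perlin-noise flow field.
class WindField {
    private final int[] perm = new int[512];

    // Builds the permutation table from a seed (assumption: not Processing's own table).
    WindField(long seed) {
        int[] p = new int[256];
        for (int i = 0; i < 256; i++) p[i] = i;
        Random rng = new Random(seed);
        for (int i = 255; i > 0; i--) {              // Fisher-Yates shuffle
            int j = rng.nextInt(i + 1);
            int tmp = p[i]; p[i] = p[j]; p[j] = tmp;
        }
        for (int i = 0; i < 512; i++) perm[i] = p[i & 255]; // doubled to avoid wrapping
    }

    private static double fade(double t) { return t * t * t * (t * (t * 6 - 15) + 10); }
    private static double lerp(double a, double b, double t) { return a + t * (b - a); }

    // Dot product of one of 8 gradient directions with the offset (x, y).
    private static double grad(int hash, double x, double y) {
        switch (hash & 7) {
            case 0: return x + y;
            case 1: return -x + y;
            case 2: return x - y;
            case 3: return -x - y;
            case 4: return x;
            case 5: return -x;
            case 6: return y;
            default: return -y;
        }
    }

    // 2D gradient noise, roughly in [-1, 1]; exactly 0 at integer lattice points.
    double noise(double x, double y) {
        int xi = (int) Math.floor(x) & 255, yi = (int) Math.floor(y) & 255;
        double xf = x - Math.floor(x), yf = y - Math.floor(y);
        double u = fade(xf), v = fade(yf);
        int aa = perm[perm[xi] + yi], ab = perm[perm[xi] + yi + 1];
        int ba = perm[perm[xi + 1] + yi], bb = perm[perm[xi + 1] + yi + 1];
        double x1 = lerp(grad(aa, xf, yf), grad(ba, xf - 1, yf), u);
        double x2 = lerp(grad(ab, xf, yf - 1), grad(bb, xf - 1, yf - 1), u);
        return lerp(x1, x2, v);
    }

    // Wind direction (radians) at canvas point (px, py); 'time' makes the field drift.
    double angleAt(double px, double py, double scale, double time) {
        return noise(px * scale, py * scale + time) * Math.PI * 2;
    }

    // Moves a particle one step of length 'speed' along the field; returns {x, y}.
    double[] step(double px, double py, double speed, double scale, double time) {
        double a = angleAt(px, py, scale, time);
        return new double[] {px + Math.cos(a) * speed, py + Math.sin(a) * speed};
    }

    public static void main(String[] args) {
        WindField wind = new WindField(42);
        double[] p = {100, 100};
        for (int i = 0; i < 5; i++) {
            p = wind.step(p[0], p[1], 2.0, 0.01, i * 0.005);
            System.out.printf("%.2f, %.2f%n", p[0], p[1]);
        }
    }
}
```

A small noise scale (0.01 here) makes neighboring particles move in similar directions, which is what reads as "wind" rather than random jitter.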

For earth, I plan to use Daniel Shiffman's recursive tree as a reference and add more detail to it.
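The core of a recursive tree is a function that draws one branch and then calls itself twice. Each call draws a shorter, rotated child branch, and the recursion stops when the length drops below a cutoff. Below is a minimal sketch in plain Java. The shrink factor (0.67), branching angle (25°), cutoff (4 pixels), and the `RecursiveTree` name are illustrative assumptions. Instead of drawing with Processing's `line()`, it collects each segment as `{x1, y1, x2, y2}` so the segments can be counted or rendered later.

```java
import java.util.ArrayList;
import java.util.List;

class RecursiveTree {
    static final double SHRINK = 0.67;               // each child is 67% of its parent
    static final double SPREAD = Math.toRadians(25); // rotation of each child from its parent
    static final double MIN_LEN = 4;                 // don't grow branches shorter than this

    // Records the branch starting at (x, y), then recurses into two children.
    // Angles use screen coordinates (y grows downward), so -PI/2 points up.
    static void branch(List<double[]> out, double x, double y, double angle, double len) {
        double x2 = x + Math.cos(angle) * len;
        double y2 = y + Math.sin(angle) * len;
        out.add(new double[] {x, y, x2, y2});
        double next = len * SHRINK;
        if (next >= MIN_LEN) {
            branch(out, x2, y2, angle - SPREAD, next); // left child
            branch(out, x2, y2, angle + SPREAD, next); // right child
        }
    }

    // Grows a whole tree from a root point with a vertical trunk.
    static List<double[]> grow(double rootX, double rootY, double trunkLen) {
        List<double[]> segments = new ArrayList<>();
        branch(segments, rootX, rootY, -Math.PI / 2, trunkLen);
        return segments;
    }

    public static void main(String[] args) {
        List<double[]> tree = grow(320, 480, 100);
        System.out.println(tree.size() + " branches"); // prints "511 branches"
    }
}
```

With a 100-pixel trunk, lengths stay at or above 4 for nine levels (100 × 0.67⁸ ≈ 4.06), giving 2⁹ − 1 = 511 branches. Extra detail could be layered onto the same recursion, for example by randomizing `SPREAD` per branch or drawing leaves wherever `next` falls below the cutoff.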

For fire, I intend to use The Coding Train's Coding Challenge #103 to create a fire-and-smoke effect.
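A classic way to build a pixel fire is with a grid of heat values. Every frame, the bottom row is refuelled with full heat. Each cell above then takes the average of its neighbors in the row below, minus a small cooling amount, so heat rises and fades. Below is a plain-Java sketch of that general buffer-averaging technique. Challenge #103 may differ in its details; for instance, it may use a noise-based cooling map rather than a constant. The grid size, constant cooling value, three-neighbor average, and the `FireGrid` name are all assumptions.

```java
// Buffer-averaging fire: heat[y][x] in [0, 1], row h-1 is the bottom (fuel) row.
class FireGrid {
    final int w, h;
    double[][] heat;
    final double cooling; // constant heat lost per row risen (an assumption)

    FireGrid(int w, int h, double cooling) {
        this.w = w;
        this.h = h;
        this.cooling = cooling;
        heat = new double[h][w];
    }

    // One animation step: refuel the bottom row, then shift heat up one row.
    void step() {
        double[][] next = new double[h][w];
        for (int x = 0; x < w; x++) next[h - 1][x] = 1.0; // fuel along the bottom
        for (int y = 0; y < h - 1; y++) {
            for (int x = 0; x < w; x++) {
                // Average of the cell below and its left/right neighbors (wrapping at edges)
                double below = heat[y + 1][x];
                double left = heat[y + 1][(x - 1 + w) % w];
                double right = heat[y + 1][(x + 1) % w];
                double avg = (below + left + right) / 3.0;
                next[y][x] = Math.max(0, avg - cooling); // cool as it rises
            }
        }
        heat = next;
    }

    public static void main(String[] args) {
        FireGrid fire = new FireGrid(8, 10, 0.1);
        for (int i = 0; i < 20; i++) fire.step();
        for (double[] row : fire.heat) System.out.printf("%.2f%n", row[0]); // top row first
    }
}
```

In a Processing sketch, each heat value could be mapped to a color with `lerpColor()`, for example from dark red to yellow. Replacing the constant cooling with a value that varies per pixel (such as one taken from `noise()`) tends to break the flames into smoke-like wisps.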

Regarding the Kinect part of the project, I checked out the Kinect from the Interactive Media lab but realized that I need a Kinect-specific adapter, which I don't have yet. I did, however, work on the code for tracking the person's average location with the Kinect, and this is what I have so far (reference: Daniel Shiffman):

```java
// Requires the "Open Kinect for Processing" library (Sketch > Import Library).
// The Kinect class used here is the one for the original Kinect (v1).
import org.openkinect.freenect.*;
import org.openkinect.processing.*;

// The Kinect stuff is happening in another class
KinectTracker tracker;
Kinect kinect;

void setup() {
  // 640x480 for the depth image, plus a strip at the bottom for the status text
  size(640, 520);
  kinect = new Kinect(this);
  tracker = new KinectTracker();
}

void draw() {
  background(255);

  // Run the tracking analysis
  tracker.track();
  // Show the image
  tracker.display();

  // Let's draw the raw location
  PVector v1 = tracker.getPos();
  fill(50, 100, 250, 200);
  noStroke();
  ellipse(v1.x, v1.y, 20, 20);

  // Let's draw the "lerped" location
  PVector v2 = tracker.getLerpedPos();
  fill(100, 250, 50, 200);
  noStroke();
  ellipse(v2.x, v2.y, 20, 20);

  // Display some info
  int t = tracker.getThreshold();
  fill(0);
  text("threshold: " + t + " " + "framerate: " + int(frameRate) + " " +
    "UP increase threshold, DOWN decrease threshold", 10, 500);
}

// Adjust the threshold with key presses
void keyPressed() {
  int t = tracker.getThreshold();
  if (key == CODED) {
    if (keyCode == UP) {
      t += 5;
      tracker.setThreshold(t);
    } else if (keyCode == DOWN) {
      t -= 5;
      tracker.setThreshold(t);
    }
  }
}

class KinectTracker {
  // Depth threshold: raw depth values below this count as "the person"
  int threshold = 745;
  // Raw location
  PVector loc;
  // Interpolated location
  PVector lerpedLoc;
  // Depth data
  int[] depth;
  // What we'll show the user
  PImage display;

  KinectTracker() {
    // This is an awkward use of a global variable here,
    // but doing it this way for simplicity
    kinect.initDepth();
    kinect.enableMirror(true);
    // Make a blank image
    display = createImage(kinect.width, kinect.height, RGB);
    // Set up the vectors
    loc = new PVector(0, 0);
    lerpedLoc = new PVector(0, 0);
  }

  void track() {
    // Get the raw depth as an array of integers
    depth = kinect.getRawDepth();
    // Being overly cautious here
    if (depth == null) return;

    float sumX = 0;
    float sumY = 0;
    float count = 0;

    for (int x = 0; x < kinect.width; x++) {
      for (int y = 0; y < kinect.height; y++) {
        int offset = x + y * kinect.width;
        // Grabbing the raw depth
        int rawDepth = depth[offset];
        // Testing against threshold
        if (rawDepth < threshold) {
          sumX += x;
          sumY += y;
          count++;
        }
      }
    }
    // As long as we found something
    if (count != 0) {
      loc = new PVector(sumX / count, sumY / count);
    }

    // Interpolating the location, doing it arbitrarily for now
    lerpedLoc.x = PApplet.lerp(lerpedLoc.x, loc.x, 0.3f);
    lerpedLoc.y = PApplet.lerp(lerpedLoc.y, loc.y, 0.3f);
  }

  PVector getLerpedPos() {
    return lerpedLoc;
  }

  PVector getPos() {
    return loc;
  }

  void display() {
    PImage img = kinect.getDepthImage();
    // Being overly cautious here
    if (depth == null || img == null) return;
    // Make sure the depth image's pixel array is loaded before reading it
    img.loadPixels();

    // Going to rewrite the depth image to show which pixels are in threshold
    // A lot of this is redundant, but this is just for demonstration purposes
    display.loadPixels();
    for (int x = 0; x < kinect.width; x++) {
      for (int y = 0; y < kinect.height; y++) {
        int offset = x + y * kinect.width;
        // Raw depth
        int rawDepth = depth[offset];
        int pix = x + y * display.width;
        if (rawDepth < threshold) {
          // A red color instead
          display.pixels[pix] = color(150, 50, 50);
        } else {
          display.pixels[pix] = img.pixels[offset];
        }
      }
    }
    display.updatePixels();

    // Draw the image
    image(display, 0, 0);
  }

  int getThreshold() {
    return threshold;
  }

  void setThreshold(int t) {
    threshold = t;
  }
}
```
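Since the adapter isn't here yet, the tracking logic can still be checked without the hardware. The average-point calculation in `track()` is the centroid of every pixel whose raw depth is under the threshold, followed by a lerp that moves 30% of the way toward that point each frame. The plain-Java sketch below repeats that math on a synthetic depth array. `AverageTracker`, `fakeFrame`, and the fake "person" rectangle are hypothetical test scaffolding, not part of the Open Kinect library.

```java
// Same math as KinectTracker.track(), but runnable on fake depth data.
class AverageTracker {
    int threshold = 745;     // same default as KinectTracker
    float locX, locY;        // raw average location
    float lerpedX, lerpedY;  // smoothed location

    void track(int[] depth, int width, int height) {
        float sumX = 0, sumY = 0, count = 0;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                if (depth[x + y * width] < threshold) { // pixel is close enough
                    sumX += x;
                    sumY += y;
                    count++;
                }
            }
        }
        if (count != 0) { // keep the last location if nothing is close enough
            locX = sumX / count;
            locY = sumY / count;
        }
        // Processing's lerp(start, stop, amt) = start + (stop - start) * amt
        lerpedX += (locX - lerpedX) * 0.3f;
        lerpedY += (locY - lerpedY) * 0.3f;
    }

    // Hypothetical helper: a frame where everything is far away except one near rectangle.
    static int[] fakeFrame(int w, int h, int rx, int ry, int rw, int rh) {
        int[] depth = new int[w * h];
        java.util.Arrays.fill(depth, 2047);   // 2047 is the largest 11-bit raw value
        for (int y = ry; y < ry + rh; y++)
            for (int x = rx; x < rx + rw; x++)
                depth[x + y * w] = 600;       // closer than the 745 threshold
        return depth;
    }

    public static void main(String[] args) {
        AverageTracker t = new AverageTracker();
        int[] frame = fakeFrame(640, 480, 100, 200, 40, 80);
        for (int i = 0; i < 50; i++) t.track(frame, 640, 480);
        System.out.println(t.locX + ", " + t.locY); // prints "119.5, 239.5"
    }
}
```

The loops here run row by row (y outer), which walks the array in memory order. The result is identical to the original x-outer loop. The lerp factor trades responsiveness for smoothness: at 0.3, the smoothed point closes about 30% of the remaining gap each frame.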