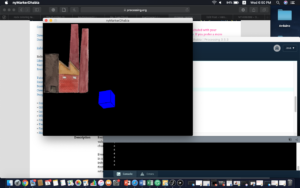

The prototype stage of the final project has been going smoothly. I tested the Kinect v2 code, with some additional calibration, on a container filled with water, and the Kinect successfully captured the ripples and waves. Since I am only detecting blue pixels, I placed blue acrylics at the bottom of the water container for this prototype test.
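
The blue-pixel filter is the core of the detection step, so here is a minimal sketch of how such a count could work. It is written in plain Java because Processing is Java-based, and it assumes the frame arrives as packed ARGB ints, the same layout as Processing's `pixels[]`. The class, the method names, and the `minBlue` and `margin` thresholds are hypothetical and are not taken from the actual project code; real values would come from calibration.

```java
// Hypothetical sketch: count "blue" pixels in a frame of packed ARGB ints,
// the same layout Processing uses for pixels[].
public class BluePixelCounter {

    // A pixel counts as blue when its blue channel is bright enough and
    // clearly stronger than both red and green. minBlue and margin are
    // illustrative thresholds that would need calibrating for the real setup.
    public static boolean isBlue(int argb, int minBlue, int margin) {
        int r = (argb >> 16) & 0xFF;
        int g = (argb >> 8) & 0xFF;
        int b = argb & 0xFF;
        return b >= minBlue && b - r >= margin && b - g >= margin;
    }

    // Count how many pixels in the frame pass the blue test.
    public static int countBluePixels(int[] pixels, int minBlue, int margin) {
        int count = 0;
        for (int p : pixels) {
            if (isBlue(p, minBlue, margin)) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        int[] frame = {
            0xFF0000FF, // pure blue: counted
            0xFFFFFFFF, // white: blue is high but not dominant, ignored
            0xFF2040C0, // bluish: counted
            0xFF000000  // black: ignored
        };
        System.out.println(countBluePixels(frame, 100, 40));
    }
}
```

Requiring blue to beat red and green by a margin, rather than only checking the blue channel, keeps bright white glare (such as reflections off the water surface) from being counted as blue.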

For the sound, I added code from Aaron that uses the Processing Sound library. The code currently calculates the minimum pixel count, because calibration errors such as reflections from the container mean some pixels are always detected. It then uses the minimum and maximum pixel counts to map each pixel count to an amplitude between 0 and 1. The code below creates an effect where the more ripples there are in a given section, the louder the sound becomes.

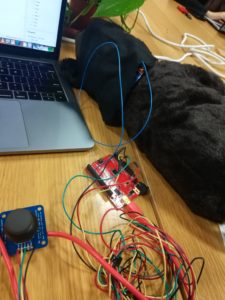
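The min/max mapping described above is a linear rescale of the pixel count. The sketch below shows that arithmetic in plain Java; it reproduces what Processing's `map()` followed by `constrain()` would do. The class name and the example `minCount` and `maxCount` values are hypothetical, and the sketch assumes both bounds have already been measured during calibration, which may differ from how the project code obtains them.

```java
// Hypothetical sketch: turn a section's blue-pixel count into an amplitude.
public class RippleAmplitude {

    // Linear map of count from [minCount, maxCount] to [0, 1], clamped,
    // equivalent to Processing's constrain(map(count, minCount, maxCount, 0, 1), 0, 1).
    public static float amplitude(int count, int minCount, int maxCount) {
        if (maxCount <= minCount) {
            return 0f; // degenerate range: avoid dividing by zero
        }
        float amp = (float) (count - minCount) / (maxCount - minCount);
        return Math.max(0f, Math.min(1f, amp));
    }

    public static void main(String[] args) {
        int minCount = 1200; // hypothetical baseline caused by reflections
        int maxCount = 5200; // hypothetical count during strong ripples
        int[] sectionCounts = {1000, 1200, 3200, 5200, 6000};
        for (int c : sectionCounts) {
            System.out.println(c + " -> " + amplitude(c, minCount, maxCount));
        }
    }
}
```

Subtracting the minimum first means the constant baseline from reflections maps to silence, and clamping keeps counts that fall outside the calibrated range inside 0 to 1. In Processing, the result could then be passed to a `SoundFile`'s `amp()` method.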

After Aaron introduced me to capacitive touch sensing, specifically Touche for Arduino: Advanced Touching Sensor, I decided to make it part of the project, which changes how the final version will look. The capacitive touch sensor will trigger different loops or sounds: for instance, one finger plays one sound, while two fingers play two sounds together or a different sound on its own. The pixel counts from the waves detected by the Kinect v2 will then set the reverb amount for all of the loops.

Below is the prototype video of the Touche advanced touch sensing library, using water and fingers as triggers for different music loops: