Progress:

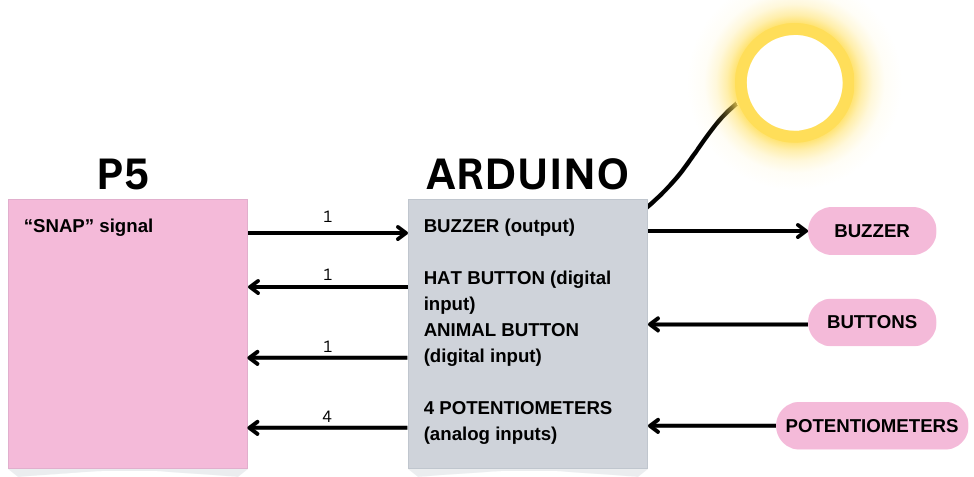

As mentioned in my final project concept, I intend to build an “electronic buddy,” or “art robot” (which sounds better than my previous term). So far, I have established serial communication between p5 and Arduino. Specifically, I use the handpose model from the ml5 library to detect which region of the screen the hand is in and send the corresponding index to the Arduino. The sketch divides the left half of the canvas into a 3x3 grid with indices 0 to 8, and it updates the index only when all 21 hand landmarks fall inside the same region.

As this is only a preliminary test, two motors are connected to the Arduino. They rotate in one direction when the hand is in the upper third of the screen, stop when it is in the middle third, and rotate in the opposite direction when it is in the lower third. Communication is currently one-way, from p5 to the Arduino; the Arduino sends back only placeholder data to keep the handshake going. I hope to add meaningful communication from the Arduino to p5 as I progress. The p5 sketch, the Arduino sketch, and a video of the test run are included below for reference.
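
The mapping from region index to motor behaviour can be sketched as a small lookup. This is only an illustration, assuming the indices run 0 to 8 row by row across the 3x3 grid; the helper names `commandForIndex` and `encodeMessage` and the labels "forward" and "reverse" are hypothetical and do not appear in either sketch.

```javascript
// Hypothetical helper: maps a region index (0-8, row by row) to a motor command.
// The labels are arbitrary names for "one direction" and "the opposite direction".
function commandForIndex(index) {
  if (!Number.isInteger(index) || index < 0 || index > 8) {
    return "none"; // no hand detected yet, or an invalid index
  }
  const row = Math.floor(index / 3); // 0 = upper, 1 = middle, 2 = lower third
  return ["forward", "stop", "reverse"][row];
}

// Hypothetical helper: formats the index as one newline-terminated message,
// the same shape the p5 sketch writes to the serial port.
function encodeMessage(index) {
  return index + "\n";
}

console.log(commandForIndex(1)); // forward
console.log(commandForIndex(4)); // stop
console.log(commandForIndex(8)); // reverse
```

Keeping the row calculation in one place makes it easy to give each of the nine cells its own behaviour later.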

p5 sketch:

let handpose;
let value = -1; // index of the region the hand is in; -1 until a hand is detected
let video;
let predictions = [];

function setup() {
  createCanvas(640, 480);
  video = createCapture(VIDEO);
  video.size(width, height);

  handpose = ml5.handpose(video, modelReady);

  // This sets up an event that fills the global variable "predictions"
  // with an array every time new hand poses are detected
  handpose.on("predict", results => {
    predictions = results;
  });

  // Hide the video element, and just show the canvas
  video.hide();
}

function modelReady() {
  console.log("Model ready!");
}

function draw() {
  if (!serialActive) {
    text("Press Space Bar to select Serial Port", 20, 30);
  } else {
    image(video, 0, 0, width, height);

    // Draw the detected keypoints and update the region index
    drawKeypoints();

    // Draw the 3x3 grid over the left half of the canvas
    for (let i = 0; i <= width / 2; i += (width / 2) / 3) {
      stroke(255, 0, 0);
      line(i, 0, i, height);
    }
    for (let i = 0; i <= height; i += height / 3) {
      stroke(255, 0, 0);
      line(0, i, width / 2, i);
    }
  }
}

function keyPressed() {
  if (key == " ") {
    // important to have in order to start the serial connection!!
    setUpSerial();
  }
}

function readSerial(data) {
  if (data != null) {
    //////////////////////////////////
    //SEND TO ARDUINO HERE (handshake)
    //////////////////////////////////
    let sendToArduino = value + "\n";
    writeSerial(sendToArduino);
  }
}

// Draws ellipses over the detected keypoints and records
// which region of the grid the hand is in
function drawKeypoints() {
  for (let i = 0; i < predictions.length; i += 1) {
    const prediction = predictions[i];
    // area[k] counts the landmarks that fall inside region k
    let area = [0, 0, 0, 0, 0, 0, 0, 0, 0];
    for (let j = 0; j < prediction.landmarks.length; j += 1) {
      const keypoint = prediction.landmarks[j];
      fill(0, 255, 0);
      noStroke();
      ellipse(keypoint[0], keypoint[1], 10, 10);

      // count the landmarks in each region
      // -- helps to detect the area the detected hand is in
      if (withinTopLeft(keypoint[0], keypoint[1])) {
        area[0] += 1;
      }
      if (withinTopCenter(keypoint[0], keypoint[1])) {
        area[1] += 1;
      }
      if (withinTopRight(keypoint[0], keypoint[1])) {
        area[2] += 1;
      }
      if (withinMiddleLeft(keypoint[0], keypoint[1])) {
        area[3] += 1;
      }
      if (withinMiddleCenter(keypoint[0], keypoint[1])) {
        area[4] += 1;
      }
      if (withinMiddleRight(keypoint[0], keypoint[1])) {
        area[5] += 1;
      }
      if (withinBottomLeft(keypoint[0], keypoint[1])) {
        area[6] += 1;
      }
      if (withinBottomCenter(keypoint[0], keypoint[1])) {
        area[7] += 1;
      }
      if (withinBottomRight(keypoint[0], keypoint[1])) {
        area[8] += 1;
      }
      // end of count
    }

    // update the index only if all 21 landmarks are in the same region
    for (let k = 0; k < area.length; k += 1) {
      if (area[k] == 21) {
        value = k;
      }
    }
  }
}

// Each function returns true if the point (x, y) is in a specific region.
// The grid covers the left half of the canvas. Lower bounds are inclusive
// and upper bounds exclusive, so a point on a grid line belongs to exactly
// one region instead of none.
function withinTopLeft(x, y) {
  return x >= 0 && x < (width / 2) / 3 &&
         y >= 0 && y < height / 3;
}

function withinTopCenter(x, y) {
  return x >= (width / 2) / 3 && x < 2 * (width / 2) / 3 &&
         y >= 0 && y < height / 3;
}

function withinTopRight(x, y) {
  return x >= 2 * (width / 2) / 3 && x < (width / 2) &&
         y >= 0 && y < height / 3;
}

function withinMiddleLeft(x, y) {
  return x >= 0 && x < (width / 2) / 3 &&
         y >= height / 3 && y < 2 * height / 3;
}

function withinMiddleCenter(x, y) {
  return x >= (width / 2) / 3 && x < 2 * (width / 2) / 3 &&
         y >= height / 3 && y < 2 * height / 3;
}

function withinMiddleRight(x, y) {
  return x >= 2 * (width / 2) / 3 && x < (width / 2) &&
         y >= height / 3 && y < 2 * height / 3;
}

function withinBottomLeft(x, y) {
  return x >= 0 && x < (width / 2) / 3 &&
         y >= 2 * height / 3 && y < height;
}

function withinBottomCenter(x, y) {
  return x >= (width / 2) / 3 && x < 2 * (width / 2) / 3 &&
         y >= 2 * height / 3 && y < height;
}

function withinBottomRight(x, y) {
  return x >= 2 * (width / 2) / 3 && x < (width / 2) &&
         y >= 2 * height / 3 && y < height;
}
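
The nine region checks could also be collapsed into a single index calculation. The following is a minimal sketch of that idea rather than the code used in the test run; `regionIndex` and `handRegion` are hypothetical names, and the grid size is passed in explicitly (in the p5 sketch it would be `width / 2` by `height`).

```javascript
// Hypothetical helper: returns the index (0-8, row by row) of the grid cell
// containing (x, y), or -1 if the point lies outside the grid.
function regionIndex(x, y, gridW, gridH) {
  if (x < 0 || x >= gridW || y < 0 || y >= gridH) {
    return -1;
  }
  // Math.min guards against floating-point rounding at the far edge
  const col = Math.min(2, Math.floor(x / (gridW / 3)));
  const row = Math.min(2, Math.floor(y / (gridH / 3)));
  return row * 3 + col;
}

// Hypothetical helper: returns the shared cell index if every landmark
// ([x, y, ...] arrays) lies in the same cell, otherwise -1.
function handRegion(landmarks, gridW, gridH) {
  if (landmarks.length === 0) {
    return -1;
  }
  const first = regionIndex(landmarks[0][0], landmarks[0][1], gridW, gridH);
  const allSame = landmarks.every(
    (p) => regionIndex(p[0], p[1], gridW, gridH) === first
  );
  return allSame ? first : -1;
}

console.log(regionIndex(50, 50, 320, 480));   // 0
console.log(regionIndex(200, 300, 320, 480)); // 4
console.log(regionIndex(400, 100, 320, 480)); // -1
```

Unlike the `area[i] == 21` check, `handRegion` does not depend on the number of landmarks the model returns.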

Arduino sketch:

const int ain1Pin = 3;
const int ain2Pin = 4;
const int pwmAPin = 5;

const int bin1Pin = 8;
const int bin2Pin = 7;
const int pwmBPin = 6;

void setup() {
  // Start serial communication so we can send data
  // over the USB connection to our p5js sketch
  Serial.begin(9600);

  pinMode(LED_BUILTIN, OUTPUT);

  pinMode(ain1Pin, OUTPUT);
  pinMode(ain2Pin, OUTPUT);
  pinMode(pwmAPin, OUTPUT); // not needed really

  pinMode(bin1Pin, OUTPUT);
  pinMode(bin2Pin, OUTPUT);
  pinMode(pwmBPin, OUTPUT); // not needed really

  // TEST BEGIN
  // turn in one direction, full speed
  analogWrite(pwmAPin, 255);
  analogWrite(pwmBPin, 255);
  digitalWrite(ain1Pin, HIGH);
  digitalWrite(ain2Pin, LOW);
  digitalWrite(bin1Pin, HIGH);
  digitalWrite(bin2Pin, LOW);

  // stay here for a second
  delay(1000);

  // slow down
  int speed = 255;
  while (speed--) {
    analogWrite(pwmAPin, speed);
    analogWrite(pwmBPin, speed);
    delay(20);
  }
  // TEST END

  // start the handshake
  while (Serial.available() <= 0) {
    digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data
    Serial.println("0,0"); // send a starting message
    delay(300); // wait 1/3 second
    digitalWrite(LED_BUILTIN, LOW);
    delay(50);
  }
}

void loop() {
  while (Serial.available()) {
    // sends dummy data to p5
    Serial.println("0,0");

    // led on while receiving data
    digitalWrite(LED_BUILTIN, HIGH);

    // gets value from p5
    int value = Serial.parseInt();

    // sets the motor direction from the region index
    if (Serial.read() == '\n') {
      if (value >= 0 && value <= 2) {
        // top row: rotate in one direction
        digitalWrite(ain1Pin, HIGH);
        digitalWrite(ain2Pin, LOW);
        digitalWrite(bin1Pin, HIGH);
        digitalWrite(bin2Pin, LOW);
        analogWrite(pwmAPin, 255);
        analogWrite(pwmBPin, 255);
      }
      else if (value >= 3 && value <= 5) {
        // middle row: stop
        analogWrite(pwmAPin, 0);
        analogWrite(pwmBPin, 0);
      }
      else if (value >= 6 && value <= 8) {
        // bottom row: rotate in the opposite direction
        digitalWrite(ain1Pin, LOW);
        digitalWrite(ain2Pin, HIGH);
        digitalWrite(bin1Pin, LOW);
        digitalWrite(bin2Pin, HIGH);
        analogWrite(pwmAPin, 255);
        analogWrite(pwmBPin, 255);
      }
      // any other value (such as the initial -1) leaves the motors unchanged
    }
  }

  // led off at end of reading
  digitalWrite(LED_BUILTIN, LOW);
}
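
The Arduino currently replies with the placeholder line "0,0" on every exchange. If those two fields were later replaced with real readings, the p5 side could parse them roughly as follows. This is only a sketch under assumptions: the two-integer format is taken from the placeholder, while `parseArduinoLine` and the meaning of the fields are hypothetical.

```javascript
// Hypothetical helper: parses a line such as "0,0" into two integers.
// Returns null if the line is missing or malformed.
function parseArduinoLine(line) {
  if (line == null) {
    return null;
  }
  const parts = line.trim().split(",");
  if (parts.length !== 2) {
    return null;
  }
  const values = parts.map((s) => parseInt(s, 10));
  if (values.some((v) => Number.isNaN(v))) {
    return null;
  }
  return values;
}

console.log(parseArduinoLine("0,0\r\n")); // [ 0, 0 ]
console.log(parseArduinoLine("garbage")); // null
```

Returning `null` for malformed input lets the caller skip a bad line instead of acting on partial data.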

Video: