Exercise 1

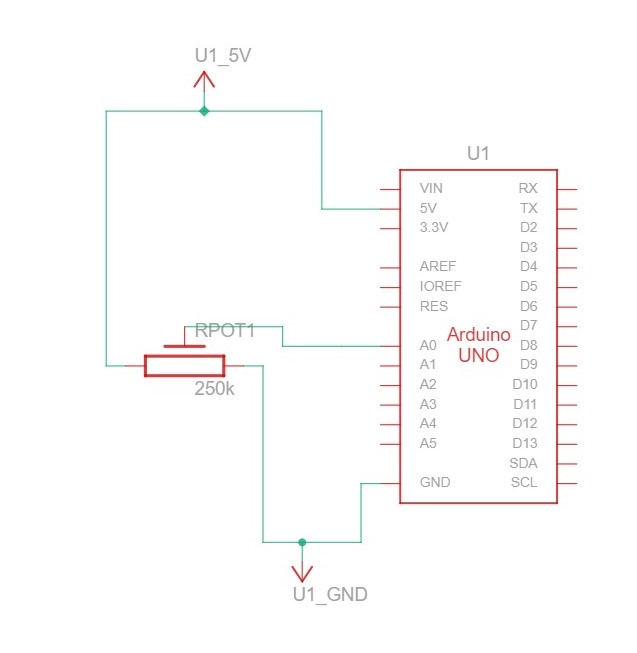

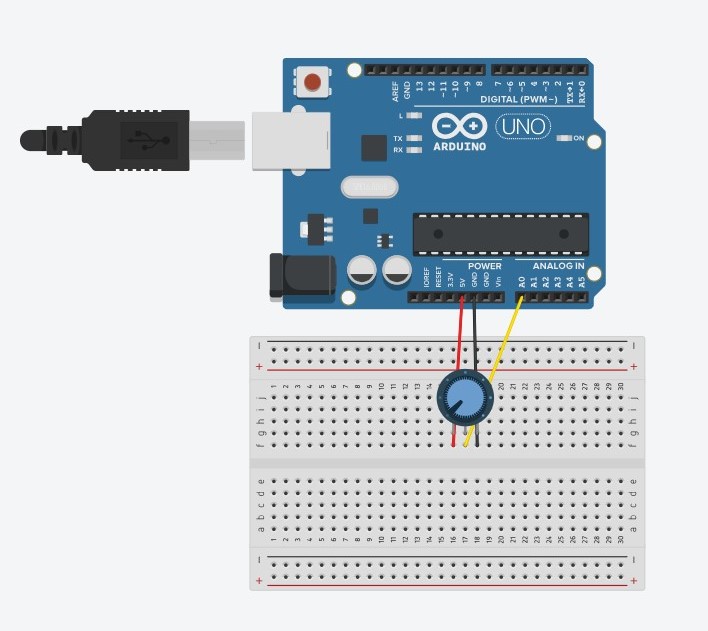

Concept

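A potentiometer on analog pin A0 controls the x-coordinate of the circle drawn in p5. The Arduino sends each raw reading (0 to 1023) as a line of text, and p5 maps it to a position between 0 and the canvas width.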
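p5's map() rescales a value linearly from one range to another. The plain-JavaScript sketch below reproduces that linear formula outside p5, so the reading-to-pixel conversion can be checked on its own. mapRange is a hypothetical name. The 640-pixel width matches the canvas created in setup().

```javascript
// Linear rescaling, the same formula p5's map() applies (without its optional clamping)
function mapRange(value, start1, stop1, start2, stop2) {
  return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
}

// A 10-bit analogRead() value (0-1023) becomes an x-coordinate on a 640-px-wide canvas
const canvasWidth = 640;
for (const reading of [0, 512, 1023]) {
  console.log(reading, "->", mapRange(reading, 0, 1023, 0, canvasWidth).toFixed(1));
}
```

Both ends of the sensor range land exactly on the canvas edges, so the circle's centre can reach x = 0 and x = width.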

Code

Arduino:

```cpp
void setup() {
  // Start serial communication so we can send data
  // over the USB connection to our p5js sketch
  Serial.begin(9600);

  // We'll use the builtin LED as a status output
  pinMode(LED_BUILTIN, OUTPUT);
}

void loop() {
  // reads the potentiometer on A0 (0-1023)
  int sensor = analogRead(A0);
  delay(5);

  // builtin LED on while data is being sent
  digitalWrite(LED_BUILTIN, HIGH);

  // sends the reading to p5 as one line of text
  Serial.println(sensor);

  // builtin LED off once the line has been queued
  digitalWrite(LED_BUILTIN, LOW);
}
```
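Serial.println() ends each reading with a carriage return and a line feed ("\r\n"). USB can deliver those bytes in arbitrary chunks, so the receiving side has to reassemble whole lines before parsing them. The sketch below shows one way to do that in plain JavaScript. LineBuffer is a hypothetical helper for illustration, not the web-serial library's actual implementation. It strips the leftover "\r", which is the character that trim() removes in the p5 code.

```javascript
// Hypothetical helper: collects text chunks and hands back complete lines
class LineBuffer {
  constructor() {
    this.pending = "";
  }

  // Appends a chunk and returns every line it completed, without the "\r\n" terminator
  push(chunk) {
    this.pending += chunk;
    const parts = this.pending.split("\n");
    // The last piece has not seen its newline yet; keep it for the next chunk
    this.pending = parts.pop();
    return parts.map((line) => line.replace(/\r$/, ""));
  }
}

// "1023\r\n" and "512\r\n" arriving split across three chunks
const buffer = new LineBuffer();
const lines = [
  ...buffer.push("10"),
  ...buffer.push("23\r\n5"),
  ...buffer.push("12\r\n"),
];
console.log(lines); // [ '1023', '512' ]
```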

p5:

```javascript
// Requires the web-serial library mentioned below, which defines
// serialActive, setUpSerial() and writeSerial() and calls readSerial()

// variable to control x-coordinate
let circleX = 0;

function setup() {
  createCanvas(640, 480);
  textSize(18);
}

function draw() {
  // sets background
  background(255);
  stroke(0);

  // draws circle on canvas
  // -- circleX (0-1023) is mapped between 0 and the width
  circle(map(circleX, 0, 1023, 0, width), height / 2, 50);

  // checks if serial communication has been established
  if (!serialActive) {
    text("Press Space Bar to select Serial Port", 20, 30);
  } else {
    text("Connected", 20, 30);
  }
}

// sets up serial connection
function keyPressed() {
  if (key == " ") {
    // important to have in order to start the serial connection!!
    setUpSerial();
  }
}

// This function will be called by the web-serial library
// with each new *line* of data. The serial library reads
// the data until the newline and then gives it to us through
// this callback function
function readSerial(data) {
  ////////////////////////////////////
  //READ FROM ARDUINO HERE
  ////////////////////////////////////
  if (data != null) {
    circleX = int(trim(data));
  }
}
```
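If a line ever arrives empty or garbled, int(trim(data)) yields NaN, and the circle can no longer be placed at a valid position. The defensive variant below keeps the previous value unless the line is a whole number in the 10-bit range. parseReading is a hypothetical name. Number.parseInt stands in for p5's int(). The 0 to 1023 limit assumes a board with a 10-bit ADC such as the Uno, matching the map() call in the sketch.

```javascript
// Hypothetical guard: returns the parsed reading, or the previous value if the line is unusable
function parseReading(line, previous) {
  const text = line.trim();
  // Accept only plain digit strings (no signs, spaces, or stray characters)
  if (!/^\d+$/.test(text)) {
    return previous;
  }
  const reading = Number.parseInt(text, 10);
  // A 10-bit analogRead() can only produce 0-1023
  return reading <= 1023 ? reading : previous;
}

let circleX = 0;
for (const line of ["512\r", "", "10x3", "2048", "1023"]) {
  circleX = parseReading(line, circleX);
}
console.log(circleX); // 1023
```

In readSerial(), the assignment would become circleX = parseReading(data, circleX).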

Video

Exercise 2

Concept

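The brightness of an LED on pin 5 is controlled from p5. The mouse's x-position (mouseX) is mapped from the canvas width to the range 0 to 255 and sent to the Arduino, which applies it with analogWrite().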
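In p5, mouseX can fall outside 0 to width once the pointer moves past the canvas edge. map() only clamps its result when its optional sixth argument (withinBounds) is true. Without it, the value sent to analogWrite() could leave the 0 to 255 range. The plain-JavaScript sketch below shows the clamped conversion on its own. mouseXToBrightness is a hypothetical name, and truncation toward zero stands in for p5's int().

```javascript
// Hypothetical helper: converts a mouse x-position into an 8-bit PWM value for analogWrite()
function mouseXToBrightness(mouseX, width) {
  const scaled = (mouseX / width) * 255;
  // Clamp to 0-255, then drop the fractional part
  return Math.trunc(Math.min(Math.max(scaled, 0), 255));
}

const width = 640;
for (const x of [-40, 0, 320, 640, 900]) {
  console.log(x, "->", mouseXToBrightness(x, width));
}
```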

Code

Arduino:

```cpp
// led pin number (pin 5 is PWM-capable on an Uno, as analogWrite() dimming requires)
int ledPin = 5;

void setup() {
  // Start serial communication so we can send data
  // over the USB connection to our p5js sketch
  Serial.begin(9600);

  // We'll use the builtin LED as a status output.
  // We can't use the serial monitor since the serial connection is
  // used to communicate to p5js and only one application on the computer
  // can use a serial port at once.
  pinMode(LED_BUILTIN, OUTPUT);
  pinMode(ledPin, OUTPUT);

  // checks if led works correctly
  digitalWrite(ledPin, HIGH);
  delay(1000);
  digitalWrite(ledPin, LOW);

  // start the handshake
  while (Serial.available() <= 0) {
    digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data
    Serial.println("0,0");           // send a starting message
    delay(300);                      // wait 1/3 second
    digitalWrite(LED_BUILTIN, LOW);
    delay(50);
  }
}

void loop() {
  // wait for data from p5 before doing something
  while (Serial.available()) {
    // sends a placeholder line; p5 answers every line with the next brightness value
    Serial.println("0,0");

    // led on while receiving data
    digitalWrite(LED_BUILTIN, HIGH);

    // gets value from p5
    int value = Serial.parseInt();

    // changes brightness of the led once the full line has arrived
    if (Serial.read() == '\n') {
      analogWrite(ledPin, value);
    }
  }

  // led off at end of reading
  digitalWrite(LED_BUILTIN, LOW);
}
```
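Serial.parseInt() skips leading characters that are not digits or a minus sign, reads the digits that follow, and stops at the first non-digit without consuming it. That is why the next Serial.read() can check for the '\n' terminator. The JavaScript model below imitates this on a string so the message framing can be tested off the board. It is a simplified, hypothetical model. The real function waits for the stream timeout (one second by default) and then returns 0 if no digits arrive.

```javascript
// Simplified, hypothetical model of Stream::parseInt() with its default settings:
// skip anything that is not a digit or '-', read the number, leave the terminator unread
function parseIntLike(buffer) {
  let i = 0;
  while (i < buffer.length && !/[0-9-]/.test(buffer[i])) {
    i++;
  }
  if (i === buffer.length) {
    // The real function would wait for its timeout here and then return 0
    return { value: 0, rest: "" };
  }
  let negative = false;
  if (buffer[i] === "-") {
    negative = true;
    i++;
  }
  let value = 0;
  while (i < buffer.length && /[0-9]/.test(buffer[i])) {
    value = value * 10 + Number(buffer[i]);
    i++;
  }
  return { value: negative ? -value : value, rest: buffer.slice(i) };
}

// p5 sends "127\n"; the Arduino reads 127 and then checks that the next byte is '\n'
const { value, rest } = parseIntLike("127\n");
const terminated = rest[0] === "\n";
console.log(value, terminated); // 127 true
```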

p5:

```javascript
// variable to hold led brightness value
let value = 0;

function setup() {
  createCanvas(640, 480);
  textSize(18);
}

function draw() {
  background(0);
  stroke(255);
  fill(255);

  // checks for state of serial communication
  if (!serialActive) {
    text("Press Space Bar to select Serial Port", 20, 30);
  } else {
    text("Connected", 20, 30);
  }
}

// sets up serial communication
function keyPressed() {
  if (key == " ") {
    // important to have in order to start the serial connection!!
    setUpSerial();
  }
}

// This function will be called by the web-serial library
// with each new *line* of data. The serial library reads
// the data until the newline and then gives it to us through
// this callback function
function readSerial(data) {
  if (data != null) {
    //////////////////////////////////
    //SEND TO ARDUINO HERE (handshake)
    //////////////////////////////////
    // mouseX is mapped to 0-255 before being transmitted; the last argument
    // (withinBounds) clamps the result when the mouse is outside the canvas
    value = int(map(mouseX, 0, width, 0, 255, true));
    let sendToArduino = value + "\n";
    writeSerial(sendToArduino);
  }
}
```
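Serial.begin(9600) sets the link to 9600 baud. With the default 8N1 framing, each byte costs 10 bit times (one start bit, eight data bits, one stop bit), which allows at most 960 bytes per second in each direction. The plain-JavaScript arithmetic below estimates the wire time of one Exercise 2 round trip. transmitMs is a hypothetical name.

The estimate ignores USB scheduling, browser latency and processing time, so real exchanges will be slower. It applies to boards such as the Uno, whose USB connection passes through a UART. On boards with native USB, the baud setting may not limit throughput at all.

```javascript
// With 8N1 framing each byte costs 10 bits on the wire: 1 start + 8 data + 1 stop
const BITS_PER_BYTE = 10;

// Time in milliseconds to send an ASCII string (one byte per character) at the given baud rate
function transmitMs(text, baud) {
  return (text.length * BITS_PER_BYTE * 1000) / baud;
}

// Exercise 2 round trip: the Arduino's "0,0" line plus p5's widest reply
const arduinoLine = "0,0\r\n"; // Serial.println() appends "\r\n"
const p5Line = "255\n";
const roundTripMs = transmitMs(arduinoLine, 9600) + transmitMs(p5Line, 9600);
console.log(roundTripMs.toFixed(3), "ms on the wire per exchange");
console.log(Math.floor(1000 / roundTripMs), "exchanges per second at most");
```

The same function gives 6.25 ms for a full-scale Exercise 1 reading ("1023\r\n"). The one-line-at-a-time handshake therefore caps how often the LED can be updated, regardless of the frame rate.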

Video

Exercise 3

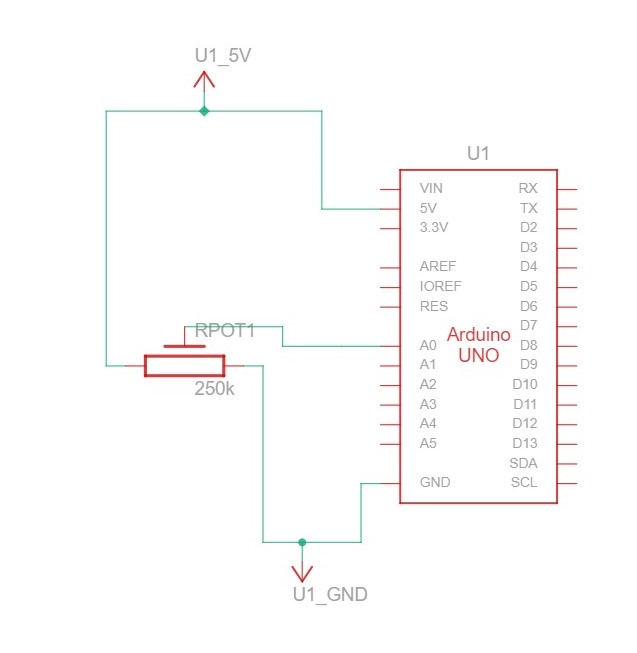

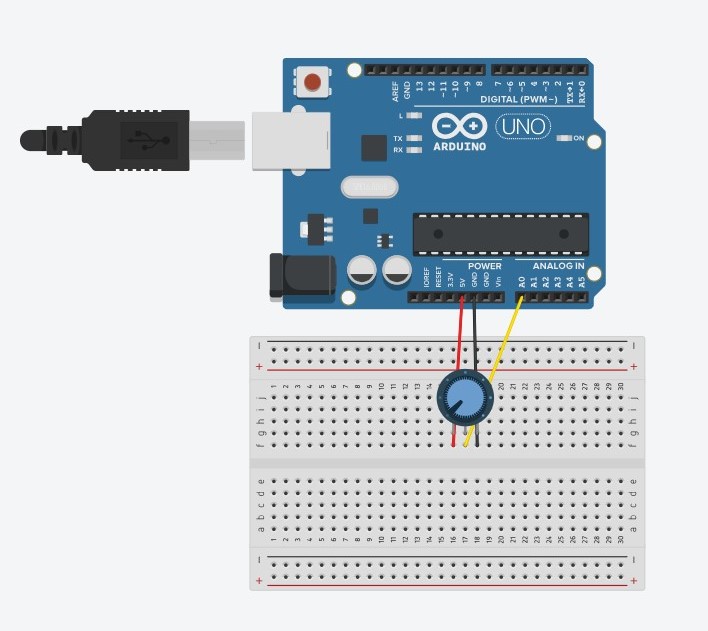

Concept

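An LED lights up whenever the ball bounces off the bottom of the canvas. A potentiometer controls the wind: readings in the lower half of its range (below 511) push the ball left, and readings in the upper half push it right.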
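The ball follows a simple per-frame update:

- Each force is divided by the mass (Newton's second law) and summed into an acceleration.
- The acceleration is added to the velocity, and the velocity to the position.
- Ground contact is detected when the ball's lower edge passes the bottom of the canvas.

The plain-JavaScript sketch below mirrors that update without p5, using plain objects in place of p5.Vector. makeBall and step are hypothetical names. With the sketch's values (mass 50, gravity force 0.5 × mass, a 360-pixel-high canvas), the ball first reaches the ground on the 37th simulated frame.

```javascript
// Plain-object stand-ins for p5.Vector; the starting values follow the Exercise 3 sketch
function makeBall(x, mass) {
  return { pos: { x, y: 0 }, vel: { x: 0, y: 0 }, acc: { x: 0, y: 0 }, mass };
}

// Newton's second law: a = F / m, accumulated over all forces applied this frame
function applyForce(ball, force) {
  ball.acc.x += force.x / ball.mass;
  ball.acc.y += force.y / ball.mass;
}

// Advances one frame; returns 1 if the ball touched the ground, otherwise 0
function step(ball, wind, gravity, height, drag = 1) {
  applyForce(ball, wind);
  applyForce(ball, gravity);
  ball.vel.x = (ball.vel.x + ball.acc.x) * drag;
  ball.vel.y = (ball.vel.y + ball.acc.y) * drag;
  ball.pos.x += ball.vel.x;
  ball.pos.y += ball.vel.y;
  ball.acc.x = 0;
  ball.acc.y = 0;
  if (ball.pos.y > height - ball.mass / 2) {
    ball.vel.y *= -0.9; // damped bounce, as in the sketch
    ball.pos.y = height - ball.mass / 2;
    return 1;
  }
  return 0;
}

const ball = makeBall(320, 50);
const gravity = { x: 0, y: 0.5 * ball.mass };
const wind = { x: 3, y: 0 }; // potentiometer in the upper half of its range
let frame = 0;
let touched = 0;
while (!touched) {
  frame++;
  touched = step(ball, wind, gravity, 360);
}
console.log(`first ground contact on frame ${frame}`); // first ground contact on frame 37
```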

Code

Arduino:

```cpp
// LED pin number
int ledPin = 5;

void setup() {
  // Start serial communication so we can send data
  // over the USB connection to our p5js sketch
  Serial.begin(9600);

  // We'll use the builtin LED as a status output.
  // We can't use the serial monitor since the serial connection is
  // used to communicate to p5js and only one application on the computer
  // can use a serial port at once.
  pinMode(LED_BUILTIN, OUTPUT);
  pinMode(ledPin, OUTPUT);

  // start the handshake
  while (Serial.available() <= 0) {
    digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data
    Serial.println("0");             // send a starting message
    delay(300);                      // wait 1/3 second
    digitalWrite(LED_BUILTIN, LOW);
    delay(50);
  }
}

void loop() {
  // wait for data from p5 before doing something
  while (Serial.available()) {
    // led on while receiving data
    digitalWrite(LED_BUILTIN, HIGH);

    // gets value from p5 (1 = ball touching the ground, 0 = in the air)
    int value = Serial.parseInt();

    // turns the led on or off depending on the value from p5
    if (Serial.read() == '\n') {
      if (value == 1) {
        digitalWrite(ledPin, HIGH);
      } else {
        digitalWrite(ledPin, LOW);
      }

      // gets potentiometer value
      int sensor = analogRead(A0);
      delay(5);

      // sends potentiometer value to p5
      Serial.println(sensor);
    }
  }

  // indicates end of reading
  digitalWrite(LED_BUILTIN, LOW);
}
```
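The Arduino forwards raw potentiometer readings, and the p5 sketch flips the wind at a single threshold of 511. If the knob rests near the middle of its travel, small fluctuations in the reading can make the wind switch direction from one exchange to the next. A common remedy is hysteresis: use two thresholds and keep the current direction while the reading stays between them.

The sketch below illustrates this in plain JavaScript. nextWind, LOW_EDGE and HIGH_EDGE are hypothetical names, and the 20-count margin on each side of 511 is an arbitrary assumption to be tuned by experiment.

```javascript
// Hypothetical thresholds around the sketch's single 511 cut-off; the 20-count margin is an assumption
const LOW_EDGE = 491;
const HIGH_EDGE = 531;

// Returns the new wind.x: switch direction only once the reading clearly leaves the middle band
function nextWind(reading, currentWind) {
  if (reading >= HIGH_EDGE) {
    return 3;
  }
  if (reading <= LOW_EDGE) {
    return -3;
  }
  return currentWind; // inside the band: keep blowing the same way
}

// Readings hovering around the midpoint, then a clear turn each way
const readings = [505, 512, 509, 514, 540, 515, 508, 480];
let windX = -3;
const history = [];
for (const reading of readings) {
  windX = nextWind(reading, windX);
  history.push(windX);
}
console.log(history.join(" ")); // -3 -3 -3 -3 3 3 3 -3
```

With the original single threshold, the same readings would flip the wind four times before the knob is clearly turned.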

p5:

```javascript
let velocity;
let gravity;
let position;
let acceleration;
let wind;
// 1 while the ball is touching the ground, 0 otherwise; sent to the Arduino
let value = 0;
let drag = 1;
let mass = 50;

function setup() {
  createCanvas(640, 360);
  noFill();
  position = createVector(width / 2, 0);
  velocity = createVector(0, 0);
  acceleration = createVector(0, 0);
  gravity = createVector(0, 0.5 * mass);
  wind = createVector(0, 0);
}

function draw() {
  background(255);
  stroke(0);
  fill(0);

  if (!serialActive) {
    text("Press Space Bar to select Serial Port", 20, 30);
  } else {
    text("Connected", 20, 30);

    applyForce(wind);
    applyForce(gravity);
    velocity.add(acceleration);
    velocity.mult(drag);
    position.add(velocity);
    acceleration.mult(0);
    ellipse(position.x, position.y, mass, mass);

    if (position.y > height - mass / 2) {
      velocity.y *= -0.9; // A little dampening when hitting the bottom
      position.y = height - mass / 2;
      // sets value to 1 to indicate touching the ground
      value = 1;
    } else {
      // sets value to 0 to indicate not touching the ground
      value = 0;
    }
  }
}

function applyForce(force) {
  // Newton's 2nd law: F = M * A
  // or A = F / M
  let f = p5.Vector.div(force, mass);
  acceleration.add(f);
}

function keyPressed() {
  // arrow keys are identified by keyCode; for them, key holds "ArrowUp"
  if (keyCode === UP_ARROW) {
    mass = random(15, 80);
    position.y = -mass;
    velocity.mult(0);
  }

  if (key == " ") {
    // important to have in order to start the serial connection!!
    setUpSerial();
  }
}

// This function will be called by the web-serial library
// with each new *line* of data. The serial library reads
// the data until the newline and then gives it to us through
// this callback function
function readSerial(data) {
  ////////////////////////////////////
  //READ FROM ARDUINO HERE
  ////////////////////////////////////
  if (data != null) {
    // splits the potentiometer range (0-1023) in half:
    // -- wind blows right for readings of 511 or more
    if (int(trim(data)) >= 511) {
      wind.x = 3;
    }
    // -- and left otherwise
    else {
      wind.x = -3;
    }

    //////////////////////////////////
    //SEND TO ARDUINO HERE (handshake)
    //////////////////////////////////
    let sendToArduino = value + "\n";
    writeSerial(sendToArduino);
  }
}
```
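In this sketch, value is overwritten on every frame. A bounce that lasts a single frame is therefore forwarded only if readSerial() happens to run before the next draw() resets value to 0. Whether that matters depends on how the serial exchange rate compares with the frame rate. If the LED flash seems to be missed, one option is to latch the contact until it has been sent.

In the sketch below, ContactLatch, hit() and take() are hypothetical names. draw() would call hit() on contact, and readSerial() would send take() instead of value.

```javascript
// Hypothetical latch: remembers a ground contact until it has been sent to the Arduino
class ContactLatch {
  constructor() {
    this.touched = false;
  }

  // Call from draw() whenever the ball hits the ground
  hit() {
    this.touched = true;
  }

  // Call from readSerial(): 1 if the ball touched the ground since the last send, then resets
  take() {
    const value = this.touched ? 1 : 0;
    this.touched = false;
    return value;
  }
}

// A bounce happens in one frame; the next frame finds the ball back in the air
const latch = new ContactLatch();
latch.hit(); // bounce frame
// (the following frame records nothing)
const sent = [latch.take(), latch.take()];
console.log(sent); // [ 1, 0 ]
```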

Video