Concept

Our assignment idea was sparked by a scenario every driver encounters: parking a car in reverse. While discussing how hard it is to judge the distance behind a car, my partner and I realized how hazardous reversing can be without a reliable aid. We both rely on the beeping sensor in our own cars for safe parking, so we set out to recreate that convenience ourselves. That reassuring beep is the inspiration behind our assignment: a beeping sensor and a warning light that mimic the parking aid we have come to depend on, demonstrated with a toy car.

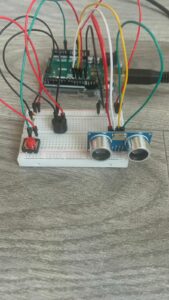

Required Hardware

– Arduino

– Breadboard

– Ultrasonic distance sensor

– Red LED

– 10 kΩ resistor

– Piezo speaker

– Jumper wires

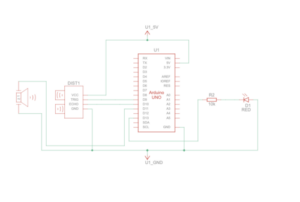

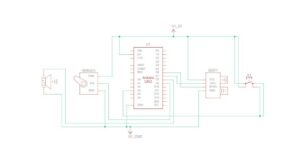

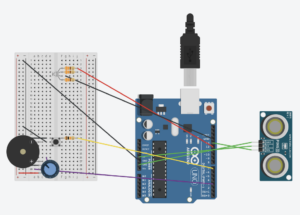

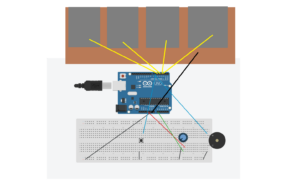

Setting Up the Components

Ultrasonic Distance Sensor Connections:

VCC to 5V

TRIG to digital pin 9

ECHO to digital pin 10

GND to GND on the Arduino

Speaker Connections:

Positive side to digital pin 11

Negative side to GND

LED Connections:

Cathode to GND

Anode to digital pin 13 via a 10 kΩ resistor

Coding the Logic

// Define pin numbers
const int trigPin = 9;
const int echoPin = 10;
const int buzzerPin = 11;
const int ledPin = 13;

// Define variables
long duration;       // echo round-trip time in microseconds
int distance;        // measured distance in centimetres
int safetyDistance;  // distance compared against the warning threshold

// Pitches in Hz for the notes C4 to C5 (C major scale)
int melody[] = {262, 294, 330, 349, 392, 440, 494, 523};

void setup() {
  pinMode(trigPin, OUTPUT); // Sets the trigPin as an output
  pinMode(echoPin, INPUT);  // Sets the echoPin as an input
  pinMode(buzzerPin, OUTPUT);
  pinMode(ledPin, OUTPUT);
  Serial.begin(9600);       // Starts the serial communication
}

void loop() {
  // Clear the trigPin
  digitalWrite(trigPin, LOW);
  delayMicroseconds(2);

  // Set the trigPin HIGH for 10 microseconds to send an ultrasonic burst
  digitalWrite(trigPin, HIGH);
  delayMicroseconds(10);
  digitalWrite(trigPin, LOW);

  // Read the echoPin; pulseIn() returns the sound wave travel time in
  // microseconds, or 0 if no echo arrives before its timeout
  duration = pulseIn(echoPin, HIGH);

  // Calculate the distance in cm: sound travels about 0.034 cm per
  // microsecond, and the echo covers the gap twice (out and back)
  distance = duration * 0.034 / 2;
  safetyDistance = distance;

  // Warn only on a valid reading (duration > 0) within 5 cm
  if (duration > 0 && safetyDistance <= 5) {
    // Play a musical note based on distance
    int index = map(safetyDistance, 0, 5, 0, 7); // Map distance to array index
    tone(buzzerPin, melody[index]);              // Play the note
    digitalWrite(ledPin, HIGH);
  } else {
    noTone(buzzerPin); // Stop the tone when not close
    digitalWrite(ledPin, LOW);
  }

  // Print the distance on the Serial Monitor
  Serial.print("Distance: ");
  Serial.println(distance);
}
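To make the distance arithmetic concrete, here is a minimal sketch of the same conversion written as plain C++ so it can be tried off the board. It assumes the 0.034 cm/µs factor from the code above (sound travelling at roughly 340 m/s). The helper names `echoToCentimeters` and `checkEchoExamples`, and the sample echo times, are our own illustration and are not part of the Arduino sketch.

```cpp
#include <cassert>

// Speed of sound used in the sketch: about 340 m/s = 0.034 cm per microsecond.
constexpr double kSoundCmPerMicrosecond = 0.034;

// Hypothetical helper: converts a round-trip echo time in microseconds into a
// one-way distance in whole centimetres. The cast truncates the fraction,
// just like assigning the result to an int does in the Arduino code.
int echoToCentimeters(unsigned long durationMicros) {
  return static_cast<int>(durationMicros * kSoundCmPerMicrosecond / 2);
}

// A few illustrative echo times (assumed values, not measurements).
void checkEchoExamples() {
  assert(echoToCentimeters(0) == 0);    // no travel time
  assert(echoToCentimeters(300) == 5);  // 5.10 cm truncates to 5
  assert(echoToCentimeters(350) == 5);  // 5.95 cm still truncates to 5
  assert(echoToCentimeters(360) == 6);  // 6.12 cm truncates to 6
  assert(echoToCentimeters(1200) == 20);
}
```

Because the result is truncated to a whole number, the `<= 5` check in the sketch is true for any object closer than about 6 cm, not just 5 cm.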

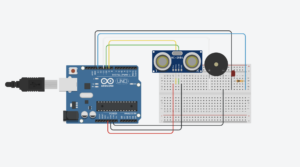

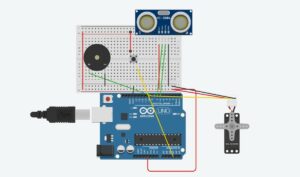

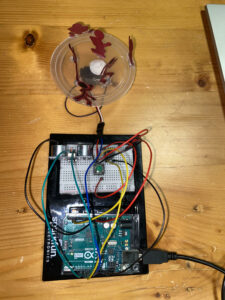

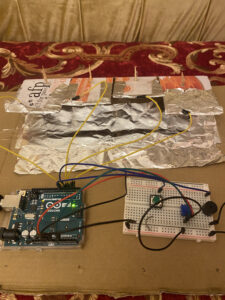

Hardware Implementation

Video Illustration (Initial idea with beeping sound)

Video Illustration 2 (using melody)

Working Explanation and Conclusion

The ultrasonic distance sensor on the breadboard measures the gap between itself and the toy car. When the distance drops to the threshold in our code (5 cm) or less, the buzzer plays a warning note and the red LED lights up, giving the driver a clear audible and visual cue to stop. This Arduino-based system combines simple hardware and software to address a common problem. Building it turned our initial conversation about reverse parking into a working prototype of a parking aid.
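The warning logic described above can be sketched off-board as follows. This is an illustration under stated assumptions rather than the code running on the Arduino: `mapRange` reproduces the integer formula used by Arduino's built-in `map()`, while `warningToneHz`, `kWarningDistanceCm`, `checkWarningTones`, and the convention of returning 0 for "no tone" are hypothetical names and choices of ours. The threshold is taken to be 5 cm, since the sketch's 0.034 cm/µs factor yields centimetres.

```cpp
#include <cassert>

// Same integer arithmetic as Arduino's map(), reproduced so this compiles
// without the Arduino core.
long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
  return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Note frequencies in Hz, copied from the melody array in the sketch.
const int kMelody[] = {262, 294, 330, 349, 392, 440, 494, 523};
const int kWarningDistanceCm = 5;  // threshold used in the sketch

// Hypothetical helper: returns the tone to play in Hz, or 0 when the object
// is outside the warning range (or the reading is negative).
int warningToneHz(int distanceCm) {
  if (distanceCm < 0 || distanceCm > kWarningDistanceCm) {
    return 0;
  }
  return kMelody[mapRange(distanceCm, 0, kWarningDistanceCm, 0, 7)];
}

void checkWarningTones() {
  assert(warningToneHz(0) == 262);  // index 0
  assert(warningToneHz(1) == 294);  // index 1
  assert(warningToneHz(2) == 330);  // index 2
  assert(warningToneHz(3) == 392);  // index 4 (21 / 5 rounds down)
  assert(warningToneHz(4) == 440);  // index 5
  assert(warningToneHz(5) == 523);  // index 7
  assert(warningToneHz(6) == 0);    // beyond the threshold: silent
}
```

Two things stand out from this mapping: the pitch gets lower as the car gets closer, and because six distances are spread over eight notes with integer division, the notes at indices 3 and 6 (349 Hz and 494 Hz) are never played.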