User Testing Experience

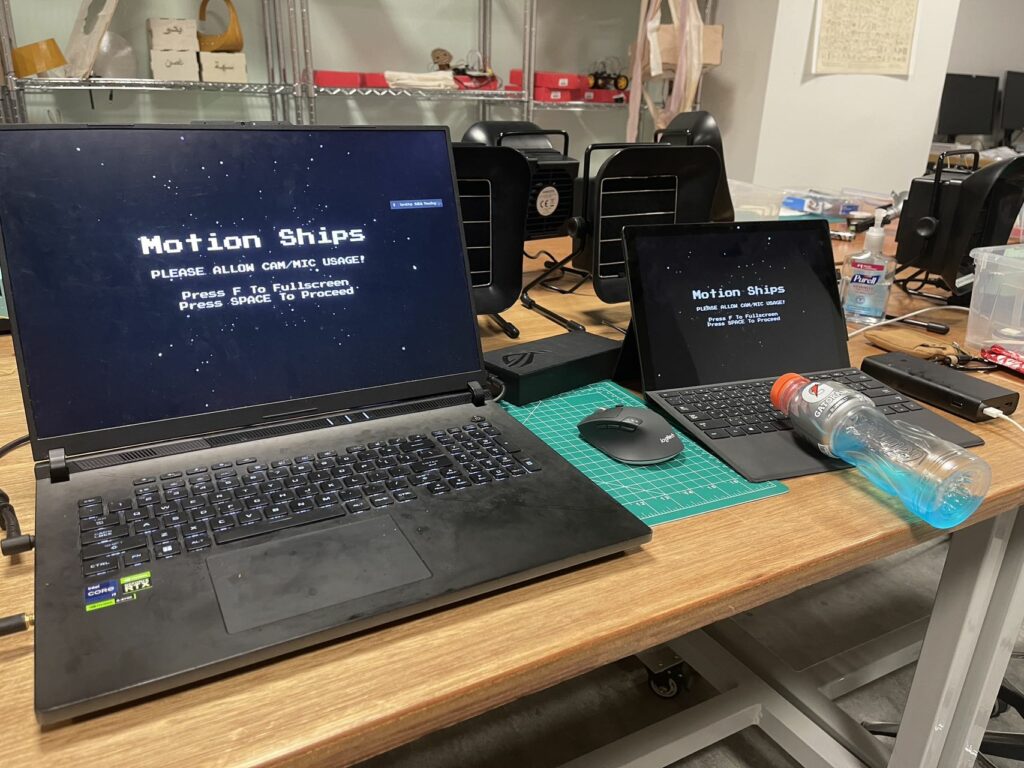

I ran a user test with a friend, setting up a scenario in which he had to use the clock from scratch. I fully reset the device and watched how he handled the initial setup and configuration.

Clock Setup

Clock Interaction

What I Observed

The first hurdle was the connection process. Because the clock has to join a WiFi network and sync with a time server, this step was challenging for a first-time user. At first, my friend did not understand that he had to connect to the clock’s temporary WiFi network before he could open the configuration interface. This showed me that the step needs a clearer explanation, or perhaps a simpler connection method.

Once past the connection stage, however, things went much more smoothly. The configuration interface proved intuitive: he quickly figured out how to adjust the display settings and customize the clock face. The color selection and brightness controls were especially straightforward, and he seemed to enjoy experimenting with different combinations.

Key Insights

The most interesting part was watching how he interacted with the hexagonal display. The unusual arrangement of the ping pong balls made him more curious about how the numbers would appear. He mentioned that comparing the different fonts was satisfying, especially in combination with the background lighting effects.

Areas for Improvement

The initial WiFi setup process definitely needs work. I’m considering adding a QR code on the device that leads directly to the configuration page, or otherwise streamlining the connection process. Basic instructions printed on the device itself could also help first-time users get through setup.
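As a rough illustration of the QR idea, assuming the clock’s temporary setup network is WPA-protected: many phone camera apps recognize the informal `WIFI:` payload convention (popularized by the ZXing barcode project), so a printed code could handle the "join the clock’s network" step that confused my friend. The helper names, network name and password below are hypothetical, and the resulting string would still need to be rendered as an image with a QR library.

```python
def escape_wifi_field(value: str) -> str:
    """Backslash-escape characters that are special in the WIFI: payload."""
    special = '\\;,":'
    return "".join("\\" + ch if ch in special else ch for ch in value)


def wifi_qr_payload(ssid: str, password: str, auth: str = "WPA") -> str:
    """Build the text to encode in a 'join this network' QR code."""
    if not password:
        # Open network: declare 'nopass' and omit the password field.
        return f"WIFI:T:nopass;S:{escape_wifi_field(ssid)};;"
    return (
        f"WIFI:T:{auth};S:{escape_wifi_field(ssid)};"
        f"P:{escape_wifi_field(password)};;"
    )


if __name__ == "__main__":
    # Hypothetical setup-network credentials for the clock.
    print(wifi_qr_payload("HexClock-Setup", "pingpong123"))
```

A separate QR code holding just the configuration page’s URL could sit next to it, so a new user scans once to join the network and once to open the settings.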

The good news is that once configured, the clock worked exactly as intended, and my friend found the interface for making adjustments quite user-friendly. This test helped me identify where I need to focus my efforts to make the project more accessible to new users while keeping the features that already work well.

Moving Forward

This testing session was incredibly valuable. It showed me that while the core functionality of my project works well, the initial user experience needs refinement. Going forward, I will focus in particular on making the setup process more intuitive for first-time users.