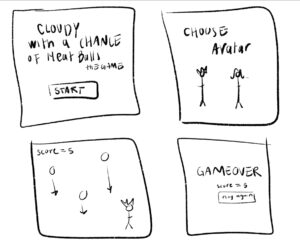

Inspiration

Anyone who knows me also knows that I’m a huge fan of the Harry Potter series, so I decided to use it as the base idea for this project involving text and data. In whatever way I could, I wanted to recreate some of the wonder and enchantment I feel every time I read one of the books or watch one of the movies. In the series, things often move and react in magical ways, which gave me the idea to animate words and make them interactive, almost as if they had a life of their own. Instead of static text on the screen, I imagined how fun it would be if the words could scatter, repel, and float, just like magic.

Concept

The idea I finally arrived at was a colorful word collage filled with randomly chosen Harry Potter vocabulary placed at random positions, so the arrangement looks different every time the code runs. When the user hovers near a word, it gets “pushed” away as if repelled by a magical force. When the screen is clicked, the words disintegrate into smaller particles, as though they are dissolving or being pulled apart by magic, which adds another layer of visual intrigue, like a spell at work. Once the words have completely broken down, the particles fall to the bottom of the screen and disappear. In their place, a meaningful Harry Potter quote appears. The quote stays visible for 6 seconds, giving the viewer a moment to absorb the message, before the entire cycle resets, creating a loop that gives the experience a continuous flow.
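The loop described above can be modeled as a small state machine. The sketch below is a hypothetical plain-JavaScript model, not the project's code: the phase names, `createCycle`, `handleClick`, `stepCycle`, and the `GRAVITY` value are all assumptions. It follows the concept as written (the quote appears once the particles are gone); in the `disintegrateWords()` code shown later, the quote timer actually starts at the moment of the click.

```javascript
// Hypothetical model of the loop: words -> falling particles -> quote (6 s) -> words.
// Phase names, GRAVITY and the particle shape are illustrative assumptions.
const QUOTE_DURATION_MS = 6000; // how long the quote stays on screen
const GRAVITY = 0.2;            // assumed downward acceleration per frame

function createCycle(canvasHeight) {
  return { phase: "words", particles: [], quoteEndTime: 0, canvasHeight };
}

// A click while the words are showing turns them into particles.
function handleClick(state, particles) {
  if (state.phase === "words") {
    state.particles = particles;
    state.phase = "particles";
  }
}

// Called once per frame with the current time in milliseconds.
function stepCycle(state, now) {
  if (state.phase === "particles") {
    for (const p of state.particles) {
      p.vy += GRAVITY; // gravity gradually turns an upward burst into a fall
      p.x += p.vx;
      p.y += p.vy;
    }
    // Particles that drop below the bottom edge disappear.
    state.particles = state.particles.filter((p) => p.y <= state.canvasHeight);
    if (state.particles.length === 0) {
      // Every particle is gone, so the quote takes their place.
      state.phase = "quote";
      state.quoteEndTime = now + QUOTE_DURATION_MS;
    }
  } else if (state.phase === "quote" && now >= state.quoteEndTime) {
    state.phase = "words"; // the cycle resets
  }
  return state;
}
```

Keeping the phase in one object makes the allowed transitions easy to see; in p5.js, `stepCycle` would naturally be called from `draw()` with `millis()`, and `handleClick` from `mousePressed()`.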

Implementation

This is how the project turned out!

Here are some of the most important parts of the code, which I’m especially proud of :))

1. Placing Words on the Canvas

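The first part of the project was figuring out how to place the words on the screen randomly. I wrote a function called placeWords() that tries to put each word at a random position, either horizontal or vertical, starting at a font size of 60. If the word would overlap a word that is already placed, the function picks a new random position and orientation and tries again with the font size reduced by 2, until the word fits or the size drops below 10. This way, each time the page is loaded, the words appear in different spots, making each experience unique.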

```javascript
// Function to place words randomly on the canvas
function placeWords() {
  placedWords = [];

  for (let i = 0; i < words.length; i++) {
    let word = words[i];
    let fontSize = 60;
    let success = false;

    // Trying to place the word until successful or until the font size is too small
    while (!success && fontSize >= 10) {
      let x = random(width);
      let y = random(height);
      let angle = random(1) > 0.5 ? 0 : HALF_PI;

      // Checking if the word can be placed at the given position, size, and angle without overlapping
      if (canPlaceWord(word, x, y, fontSize, angle)) {
        placedWords.push(createWord(word, x, y, fontSize, angle));
        success = true;
      } else {
        fontSize -= 2;
      }
    }
  }
}
```
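The post does not show `canPlaceWord()`. One common way to implement such a check is to approximate each word by an axis-aligned bounding box and reject a candidate that leaves the canvas or intersects any box already placed. The sketch below is hypothetical: `wordBox`, `boxesOverlap`, `canPlaceWordSketch`, the 0.6 character-width ratio, and the 4 px padding are assumptions, and words are assumed to be drawn centered on `(x, y)`. In p5.js, `textWidth()` would give the real width instead of the estimate.

```javascript
// Hypothetical overlap check using axis-aligned bounding boxes.
// Assumes words are drawn centered on (x, y) and each glyph is ~0.6 * fontSize wide.
const CHAR_WIDTH_RATIO = 0.6;
const PADDING = 4; // assumed minimum gap in pixels between neighboring words

function wordBox(word, x, y, fontSize, angle) {
  let w = word.length * fontSize * CHAR_WIDTH_RATIO;
  let h = fontSize;
  // A word rotated by HALF_PI (Math.PI / 2) swaps its width and height.
  if (Math.abs(angle - Math.PI / 2) < 1e-6) [w, h] = [h, w];
  return { left: x - w / 2, right: x + w / 2, top: y - h / 2, bottom: y + h / 2 };
}

function boxesOverlap(a, b) {
  return a.left < b.right + PADDING && b.left < a.right + PADDING &&
         a.top < b.bottom + PADDING && b.top < a.bottom + PADDING;
}

function canPlaceWordSketch(word, x, y, fontSize, angle, placedBoxes, canvasW, canvasH) {
  const box = wordBox(word, x, y, fontSize, angle);
  // Reject boxes that would stick out of the canvas.
  if (box.left < 0 || box.top < 0 || box.right > canvasW || box.bottom > canvasH) return false;
  // Reject boxes that collide with any word already placed.
  return placedBoxes.every((other) => !boxesOverlap(box, other));
}
```

Because every retry in `placeWords()` also shrinks the font, a box-based check like this lets a large word that keeps colliding gradually shrink into a gap.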

2. Animating Words

Next, I wanted the words to move away from the mouse, as if pushed by an invisible force. For every word except the stationary "Hogwarts", the code calculates the distance between the mouse and the word. If the mouse comes within 100 pixels, the word is pushed directly away from it, and the closer the mouse, the stronger the push. Once the mouse is farther away than that, the word eases back to its original spot.

```javascript
// Drawing "Hogwarts" word (stationary)
drawWord(hogwartsWord);

// Drawing all other placed words with repel effect
for (let word of placedWords) {
  // Calculating the horizontal/vertical distance between the mouse and the current word's position
  let dx = mouseX - word.currentX;
  let dy = mouseY - word.currentY;
  let distance = sqrt(dx * dx + dy * dy); // total Euclidean distance between the mouse and the word (Pythagoras' theorem)

  let maxDistance = 100;
  let repelStrength = 35; // strength of repelling force

  // If the word is within the repel range (closer than maxDistance).
  // distance > 0 avoids dividing by zero when the mouse sits exactly on the word.
  if (distance > 0 && distance < maxDistance) {
    // Calculating the repelling force, which grows as the mouse gets closer (closer = stronger force)
    let force = (1 - distance / maxDistance) * repelStrength;

    // Moving the word along the unit vector (dx, dy) / distance, away from the mouse
    word.currentX -= dx / distance * force;
    word.currentY -= dy / distance * force;
  } else {
    // If the word is outside the repel range, gradually moving it back to its original position using linear interpolation
    word.currentX = lerp(word.currentX, word.x, 0.1);
    word.currentY = lerp(word.currentY, word.y, 0.1);
  }
}
```
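To make the numbers concrete, here is the same repel-and-return logic as a plain JavaScript function that runs without p5.js. This is a sketch for illustration: `repelStep` and the constant names are hypothetical, the p5 globals `mouseX`/`mouseY` become parameters, `sqrt(dx*dx + dy*dy)` becomes `Math.hypot`, and `lerp` is re-implemented with p5's formula `start + (stop - start) * amt`.

```javascript
// Plain-JavaScript version of the repel/return step, one word at a time.
const MAX_DISTANCE = 100;  // same as maxDistance
const REPEL_STRENGTH = 35; // same as repelStrength
const RETURN_RATE = 0.1;   // same amount as the lerp(..., 0.1) calls

// Linear interpolation, matching p5's lerp(start, stop, amt).
function lerp(start, stop, amt) {
  return start + (stop - start) * amt;
}

function repelStep(word, mouseX, mouseY) {
  const dx = mouseX - word.currentX;
  const dy = mouseY - word.currentY;
  const distance = Math.hypot(dx, dy);
  if (distance > 0 && distance < MAX_DISTANCE) {
    // Force falls off linearly from REPEL_STRENGTH (touching) to 0 (at MAX_DISTANCE).
    const force = (1 - distance / MAX_DISTANCE) * REPEL_STRENGTH;
    word.currentX -= (dx / distance) * force;
    word.currentY -= (dy / distance) * force;
  } else {
    // Out of range (or exactly on top): ease 10% of the way back home each call.
    word.currentX = lerp(word.currentX, word.x, RETURN_RATE);
    word.currentY = lerp(word.currentY, word.y, RETURN_RATE);
  }
  return word;
}
```

With the post's settings, a mouse 50 px from a word gives force = (1 - 50/100) × 35 = 17.5, so the word moves 17.5 px away in that frame, while a mouse 90 px away moves it only 3.5 px. Because the push is applied every frame the mouse stays in range, words keep sliding until they are out of reach.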

3. Disintegrating Words

Finally, I wanted the words to break apart and float away, as if a spell had been cast on them. To do this, I turned each word into a set of particles that fly off when the screen is clicked. The number of particles is mapped from the word’s font size, so bigger words produce more particles, and each particle gets a random sideways and upward velocity to make the effect look more natural. The disintegration is accompanied by a famous Harry Potter quote, loaded from a file called quotes.txt, appearing on the screen.

```javascript
function disintegrateWords() {
  // Converting each word into particles
  for (let word of [hogwartsWord, ...placedWords]) {
    // Calculating the number of particles to generate based on the word's font size
    // Larger font sizes produce more particles (between 5 and 50 particles)
    let particleCount = map(word.fontSize, 10, 120, 5, 50);

    for (let i = 0; i < particleCount; i++) {
      // Pushing a new particle object into the particles array with initial properties
      particles.push({
        x: word.currentX,
        y: word.currentY,
        vx: random(-2, 2),
        vy: random(-5, -1),
        size: random(6, 10),
        color: word.color
      });
    }
  }

  // Clearing the words
  placedWords = [];

  // Selecting and displaying a random quote
  currentQuote = random(quotes);
  // Splitting the quote into lines of about 40 characters each
  currentQuote = splitQuoteIntoLines(currentQuote, 40);
  quoteStartTime = millis();
  quoteEndTime = quoteStartTime + quoteDisplayDuration;
}
```
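`disintegrateWords()` calls `splitQuoteIntoLines(currentQuote, 40)`, but that helper is not shown. A minimal greedy word-wrap sketch is below; it is a guess at the behavior, not the author's code. It assumes the helper should return a single string with lines joined by `"\n"`, since p5's `text()` draws each newline-separated line on its own row. A single word longer than `maxChars` is kept whole on its own line.

```javascript
// Hypothetical greedy word wrap: packs words onto a line until adding the
// next word would exceed maxChars, then starts a new line.
function splitQuoteIntoLines(quote, maxChars) {
  const words = quote.trim().split(/\s+/);
  const lines = [];
  let current = "";
  for (const word of words) {
    if (current === "") {
      current = word; // first word on a fresh line
    } else if (current.length + 1 + word.length <= maxChars) {
      current += " " + word; // the word (plus a space) still fits
    } else {
      lines.push(current); // line is full, start the next one
      current = word;
    }
  }
  if (current !== "") lines.push(current);
  return lines.join("\n");
}
```

For example, wrapping "It does not do to dwell on dreams and forget to live." at 20 characters yields the three lines "It does not do to", "dwell on dreams and", and "forget to live.".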

Reflections and Further Improvements

One thing I’d like to improve is the collision detection when placing words. Right now, words that have to shrink a lot sometimes end up in awkward spots. I’d like to refine the placement system so the words always look balanced on the screen. One option would be to crowd words more densely around the central word (Hogwarts) and have them spread out gradually toward the edges. I also think more magical effects, like words changing color or spinning, would enhance the overall experience.
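The idea of crowding words around Hogwarts could be tried by drawing candidate positions from a normal distribution centered on the canvas, instead of uniformly with `random(width)` and `random(height)`. This is a minimal sketch under assumptions: `centerBiasedPosition`, `standardNormal`, and the 20% spread are hypothetical, and Hogwarts is assumed to sit at the canvas center. Inside p5.js, the built-in `randomGaussian(mean, sd)` could replace the hand-written Box-Muller helper.

```javascript
// Hypothetical center-biased sampling for candidate word positions.
const SPREAD_FRACTION = 0.2; // assumed standard deviation as a fraction of canvas size

// Box-Muller transform: turns two uniform samples into one standard normal sample.
function standardNormal(rng) {
  let u = 0;
  while (u === 0) u = rng(); // avoid Math.log(0)
  const v = rng();
  return Math.sqrt(-2 * Math.log(u)) * Math.cos(2 * Math.PI * v);
}

function centerBiasedPosition(canvasW, canvasH, rng = Math.random) {
  const x = canvasW / 2 + standardNormal(rng) * canvasW * SPREAD_FRACTION;
  const y = canvasH / 2 + standardNormal(rng) * canvasH * SPREAD_FRACTION;
  // Clamp so a sample far out in the tail still lands on the canvas.
  return {
    x: Math.min(Math.max(x, 0), canvasW),
    y: Math.min(Math.max(y, 0), canvasH),
  };
}
```

Most samples land near the middle and thin out toward the edges, so combined with the existing shrink-until-it-fits loop, the words nearest Hogwarts would tend to be the densest.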

This project helped me better understand how interactive elements can transform a simple idea into something engaging and dynamic. I look forward to adding even more magical effects to this canvas in the future!

References

https://www.bloomsbury.com/uk/discover/harry-potter/fun-facts/harry-potter-glossary/