Concept

This started as a joke. A friend won a Jigglypuff plush at a fair, and we joked about how amazing it would be to attach wheels and a speaker to it, and that I should do it for my IM final project.

So, I have decided to do exactly that.

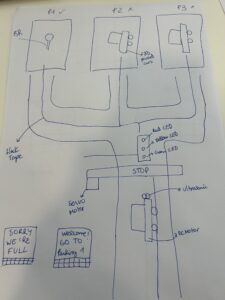

I want to build a moving robot with a Jigglypuff (a Pokemon) plush on top. It will use a gesture sensor to detect gestures and respond to them, and it can be controlled from a laptop through P5 (p5.js). A speaker will play sounds depending on the ‘mood’ Jigglypuff is currently in.

Some sensors and components I plan to experiment with to see what works:

- Infrared distance sensors (to check for collisions)

- Gesture sensors

- Speakers other than the buzzer

- Some kind of camera module (or a GoPro) that will stream video to P5

- Maybe a Makey Makey, so that Jigglypuff responds with sounds to certain touches (such as a pat on the head)

- Maybe a mini projector to project things on command
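For the infrared distance sensors, individual readings tend to be noisy, so one option is to average the last few readings before deciding whether the robot may keep driving forward. The sketch below assumes an Arduino-style microcontroller and that each reading has already been converted to centimetres (the conversion depends on the specific sensor's datasheet). The window size, the 20 cm threshold, and the names `DistanceFilter` and `canMoveForward` are hypothetical choices for illustration, not tested values.

```cpp
// Minimal sketch: smooth noisy IR distance readings with a moving average
// and decide whether the robot may keep moving forward.
// Assumption: readings are already in centimetres.

const int kWindow = 4;             // hypothetical: number of readings to average
const float kStopDistanceCm = 20;  // hypothetical: stop if closer than this

struct DistanceFilter {
  float samples[kWindow] = {0};
  int count = 0;  // how many slots hold real readings (at most kWindow)
  int next = 0;   // slot that the next reading will overwrite

  // Store a new reading (oldest is overwritten once the window is full)
  // and return the average of the readings currently stored.
  float add(float cm) {
    samples[next] = cm;
    next = (next + 1) % kWindow;
    if (count < kWindow) count++;
    float sum = 0;
    for (int i = 0; i < count; i++) sum += samples[i];
    return sum / count;
  }
};

// True when the smoothed distance leaves room to keep driving forward.
bool canMoveForward(float smoothedCm) {
  return smoothedCm > kStopDistanceCm;
}
```

In `loop()`, each `analogRead()` value would be converted to centimetres, passed to `add()`, and the motors stopped whenever `canMoveForward()` returns false. A larger window gives steadier readings but reacts more slowly to an obstacle that appears suddenly.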

Initial things to do

- Design a container for Jigglypuff. What kind of container would look good? A large 3D-printed bowl? A decorated wooden cart? The whole module needs a reliable design, and I would like to avoid sewing anything into the plush. Since the plush is round, placing components on top of it is not very practical.

- Write the code for moving the container.

- Test the gesture sensor and write if-else statements for each gesture.

- Attach a camera module to send its video feed to P5.

- Collect sound clips from the Pokemon series for Jigglypuff's different emotions, to be played in response to different gestures.

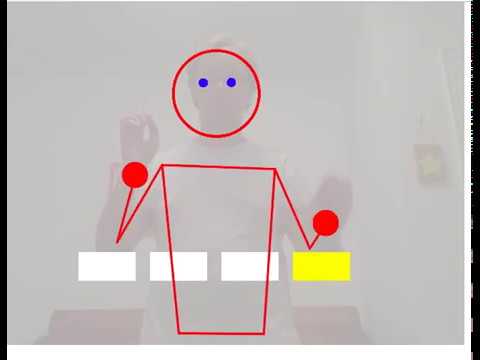

- Use PoseNet to detect poses?
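The gesture-to-mood logic described in this list can be kept in one place: each gesture updates Jigglypuff's mood, and each mood picks a sound clip. Below is a minimal sketch of that idea, written as a `switch` (equivalent to the planned if-else chain). The gesture names, the moods, and the clip filenames are all hypothetical placeholders; the real gesture set depends on which sensor ends up being used, and the clips would be whichever ones are collected from the series.

```cpp
// Hypothetical gestures; the real set depends on the chosen gesture sensor.
enum class Gesture { None, Up, Down, Left, Right };

// Hypothetical moods for Jigglypuff.
enum class Mood { Idle, Happy, Sleepy, Grumpy };

// Decide the next mood from a gesture.
// Unrecognised gestures (including None) keep the current mood.
Mood nextMood(Mood current, Gesture g) {
  switch (g) {
    case Gesture::Up:    return Mood::Happy;
    case Gesture::Down:  return Mood::Sleepy;
    case Gesture::Left:
    case Gesture::Right: return Mood::Grumpy;
    default:             return current;
  }
}

// Hypothetical clip names that P5 or a sound module would play for each mood.
const char* clipFor(Mood m) {
  switch (m) {
    case Mood::Happy:  return "happy.mp3";
    case Mood::Sleepy: return "lullaby.mp3";
    case Mood::Grumpy: return "grumpy.mp3";
    default:           return "";  // Idle: stay quiet
  }
}
```

Keeping the mood as explicit state, instead of mapping gestures straight to sounds, makes it easier to add behaviour later (for example, Jigglypuff staying grumpy until someone pats its head).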

Expected challenges

- Designing the container

- Connecting the video feed to P5

- Coding the movement controls in P5

- I may need multiple microcontrollers for the video feed if connecting to a GoPro does not work

- Finding the sound clips and a speaker that can play them
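One way to keep the P5 movement controls simple is to have P5 send a single character per command over serial and let the microcontroller turn that character into motor speeds. The sketch below shows the microcontroller side under several assumptions: a two-motor (differential drive) base, the command letters `F`, `B`, `L`, `R`, `S`, a speed of 180, and the names `MotorSpeeds` and `commandToSpeeds` are all hypothetical. It also refuses to drive forward when the path is blocked, so it can be combined with the collision sensors.

```cpp
// Signed speeds for the two drive motors: positive = forward, negative = backward.
// The magnitude (0-255) would go to analogWrite() and the sign to the
// motor driver's direction pins.
struct MotorSpeeds {
  int left;
  int right;
};

const int kSpeed = 180;  // hypothetical cruising speed

// Translate a one-character command from P5 into motor speeds.
// Forward is only allowed when pathClear is true; unknown characters stop the robot.
MotorSpeeds commandToSpeeds(char cmd, bool pathClear) {
  switch (cmd) {
    case 'F': return pathClear ? MotorSpeeds{kSpeed, kSpeed} : MotorSpeeds{0, 0};
    case 'B': return {-kSpeed, -kSpeed};
    case 'L': return {-kSpeed, kSpeed};  // spin in place to the left
    case 'R': return {kSpeed, -kSpeed};  // spin in place to the right
    default:  return {0, 0};             // 'S' or anything unexpected
  }
}
```

On an Arduino, `loop()` could check `Serial.available()`, read one character with `Serial.read()`, and apply the returned speeds. Defaulting to a stop for unexpected input means a dropped or garbled serial byte leaves the robot stationary instead of driving off.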