Concept and Inspiration

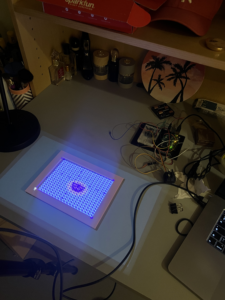

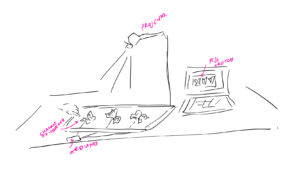

Settling on an overall direction for the final project was easy, as I had decided early on to revisit the butterfly motif in different ways throughout the course. For the concept itself, I wanted to experiment with something centered on interaction and visuals in an installation-like manner, and to explore a mode of design I had not yet tapped into. After many rounds of deliberation and conversations with Professor Aaron, I settled on creating a small piece centered around a mechanical butterfly that flutters when touched. The butterfly would be mounted atop a physical canvas, onto which p5-generated animations would be mapped and projected. The idea was to create a cohesive piece, with the hardware and the software working hand in hand to bring a butterfly to life.

The mechanical butterfly is built from two servo motors, with one moving at an angle supplementary to that of the other. The butterfly wings are printed on paper, laminated, cut, and attached to the servo motor blades. The butterfly “senses” touch through its antennas, which are made of stripped wires twisted into shape and connected to a capacitive touch sensor. The base is a box, which I wrapped in multiple layers of white paper and decorated with flowers (so that the butterfly appears to be in a flower field), with an opening for the Arduino and the circuit.

Interaction Design

For this piece, I wanted to focus on designing a relatively simple interaction well. The name I chose for the piece, “Pet-A-Butterfly,” would be displayed to the user and act as a signifier to touch the butterfly. The antennas are intentionally placed opposite the user to maximize the probability that the user brushes against the wires even if they do not realize that the antennas are meant to be touched. The user interacts with the piece by touching the butterfly antennas. Once touched, the butterfly wings flap, and a kaleidoscope of small, projected p5-generated butterflies emerges from beneath the butterfly and moves outward in a coordinated spiral motion.

Implementation

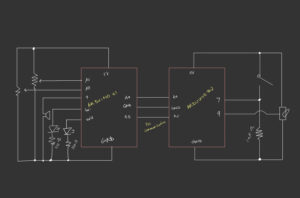

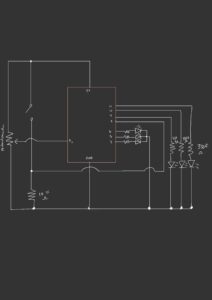

Arduino

The Arduino program reads the sensor through the touched() method, which returns an 8-bit value representing the touch state of all pins, and sends it to the p5 sketch over serial communication. The program also receives the current status of the butterfly movement from the p5 sketch. If the status is 1 (the butterfly is moving), the servo positions are updated every interval milliseconds (100 ms in the sketch below). The motor angles are kept roughly within the range [25, 50]; because the position changes in steps of 10, it can overshoot these bounds by one step. The direction of each motor’s movement reverses at either end of the range to produce the flapping movement. The Arduino program also sends the current servo position to the p5 sketch, so that the sketch only stops the butterfly animation when the servos are at the 50-degree position, which ensures the flapping stops when the wings are maximally spread.
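As a small illustration of what the 8-bit touch value encodes, here is a minimal JavaScript sketch that decodes it into channel numbers. The function name touchedChannels is hypothetical, and the sketch assumes that bit 0 corresponds to channel 1, as in the Adafruit example code. The project itself does not need this decoding, since it treats any non-zero value as a touch.

```javascript
// Decode an 8-bit touch mask into the list of touched channel numbers (1-8).
// Assumption: bit 0 maps to channel 1, bit 7 to channel 8.
function touchedChannels(mask) {
  const channels = [];
  for (let bit = 0; bit < 8; bit++) {
    if (mask & (1 << bit)) {
      channels.push(bit + 1);
    }
  }
  return channels;
}

// A mask of 5 (binary 00000101) means channels 1 and 3 are touched.
console.log(touchedChannels(5));
// A mask of 0 means nothing is touched, so the list is empty.
console.log(touchedChannels(0));
```

Checking only whether the value is non-zero, as the p5 sketch does, is enough here because it does not matter which antenna wire was touched.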

Below is the full Arduino sketch:

/***************************************************

This is a library for the CAP1188 I2C/SPI 8-chan Capacitive Sensor

Designed specifically to work with the CAP1188 sensor from Adafruit

----> https://www.adafruit.com/products/1602

These sensors use I2C/SPI to communicate, 2+ pins are required to

interface

Adafruit invests time and resources providing this open source code,

please support Adafruit and open-source hardware by purchasing

products from Adafruit!

Written by Limor Fried/Ladyada for Adafruit Industries.

BSD license, all text above must be included in any redistribution

****************************************************/

#include <Wire.h>

#include <SPI.h>

#include <Adafruit_CAP1188.h>

#include <Servo.h>

// Reset Pin is used for I2C or SPI

#define CAP1188_RESET 9

// CS pin is used for software or hardware SPI

#define CAP1188_CS 10

// These are defined for software SPI, for hardware SPI, check your

// board's SPI pins in the Arduino documentation

#define CAP1188_MOSI 11

#define CAP1188_MISO 12

#define CAP1188_CLK 13

#define CAP1188_SENSITIVITY 0x1F

// For I2C, connect SDA to your Arduino's SDA pin, SCL to SCL pin

// On UNO/Duemilanove/etc, SDA == Analog 4, SCL == Analog 5

// On Leonardo/Micro, SDA == Digital 2, SCL == Digital 3

// On Mega/ADK/Due, SDA == Digital 20, SCL == Digital 21

// Use I2C, no reset pin!

Adafruit_CAP1188 cap = Adafruit_CAP1188();

// Or...Use I2C, with reset pin

//Adafruit_CAP1188 cap = Adafruit_CAP1188(CAP1188_RESET);

// Or... Hardware SPI, CS pin & reset pin

// Adafruit_CAP1188 cap = Adafruit_CAP1188(CAP1188_CS, CAP1188_RESET);

// Or.. Software SPI: clock, miso, mosi, cs, reset

//Adafruit_CAP1188 cap = Adafruit_CAP1188(CAP1188_CLK, CAP1188_MISO, CAP1188_MOSI, CAP1188_CS, CAP1188_RESET);

// make a servo object

Servo servoRight;

Servo servoLeft;

// servo position

int position=50;

// direction of wing movement

boolean direction = true;

unsigned long previousMillis = 0;

const long interval = 100; // interval between each wing flap in milliseconds

void setup() {

Serial.begin(9600);

Serial.println("CAP1188 test!");

// Initialize the sensor, if using i2c you can pass in the i2c address

if (!cap.begin(0x28) && !cap.begin()) {

// halt if the sensor is not found at 0x28 or at the library's default address

while (1);

}

cap.writeRegister(CAP1188_SENSITIVITY, 0x5F);

// attach the servos to pins 11 and 5

servoRight.attach(11);

servoLeft.attach(5);

// write the initial position

servoRight.write(180 - position);

servoLeft.write(position);

// start the handshake

pinMode(LED_BUILTIN, OUTPUT);

while (Serial.available() <= 0) {

digitalWrite(LED_BUILTIN, HIGH); // on/blink while waiting for serial data

Serial.println("0"); // send a starting message

delay(300); // wait 1/3 second

digitalWrite(LED_BUILTIN, LOW);

delay(50);

}

}

void loop() {

// wait for data from p5 before doing something

while (Serial.available()) {

uint8_t touched = cap.touched();

int isMoving = Serial.parseInt(); // check if butterfly is still moving

Serial.print(touched);

Serial.print(',');

if (isMoving == 1) {

unsigned long currentMillis = millis();

// check if it's time to update the wing position

if (currentMillis - previousMillis >= interval) {

// move servos to simulate wing flapping motion

if (direction) {

position += 10;

if (position >= 50) { // flip direction when max angle is reached

direction = false;

}

} else {

position -= 10;

if (position <= 25) {

direction = true;

}

}

// move servos in opposite directions

servoRight.write(180-position);

servoLeft.write(position);

previousMillis = currentMillis;

}

}

Serial.println(position); // send servo position to p5 sketch

}

digitalWrite(LED_BUILTIN, LOW);

}
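To trace the angle sequence without the hardware, the stepping logic in loop() can be mirrored in a few lines of JavaScript. This is only a simulation sketch: stepWing and simulate are hypothetical names, and each call stands for one elapsed interval while the butterfly is moving.

```javascript
// Mirror of the position-stepping logic in loop(): one call per elapsed interval.
function stepWing(state) {
  let { position, direction } = state;
  if (direction) {
    position += 10;
    if (position >= 50) direction = false; // flip at the upper bound
  } else {
    position -= 10;
    if (position <= 25) direction = true; // flip at the lower bound
  }
  // The right servo gets the supplementary angle, the left servo the angle itself.
  return { position, direction, right: 180 - position, left: position };
}

// Start from the same initial state as the Arduino sketch and record positions.
function simulate(steps) {
  let state = { position: 50, direction: true };
  const positions = [];
  for (let i = 0; i < steps; i++) {
    state = stepWing(state);
    positions.push(state.position);
  }
  return positions;
}

console.log(simulate(10).join(" ")); // 60 50 40 30 20 30 40 50 40 30
```

The trace shows the position reaching 60 once at the start and dipping to 20 on each cycle, and returning to 50 every six steps, which is the value the p5 sketch waits for before stopping the animation.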

P5

The p5 sketch is mainly responsible for triggering the animation of the smaller butterflies and for performing projection mapping, which ensures that the canvas of the sketch can always be calibrated to fit the surface of the physical box. For the latter, I used the p5.mapper library to create a quad map that can be calibrated dynamically to match the proportions of the box’s surface. Pressing the ‘c’ key toggles calibration, during which the map’s points can be moved into place. This eliminated the challenge of aligning the projector height consistently across locations and of manually configuring the sketch’s canvas dimensions to match the surface. After calibration, pressing the ‘s’ key saves the map to a JSON file, which is loaded on every program run. This code snippet of the setup() function shows how to initialize a map object and load an existing map configuration.

function setup() {

createCanvas(windowWidth, windowHeight, WEBGL);

// create mapper object

pMapper = createProjectionMapper(this);

quadMap = pMapper.createQuadMap(mapWidth, mapHeight);

// loads calibration in the "maps" directory

pMapper.load("maps/map.json");

// initialize objects

bigButterfly = new Butterfly(

centerX,

centerY,

null,

null,

null,

null,

null,

false,

false,

null,

null,

false

); // dummy butterfly object simulating the state of the physical butterfly

interaction = new Interaction(); // an interaction object that handles all interaction-related animations

// play background music in loop

backgroundMusic.loop();

}

To implement the animation, I created an Interaction class that starts and displays the animation of the butterflies in a method called play(). This method is passed as the argument to displaySketch, a p5.mapper function that displays the sketch only within the map’s bounds.

// class that controls the animation trigger by the interaction

class Interaction {

constructor() {

this.bigButterfly = bigButterfly; // the butterfly object containing information about the physical butterfly in the center

this.smallButterflies = []; // array that stores the smaller butterflies whose animation is triggered and displayed when a signal is received from Arduino

this.numButterflies = 100; // number of small butterflies

this.inTheCenter = this.numButterflies; // number of butterflies in the center

// initialize randomly colored butterfly objects and append to the smallButterflies array

let randomNum;

for (let i = 0; i < this.numButterflies; i++) {

randomNum = random([1, 2, 3]);

if (randomNum == 1) {

this.smallButterflies.push(

new SmallButterfly(

centerX,

centerY,

smallButterflySpritesheet2,

4,

10,

0,

3,

true,

false,

null,

null,

false

)

);

}

else if (randomNum == 2){

this.smallButterflies.push(

new SmallButterfly(

centerX,

centerY,

smallButterflySpritesheet1,

4,

10,

0,

5,

true,

false,

null,

null,

false

)

);

}

else if (randomNum == 3){

this.smallButterflies.push(

new SmallButterfly(

centerX,

centerY,

smallButterflySpritesheet3,

4,

10,

0,

13,

true,

false,

null,

null,

false

)

);

}

}

}

play(pg) {

/* method that controls the sketch display;

passed to the mapper's displaySketch function

*/

pg.clear();

pg.push();

pg.background(color("#B2D2A2"));

// display instructions text only before connecting to serial

if (textShow){

pg.push()

pg.fill(color("#2c4c3b"))

pg.textFont(font);

pg.textAlign(CENTER);

pg.textSize(16)

pg.text(textString, centerX+20, centerY+150);

pg.pop()

}

// display butterflies

for (let i = 0; i < interaction.numButterflies; i++) {

pg.push();

let angle = radians(180);

pg.translate(

interaction.smallButterflies[i].x,

interaction.smallButterflies[i].y

);

pg.rotate(angle); // rotate butterflies 180 degrees --> better visibility for the user

if (interaction.smallButterflies[i].moving) { // display the small butterfly if it's moving

pg.image(interaction.smallButterflies[i].show(), 0, 0, 40, 40);

interaction.smallButterflies[i].move(); // update movement of butterflies

}

pg.pop();

}

pg.push();

// ellipse enclosing projected surface area of the physical butterfly

pg.fill(color("#B2D2A4"));

// pg.fill(color("black"))

pg.noStroke();

// pg.ellipse(215, 180, butterflyWidth, butterflyHeight)

pg.pop();

// stop butterfly from moving after a set time has elapsed and only if the

// position of the servo is in the right direction

if (millis() - movementTime >= interval && servoPos == 50) {

bigButterfly.moving = false;

}

}

}

The small butterflies follow a spiral-like path that starts at the physical butterfly and winds outward around it. The path is implemented in a method of the SmallButterfly class, which inherits from a parent Butterfly class. Here is a code snippet showing the implementation of the path movement in the SmallButterfly class:

move() {

// update the step of the animation

if (frameCount % this.animationSpeed == 0) {

this.step = (this.step + this.animationDir * 1) % this.numSpritesCol;

}

// control the direction of the sprite movement as spritesheet must be traversed back and forth to display correct movement

if (this.step == 0) {

this.animationDir = 1;

} else if (this.step == this.numSpritesCol - 1) {

this.animationDir = -1;

}

// update the x and y positions based on the current angle and radius

this.x = centerX + cos(this.angle)* this.radius + random(-0.5,0.5);

this.y = centerY + sin(this.angle)* this.radius + random(-0.5,0.5);

this.angle += this.angleSpeed; // increment angle to move the butterfly along a circular path

this.radius += this.radiusSpeed; // increment the radius to move the butterfly outward

// move back to center if butterfly exceeds the bounds

if (this.x < minX || this.y < minY || this.x > maxX || this.y > maxY) {

this.x = centerX;

this.y = centerY;

interaction.inTheCenter += 1; // butterfly is now counted as being in the center

this.moving = false; // stop butterfly from moving

// update angle and radius speed parameters to random values

this.angleSpeed = random(-0.02, 0.02);

this.radiusSpeed = random(0.5,1.2);

this.angle = 0;

this.radius = 0;

}

// flip butterfly direction depending on location in the sketch

if (this.x < centerX && this.sprites.length > 1) {

this.dir = 1;

} else {

this.dir = 0;

}

}
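The spiral comes from increasing the angle and the radius together on every frame. The following JavaScript sketch isolates that update in a pure function, leaving out the random jitter and the sprite handling so the path is deterministic. The name spiralPath and the parameter values in the usage lines are illustrative assumptions, not values taken from the project.

```javascript
// Deterministic version of the spiral update in move(), without random jitter.
// Each step places the point at (angle, radius) in polar coordinates around
// the center, then increments both the angle and the radius.
function spiralPath(centerX, centerY, angleSpeed, radiusSpeed, steps) {
  const points = [];
  let angle = 0;
  let radius = 0;
  for (let i = 0; i < steps; i++) {
    points.push({
      x: centerX + Math.cos(angle) * radius,
      y: centerY + Math.sin(angle) * radius,
    });
    angle += angleSpeed; // move along a circular path
    radius += radiusSpeed; // move outward
  }
  return points;
}

// Illustrative values: a center of (200, 200) and speeds within the ranges
// that move() picks from when it resets a butterfly.
const path = spiralPath(200, 200, 0.02, 1, 100);
console.log(path[0]); // starts exactly at the center
console.log(path[99]); // 99 units away from the center
```

Because angleSpeed is drawn from a range that includes negative values, some butterflies spiral clockwise and others counterclockwise, while a positive radiusSpeed always carries them outward.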

When the p5 sketch receives the touch state and servo position from Arduino and the touch state indicates a touch, it sets the moving attribute of both the butterfly object simulating the physical butterfly in the sketch and the small butterflies to true. It also starts the timer, as the physical butterfly should only stop moving after 6 seconds have elapsed and if the servos are in the right position:

function readSerial(data) {

////////////////////////////////////

//READ FROM ARDUINO HERE

////////////////////////////////////

if (data != null) {

// make sure there is actually a message

let fromArduino = data;

// if the right length, then proceed

if (fromArduino.length > 0) {

// get value only when data sent from arduino is greater than 0

fromArduino = split(trim(fromArduino), ",");

touchSensorVal = int(fromArduino[0]); // get touch sensor val

servoPos = int(fromArduino[1]); // get servo pos

if (touchSensorVal >= 1) { // if sensor is touched, set the bigButterfly moving attribute to true

interaction.bigButterfly.moving = true;

movementTime = millis(); // record starting movement time

interaction.inTheCenter = 0;

// move smaller butterflies

for (let i = 0; i < interaction.numButterflies; i++) {

interaction.smallButterflies[i].moving = true;

}

}

}

//////////////////////////////////

//SEND TO ARDUINO HERE (handshake)

//////////////////////////////////

let sendToArduino;

if (interaction.bigButterfly.moving == true) {

sendToArduino = 1 + "\n"; // send 1 to Arduino if the butterfly is moving

} else {

sendToArduino = 0 + "\n"; // send 0 to Arduino if the butterfly is done with its animation

}

writeSerial(sendToArduino);

}

}
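The message format and the stop condition can also be expressed as small pure functions, which makes them easy to check without a serial connection. The sketch below is a variation on the code above, not a copy of it: the names parseArduinoLine, shouldStop, and replyFor are hypothetical, the validation of malformed lines is an addition, and the 6000 ms interval is an assumption based on the 6 seconds mentioned earlier.

```javascript
// Parse one "touch,servoPos" line sent by the Arduino.
// Returns null for lines that do not have exactly two numeric fields,
// such as the startup message or the single "0" sent during the handshake.
function parseArduinoLine(line) {
  const parts = line.trim().split(",");
  if (parts.length !== 2) return null;
  const touch = parseInt(parts[0], 10);
  const servoPos = parseInt(parts[1], 10);
  if (Number.isNaN(touch) || Number.isNaN(servoPos)) return null;
  return { touch, servoPos };
}

// The butterfly stops only when enough time has elapsed AND the servos
// report the fully spread position (50).
function shouldStop(now, movementTime, interval, servoPos) {
  return now - movementTime >= interval && servoPos === 50;
}

// Reply sent back to the Arduino: "1\n" while moving, "0\n" otherwise.
function replyFor(moving) {
  return (moving ? 1 : 0) + "\n";
}

console.log(parseArduinoLine("4,50\r\n")); // touch value 4, servo position 50
console.log(parseArduinoLine("CAP1188 test!")); // null, not a data line
console.log(shouldStop(7000, 1000, 6000, 40)); // false, wings not fully spread
```

Keeping both conditions in shouldStop reflects the design described above: the timer alone could stop the wings mid-flap, so the servo position acts as a second gate.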

Here is an embedding of the full sketch (you can press the ‘d’ key to play the animation without the signal from Arduino):

Reflections and Parts I am Proud of

My biggest concern going into this, especially as I was going to employ projection mapping, was that I would be unable to align the p5 sketch and the physical butterfly in a cohesive manner that still looked visually pleasing. I am, thus, proud that the final product resembles what I had envisioned. I also spent a lot of time thinking about the proper mechanism to automate the wing-flapping motion and where and how to place the wings. I experimented with several methods, such as attaching a vertical straw or wooden stick from the middle of the wings to the servo blades, so that the blades would tug on the wings to move them up and down. When that did not work well, I switched to simply attaching each wing to a blade, which, in hindsight, is what I should have tried first. I also love the detail of connecting the butterfly to the sensor through antenna-like wires, resembling the sensing mechanisms of an actual butterfly (thanks to Professor Aaron for pointing this out). Finally, I am proud that I managed to properly calibrate the sensitivity of the touch sensor, as it was initially too sensitive, sometimes detecting signals even when untouched. Keeping the sensitivity in check was a major challenge that I thankfully was able to overcome to keep the interaction consistent.

Areas for Future Improvements

I think the project could be enhanced in many ways. Because I spent much of my time putting the interface together, one area for future improvement is the p5-generated animation itself. For example, different path movements could be triggered with every touch. I had initially wanted to map an actual animated butterfly from p5 onto a blank butterfly-shaped cutout, moved by the servos in the same way. Because of difficulties in mapping the software animation to the movement of the hardware, I pivoted toward making the central butterfly entirely physical. One improvement to explore is returning to that direction by adding physical objects, like flowers, to the surface of the box and mapping simpler, more limited animations onto them.