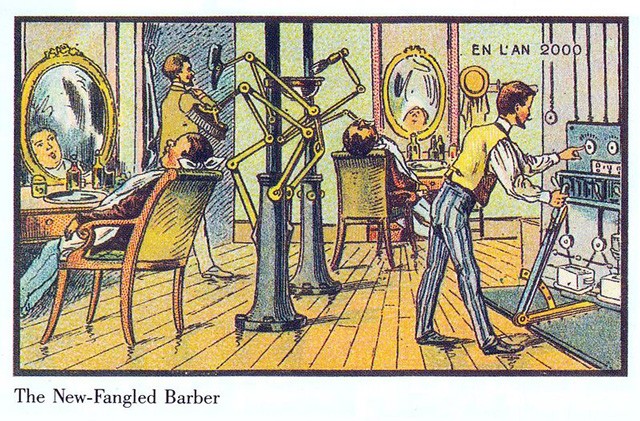

This week’s reading about the future of interaction design made me stop and think. It argued that interfaces confined to “pictures under glass” are a poor foundation for how we will interact with things in the future. The author had some strong thoughts about a video showcasing future interactions. They were skeptical of what the video presented because they had experience working with real prototypes, not just computer-generated animations. Surprisingly, though, that wasn’t their main issue. What bothered them most was that the video offered nothing truly groundbreaking from an interaction standpoint. They felt it was just a small step forward from what already exists, which, in their view, isn’t that great to begin with. They stress how crucial it is to have visionary ideas that truly revolutionize how we interact with technology and the world around us. It got me thinking, but honestly, I don’t fully buy into that idea.

Sure, our bodies have a ton of complex ways of handling things: how we touch, hold, and interact with everything around us. But saying that designs restricted to “pictures under glass” are all bad? I’m not on board with that. Take something as simple as a PDF versus a printed-out reading. That PDF might be called “numb” in terms of design, but let’s be real, it’s way easier to carry, search, and annotate than a stack of printed pages. It’s about usability and convenience, isn’t it? If something isn’t convenient or easy to use, is it even really good interaction?

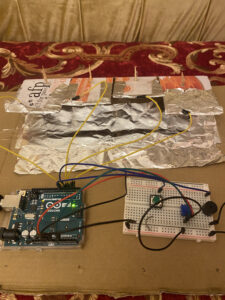

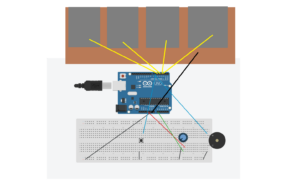

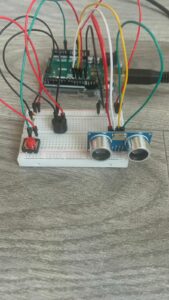

I believe interaction goes beyond just physically touching something. It’s about how easy and helpful a thing is to use. That said, some things will always need to be tangible. There’s magic in touching, feeling textures, estimating weights, and seeing how things respond to us. Think of a couch that adjusts slightly to fit you but still does its job of being a comfy place to sit. That’s something you can’t replicate behind glass.

I think it’s crucial to know what can live behind glass and what can’t. Some folks might prioritize convenience in the future, but there are things you just can’t replicate virtually. I mean, you can’t virtually brush your teeth, right?

Personally, I don’t see the connection, and I don’t agree with that rant about interaction design. Maybe it’s just me, though. Everyone’s got their take on things, and that’s cool.