For this week’s assignment I wanted to create a sketch that responds to audio. From the beginning, I knew I wanted to use a piece of classical music and visualize it through its amplitude. I wanted to recreate the low-fidelity feel of something like this:

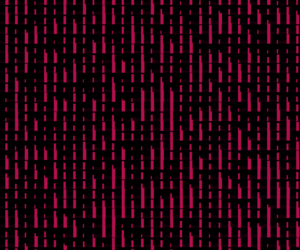

Here is my sketch (open in a separate tab for the sound):

This project uses the p5.js library to build an audio-driven visualization. The Particle class creates particles with properties such as position, angle, speed, and radius. The amplitude of Johann Sebastian Bach’s Orchestral Suite is used to modify each particle’s speed and angle. When the amplitude exceeds a predetermined threshold, the particles change their angle and radius, which produces visibly different behavior during louder passages. Boundary checks keep the particles from flying off the edges of the canvas. The main program generates a grid of tiny particles and dynamically draws lines between nearby ones, producing a complex, evolving pattern.
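
The particle behavior described above can be sketched in plain JavaScript, without p5.js. This is a minimal, hypothetical version and not the sketch's actual code. The amplitude level `vol` is passed in directly rather than read from `p5.Amplitude`. The values below are all assumptions chosen for illustration:

- the threshold of 0.2
- the speed scaling and turn angle
- the radius values
- the 400 x 400 canvas and the grid spacing

Edge handling clamps the position and reflects the direction of travel. That is one possible way to keep particles on the canvas.

```javascript
// Hypothetical constants, chosen for illustration only.
const WIDTH = 400;
const HEIGHT = 400;
const THRESHOLD = 0.2;   // amplitude level above which particles change behavior
const GRID_SPACING = 40; // distance between particles in the starting grid

class Particle {
  constructor(x, y) {
    this.position = { x: x, y: y };
    this.angle = 0;  // direction of travel, in radians
    this.speed = 1;  // pixels per frame
    this.radius = 2; // drawing size
  }

  // vol is an amplitude level between 0.0 and 1.0, e.g. from getLevel().
  update(vol) {
    this.speed = 1 + vol * 10; // louder passages move particles faster
    if (vol > THRESHOLD) {
      // above the threshold, turn and grow to mark the louder moment
      this.angle += Math.PI / 8;
      this.radius = 4;
    } else {
      this.radius = 2;
    }
    this.position.x += Math.cos(this.angle) * this.speed;
    this.position.y += Math.sin(this.angle) * this.speed;
    this.checkEdges();
  }

  // Keep the particle on the canvas: clamp its position and reflect
  // its direction when it crosses an edge.
  checkEdges() {
    if (this.position.x < 0 || this.position.x > WIDTH) {
      this.position.x = Math.min(Math.max(this.position.x, 0), WIDTH);
      this.angle = Math.PI - this.angle; // flip the horizontal component
    }
    if (this.position.y < 0 || this.position.y > HEIGHT) {
      this.position.y = Math.min(Math.max(this.position.y, 0), HEIGHT);
      this.angle = -this.angle; // flip the vertical component
    }
  }
}

// Build a grid of particles covering the canvas.
function makeGrid() {
  const particles = [];
  for (let x = GRID_SPACING / 2; x < WIDTH; x += GRID_SPACING) {
    for (let y = GRID_SPACING / 2; y < HEIGHT; y += GRID_SPACING) {
      particles.push(new Particle(x, y));
    }
  }
  return particles;
}
```

In a p5.js sketch, `update()` would be called once per frame with the current amplitude level. A separate `display()` method would draw the particle with `ellipse()` or `circle()`.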

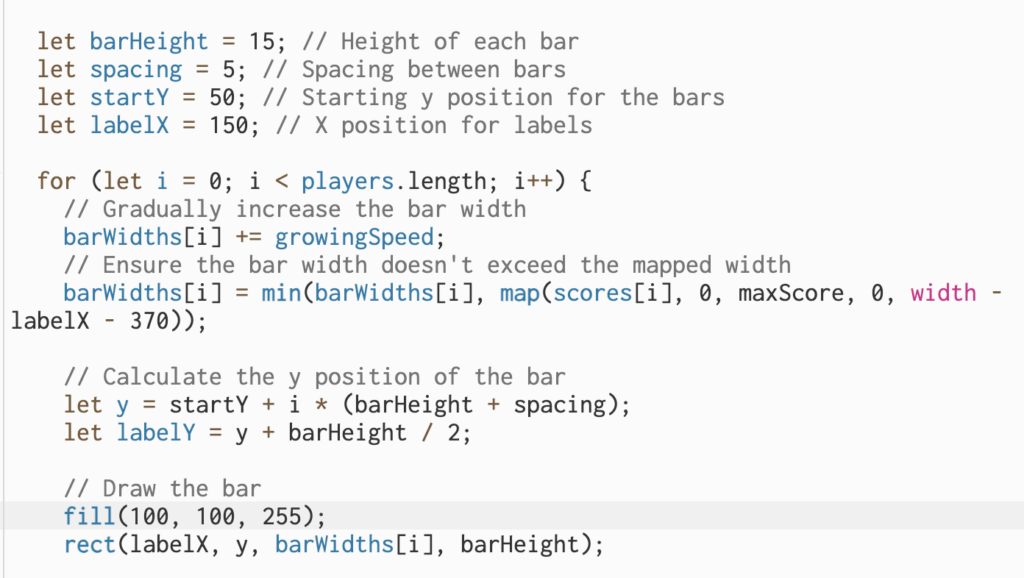

This project was a good opportunity to learn about the p5.sound library. Here are some crucial lines:

```javascript
// create an amplitude analyzer
this.amp = new p5.Amplitude();

// read the current amplitude level
let vol = this.amp.getLevel();
```

The p5.Amplitude class is part of the p5.sound library and measures the amplitude, or loudness, of audio in real time. Creating this instance lets the sketch analyze and respond to the dynamics of the music. The getLevel() method of the p5.Amplitude object (this.amp) returns the current amplitude level as a number between 0.0 and 1.0. This value changes as the audio plays, giving a continuously updated measure of the music’s intensity.
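
As a rough illustration of what an amplitude reading represents, the functions below work on a buffer of audio samples in the range -1.0 to 1.0. `rmsLevel` computes the root-mean-square (RMS) level of the buffer. `smoothLevel` blends consecutive readings so the level does not jump between frames. This is a hedged sketch, similar in spirit to an amplitude follower like p5.Amplitude but not p5.sound's actual implementation. The function names and the smoothing formula are assumptions.

```javascript
// Root-mean-square level of a block of samples in [-1, 1].
// Returns a value between 0.0 (silence) and 1.0 (full scale).
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (const s of samples) {
    sumOfSquares += s * s;
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Exponential smoothing: blend the previous reading with the new one.
// smoothing = 0 returns the new reading unchanged; values closer to 1
// make the level react more slowly.
function smoothLevel(previous, current, smoothing) {
  return previous * smoothing + current * (1 - smoothing);
}

// A full-scale square wave has an RMS level of 1,
// and a full-scale sine wave has an RMS level of about 0.707.
const square = [1, -1, 1, -1];
const sine = Array.from({ length: 1000 }, (_, i) =>
  Math.sin((2 * Math.PI * i) / 100)
);
console.log(rmsLevel(square)); // 1
console.log(rmsLevel(sine).toFixed(3)); // 0.707
```

Because RMS averages the squared samples, a sustained loud passage produces a higher level than a single sharp peak of the same height.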

Another part of the code I was proud of draws the lines between particles:

```javascript
// looping through all the particles
for (let i = 0; i < particles.length; i++) {
  let particleA = particles[i];
  particleA.update();
  particleA.display();

  // initializing an inner loop to compare the current particle to other particles and calculating distances
  for (let j = i + 1; j < particles.length; j++) {
    let particleB = particles[j];
    let distance = dist(
      particleA.position.x,
      particleA.position.y,
      particleB.position.x,
      particleB.position.y
    );

    // drawing a line between particles that are closer than 100 pixels
    if (distance < 100) {
      line(
        particleA.position.x,
        particleA.position.y,
        particleB.position.x,
        particleB.position.y
      );
    }
  }
}
```

The principle is simple. The outer loop visits every particle, and the inner loop compares it with every particle that comes after it in the array, so each pair is checked exactly once. It is quite a basic structure, but it produces one of the main effects in the sketch.
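
Because the inner loop starts at `j = i + 1`, n particles cost n(n - 1)/2 distance checks per frame. That is 4,950 checks for 100 particles and 499,500 for 1,000. One common way to reduce this, not used in the original sketch, is a uniform grid (spatial hashing). Particles are bucketed into cells as wide as the connection distance. Each particle is then compared only with particles in its own cell and the eight neighboring cells. The sketch below makes several assumptions:

- It is plain JavaScript with hypothetical names (`findClosePairs`, `findClosePairsBruteForce`).
- Particles are represented as `{ x, y }` objects.
- It returns index pairs instead of calling p5's `line()`.

```javascript
// Return index pairs [i, j] (i < j) of points closer than maxDist,
// using a uniform grid so only nearby cells are compared.
function findClosePairs(points, maxDist) {
  const cellSize = maxDist;
  const grid = new Map(); // "cx,cy" -> array of point indices
  const cellKey = (cx, cy) => cx + "," + cy;

  // Bucket every point into the cell that contains it.
  points.forEach((p, index) => {
    const key = cellKey(Math.floor(p.x / cellSize), Math.floor(p.y / cellSize));
    if (!grid.has(key)) grid.set(key, []);
    grid.get(key).push(index);
  });

  const pairs = [];
  points.forEach((a, i) => {
    const cx = Math.floor(a.x / cellSize);
    const cy = Math.floor(a.y / cellSize);
    // Any point within maxDist lies in this cell or one of its 8 neighbors.
    for (let dx = -1; dx <= 1; dx++) {
      for (let dy = -1; dy <= 1; dy++) {
        const bucket = grid.get(cellKey(cx + dx, cy + dy));
        if (!bucket) continue;
        for (const j of bucket) {
          if (j <= i) continue; // keep i < j so each pair is reported once
          const b = points[j];
          if (Math.hypot(a.x - b.x, a.y - b.y) < maxDist) {
            pairs.push([i, j]);
          }
        }
      }
    }
  });
  return pairs;
}

// Brute-force version of the same check, mirroring the nested loops in the post.
function findClosePairsBruteForce(points, maxDist) {
  const pairs = [];
  for (let i = 0; i < points.length; i++) {
    for (let j = i + 1; j < points.length; j++) {
      const a = points[i];
      const b = points[j];
      if (Math.hypot(a.x - b.x, a.y - b.y) < maxDist) pairs.push([i, j]);
    }
  }
  return pairs;
}
```

Both functions find the same pairs, possibly in a different order. The grid version pays off mainly when particles are spread out, so each cell holds only a few of them. When most particles crowd into a few cells, it degrades back toward the brute-force cost.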

Ideas for future improvements include performance optimization, especially with a large number of particles. The audio tended to glitch once I initialized more particles (sometimes this can be heard even in the current sketch). I would also like to introduce different types of particles with varying behaviors; for example, some particles could respond to bass frequencies while others react to treble.
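
For the bass-versus-treble idea, p5.sound's `p5.FFT` class reports energy in named frequency ranges through its `getEnergy()` method. The underlying idea can be shown without the library. The function below averages the spectrum bins that overlap a frequency band. It takes a spectrum array and the audio sample rate. The spectrum could be like the one `p5.FFT.analyze()` returns: by default, 1024 values from 0 to 255. The following are assumptions for this sketch, not p5.sound's exact implementation:

- the function name `bandEnergy`
- the band limits (20 to 140 Hz for bass, 5,200 to 14,000 Hz for treble)
- the bin-to-frequency mapping

```javascript
// Average the magnitude of spectrum bins that overlap [lowHz, highHz].
// spectrum: bin magnitudes (e.g. 0-255), evenly spaced from 0 Hz up to
// the Nyquist frequency (sampleRate / 2).
function bandEnergy(spectrum, sampleRate, lowHz, highHz) {
  const nyquist = sampleRate / 2;
  const hzPerBin = nyquist / spectrum.length;
  const first = Math.max(0, Math.floor(lowHz / hzPerBin));
  const last = Math.min(spectrum.length - 1, Math.ceil(highHz / hzPerBin));
  let total = 0;
  for (let i = first; i <= last; i++) {
    total += spectrum[i];
  }
  return total / (last - first + 1);
}

// Hypothetical band limits in Hz.
const BASS = [20, 140];
const TREBLE = [5200, 14000];

// Example: a spectrum with energy only in the lowest bins.
const sampleRate = 44100;
const spectrum = new Array(1024).fill(0);
for (let i = 0; i < 8; i++) spectrum[i] = 255;

const bass = bandEnergy(spectrum, sampleRate, BASS[0], BASS[1]);
const treble = bandEnergy(spectrum, sampleRate, TREBLE[0], TREBLE[1]);
console.log(bass > treble); // true
```

A bass-reactive particle could then be updated with `bass / 255` and a treble-reactive one with `treble / 255`. Both values are on the same 0-to-1 scale as the amplitude level.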