PROJECT DESCRIPTION

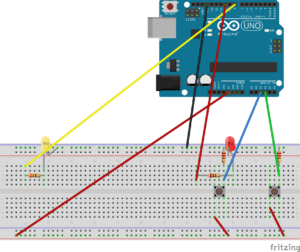

My project, Mani-Drive, is a robot car controlled by an Arduino Uno board and a p5.js program. The p5.js program uses the ml5.js library to track the user’s hand through a webcam, and the position of the tracked hand determines how the car moves: moving the hand left, right, or up drives the car left, right, or forward, while moving the hand down makes it reverse. The p5.js program sends these movement commands to the Arduino board over a serial connection.

The circuit consists of the Arduino Uno board, basic wire connections, a DRV8833 DC motor driver, a resistor, an ultrasonic sensor, four wheels, a buzzer, an LED, and a breadboard. The Arduino Uno is responsible for motor control and obstacle detection. It uses the ultrasonic sensor to measure the distance to obstacles. If an obstacle is within the safe distance (10 cm in the code), the Arduino stops the car, plays a sound on the buzzer, and turns on the LED as a warning. The Arduino code checks the distance on every pass through its loop and resumes normal movement, driven by the commands from the p5.js program, once no obstacle is detected.

INTERACTION DESIGN

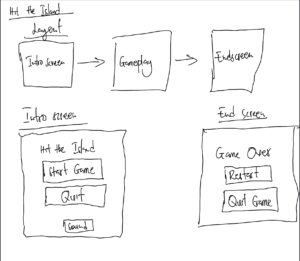

The interaction design aims to make controlling the robot car intuitive. The user runs the p5.js program and presses the “s” key to start it and activate hand tracking. The webcam captures the user’s hand, and its position is drawn as a circle on top of the live video feed. Moving the hand left, right, up, or down moves the robot car in the corresponding direction, and the circle changes color to show which direction is intended. If an obstacle is detected, a warning message appears on the screen. On the car itself, the Arduino board measures obstacle distances with the ultrasonic sensor and alerts the user through the LED (visual) and the buzzer (audible). Together, these let the user drive the car with natural hand gestures while receiving real-time visual and auditory feedback.

CODE (ARDUINO)

    // Pin definitions for motor control
    const int ain1Pin = 3;
    const int ain2Pin = 4;
    const int pwmaPin = 5;
    const int bin1Pin = 8;
    const int bin2Pin = 7;
    const int pwmbPin = 6;

    const int triggerPin = 9;    // Pin connected to the trigger pin of the ultrasonic sensor
    const int echoPin = 10;      // Pin connected to the echo pin of the ultrasonic sensor
    const int safeDistance = 10; // Define a safe distance in centimeters
    const int buzzerPin = 11;    // Pin connected to the buzzer
    const int ledPin = 2;        // Pin connected to the LED

    long duration;
    int distance;
    bool isBraking = false;

    void setup() {
      // Configure motor control pins as outputs
      pinMode(ain1Pin, OUTPUT);
      pinMode(ain2Pin, OUTPUT);
      pinMode(pwmaPin, OUTPUT);
      pinMode(bin1Pin, OUTPUT);
      pinMode(bin2Pin, OUTPUT);
      pinMode(pwmbPin, OUTPUT);

      // Initialize the ultrasonic sensor pins
      pinMode(triggerPin, OUTPUT);
      pinMode(echoPin, INPUT);

      // Initialize the buzzer pin
      pinMode(buzzerPin, OUTPUT);

      // Initialize the LED pin
      pinMode(ledPin, OUTPUT);

      // Initialize serial communication
      Serial.begin(9600);
    }

    void loop() {
      // Measure the distance
      digitalWrite(triggerPin, LOW);
      delayMicroseconds(2);
      digitalWrite(triggerPin, HIGH);
      delayMicroseconds(10);
      digitalWrite(triggerPin, LOW);
      duration = pulseIn(echoPin, HIGH);

      // Calculate the distance in centimeters
      distance = duration * 0.034 / 2;

      // Handle object detection
      if (distance <= safeDistance) {
        if (!isBraking) {
          isBraking = true;
          stopRobot();
          playNote();
          digitalWrite(ledPin, HIGH); // Turn on the LED when braking
        }
      } else {
        isBraking = false;
        digitalWrite(ledPin, LOW); // Turn off the LED when not braking

        // Continue with normal movement
        if (Serial.available() > 0) {
          char command = Serial.read();

          // Handle movement commands
          switch (command) {
            case 'L':
              moveLeft();
              break;
            case 'R':
              moveRight();
              break;
            case 'U':
              moveForward();
              break;
            case 'D':
              moveBackward();
              break;
            case 'S':
              stopRobot();
              break;
          }
        }
      }
    }

    // Move the robot left
    void moveLeft() {
      digitalWrite(ain1Pin, HIGH);
      digitalWrite(ain2Pin, LOW);
      analogWrite(pwmaPin, 0);
      digitalWrite(bin1Pin, HIGH);
      digitalWrite(bin2Pin, LOW);
      analogWrite(pwmbPin, 255);
    }

    // Move the robot right
    void moveRight() {
      digitalWrite(ain1Pin, LOW);
      digitalWrite(ain2Pin, HIGH);
      analogWrite(pwmaPin, 255);
      digitalWrite(bin1Pin, LOW);
      digitalWrite(bin2Pin, HIGH);
      analogWrite(pwmbPin, 0);
    }

    // Move the robot forward
    void moveForward() {
      digitalWrite(ain1Pin, LOW);
      digitalWrite(ain2Pin, HIGH);
      analogWrite(pwmaPin, 255);
      digitalWrite(bin1Pin, HIGH);
      digitalWrite(bin2Pin, LOW);
      analogWrite(pwmbPin, 255);
    }

    // Move the robot backward
    void moveBackward() {
      digitalWrite(ain1Pin, HIGH);
      digitalWrite(ain2Pin, LOW);
      analogWrite(pwmaPin, 255);
      digitalWrite(bin1Pin, LOW);
      digitalWrite(bin2Pin, HIGH);
      analogWrite(pwmbPin, 255);

      // Check the distance after moving backward
      delay(10); // Adjust this delay based on your needs

      // Measure the distance again
      digitalWrite(triggerPin, LOW);
      delayMicroseconds(2);
      digitalWrite(triggerPin, HIGH);
      delayMicroseconds(10);
      digitalWrite(triggerPin, LOW);
      duration = pulseIn(echoPin, HIGH);

      // Calculate the distance in centimeters
      int newDistance = duration * 0.034 / 2;

      // If an obstacle is detected, stop the robot
      if (newDistance <= safeDistance) {
        stopRobot();
        playNote();
        digitalWrite(ledPin, HIGH); // Turn on the LED when braking
      } else {
        digitalWrite(ledPin, LOW); // Turn off the LED when not braking
      }
    }

    // Stop the robot
    void stopRobot() {
      digitalWrite(ain1Pin, LOW);
      digitalWrite(ain2Pin, LOW);
      analogWrite(pwmaPin, 0);
      digitalWrite(bin1Pin, LOW);
      digitalWrite(bin2Pin, LOW);
      analogWrite(pwmbPin, 0);
    }

    // Play a note on the buzzer
    void playNote() {
      // Define the frequency of the note to be played
      int noteFrequency = 1000; // Adjust this value to change the note frequency

      // Play the note on the buzzer
      tone(buzzerPin, noteFrequency);
      delay(500); // Adjust this value to change the note duration
      noTone(buzzerPin);
    }

DESCRIPTION OF CODE

The code begins by defining the pin assignments for motor control, the ultrasonic sensor, the buzzer, and the LED. These pins are configured as inputs or outputs in the setup() function, which also starts serial communication at 9600 baud.

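The core functionality is implemented within the loop() function, which is executed repeatedly. On each pass, the code triggers the ultrasonic sensor with a 10-microsecond pulse and uses pulseIn() to time the returning echo in microseconds. That duration is converted into a distance in centimeters by multiplying it by 0.034 (the approximate speed of sound in centimeters per microsecond) and dividing by 2, because the pulse travels to the obstacle and back. This distance is then compared to the predefined safe distance of 10 cm.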
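As a rough illustration of that conversion, the sketch below repeats the same arithmetic in JavaScript (the language of the p5.js side) so it can be tried in a browser console. The function and constant names are hypothetical and not part of the project; only the 0.034 factor and the 10 cm threshold come from the Arduino code.

```javascript
// Approximate speed of sound in centimeters per microsecond (value used in the Arduino code).
const SPEED_OF_SOUND_CM_PER_US = 0.034;
// Same threshold as safeDistance in the Arduino code.
const SAFE_DISTANCE_CM = 10;

// Hypothetical helper: convert a round-trip echo time (microseconds) to a one-way distance (cm).
// Math.trunc mirrors the truncation that happens when the Arduino stores the result in an int.
function echoToCentimeters(durationUs) {
  return Math.trunc((durationUs * SPEED_OF_SOUND_CM_PER_US) / 2);
}

// Hypothetical helper: true when the measured distance would trigger braking.
function isTooClose(durationUs) {
  return echoToCentimeters(durationUs) <= SAFE_DISTANCE_CM;
}

console.log(echoToCentimeters(600), isTooClose(600));   // 10 true
console.log(echoToCentimeters(1200), isTooClose(1200)); // 20 false
```

An echo of 600 microseconds therefore corresponds to about 10 cm, which is right at the braking threshold, while 1200 microseconds corresponds to about 20 cm and leaves the car free to move.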

If an object is detected within the safe distance, the robot enters a braking mode. The stopRobot() function is called to stop its movement by setting the appropriate motor control pins and turning off the motors. The playNote() function is called to emit an audible alert using the buzzer, and the LED is illuminated by setting the corresponding pin to high.

On the other hand, if no objects are detected within the safe distance, the robot continues with normal movement. On each pass through the loop, it checks whether a command has arrived over serial communication and, if so, reads one character. These commands correspond to different movement actions:

moveLeft(): This function is called when the command ‘L’ is received. It turns the robot left by stopping motor A (PWM value 0) while driving motor B at full speed (PWM value 255) in its forward direction.

moveRight(): This function is called when the command ‘R’ is received. It turns the robot right by driving motor A at full speed (PWM value 255) in its forward direction while stopping motor B (PWM value 0).

moveForward(): This function is called when the command ‘U’ is received. It sets the motor control pins to make the robot move forward by activating both motors in the same direction.

moveBackward(): This function is called when the command ‘D’ is received. It sets the motor control pins to make the robot move backward by running both motors in reverse. After a 10 ms delay, it performs an additional obstacle check by measuring the distance with the ultrasonic sensor again. If an obstacle is detected, the stopRobot() function is called, the playNote() function emits an audible alert, and the LED is turned on; otherwise the LED is turned off.

stopRobot(): This function is called to stop the robot’s movement. It sets all motor control pins to low and stops the motors by setting the PWM value to 0.

playNote(): This function is called to generate a tone on the buzzer. The frequency can be adjusted through the noteFrequency variable (1000 Hz) and the duration through the delay() value (500 ms). It uses tone() to start the note and noTone() to stop it.

The modular structure of the code, with separate functions for each movement action, allows for easier maintenance and future enhancements. The implementation enables the robot car to navigate its environment, detect obstacles, and take appropriate actions for collision avoidance. It showcases the integration of hardware components with the Arduino microcontroller and demonstrates the practical application of sensor-based control in robotics.

p5.js CODE (FOR HAND DETECTION AND MOVEMENT OF ROBOT)

    function gotHands(results = []) {
      if (!programStarted && results.length > 0) {
        const hand = results[0].annotations.indexFinger[3];
        handX = hand[0];
        handY = hand[1];

        // Start the program when hand is detected and 's' is pressed
        if (handX && handY && keyIsPressed && (key === 's' || key === 'S')) {
          programStarted = true;
          startRobot();
        }
      } else if (results.length > 0) {
        const hand = results[0].annotations.indexFinger[3];
        handX = hand[0];
        handY = hand[1];
      } else {
        handX = null;
        handY = null;
      }
    }

    function moveLeft() {
      if (isConnected) {
        serial.write('L');
      }
    }

    function moveRight() {
      if (isConnected) {
        serial.write('R');
      }
    }

    function moveForward() {
      if (isConnected) {
        serial.write('U');
      }
    }

    function moveBackward() {
      if (isConnected) {
        serial.write('D');
      }
    }

    function stopRobot() {
      if (isConnected) {
        serial.write('S');
      }
    }

    function startRobot() {
      // Start the robot movement when the hand tracking model is ready
      console.log('Hand tracking model loaded');
    }

    // Function to detect obstacle
    function detectObstacle() {
      obstacleDetected = true;
      carMovingBack = true;
    }

    // Function to stop obstacle detection
    function stopObstacleDetection() {
      obstacleDetected = false;
      carMovingBack = false;
    }

DESCRIPTION OF p5.js CODE

The code starts by declaring variables such as isConnected, handX, handY, video, handpose, obstacleDetected, carMovingBack, and programStarted. These variables are used to track the connection status, hand coordinates, video capture, hand tracking model, obstacle detection status, and program status.

In the preload() function, images for the instructions and introduction screen are loaded using the loadImage() function.

The keyPressed() function is triggered when a key is pressed. In this case, if the ‘s’ key is pressed, the programStarted variable is set to true, and the startRobot() function is called.

The setup() function initializes the canvas and sets up the serial communication with the Arduino board using the p5.serialport library. It also creates a video capture from the webcam and initializes the handpose hand tracking model from the ml5.js library. The gotHands() function is assigned as the callback for hand tracking predictions.

The introScreen() function displays the introduction screen image using the image() function, and the instructions() function displays the instructions image.

The draw() function is the main loop of the program. If the programStarted variable is false, the intro screen is displayed and the function returns early for that frame. Otherwise, the webcam video is displayed on the canvas.

If the handX and handY variables have values, an ellipse is drawn at the position of the tracked hand. Based on the hand position, different movement commands are sent to the Arduino board using the moveLeft(), moveRight(), moveForward(), and moveBackward() functions. The color of the ellipse indicates the direction of movement.

The code checks if the hand position is out of the frame and stops the robot’s movement in that case. It also checks for obstacle detection and displays a warning message on the canvas if an obstacle is detected.
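The mapping from hand position to movement command described above could be sketched roughly as follows. This is only an illustration under stated assumptions: the helper name commandForHand and the one-third zone boundaries are hypothetical, since the project’s actual thresholds are not shown here. Only the command letters ('L', 'R', 'U', 'D', 'S') come from the project code.

```javascript
// Hypothetical helper: map a tracked hand position to one of the command
// letters the Arduino code understands ('L', 'R', 'U', 'D', 'S').
// The one-third zone boundaries are an assumption, not the project's values.
function commandForHand(handX, handY, width, height) {
  // No hand tracked: stop the car.
  if (handX === null || handY === null) return 'S';
  // Hand outside the frame: stop the car.
  if (handX < 0 || handX > width || handY < 0 || handY > height) return 'S';

  if (handX < width / 3) return 'L';        // left third of the frame
  if (handX > (2 * width) / 3) return 'R';  // right third of the frame
  if (handY < height / 3) return 'U';       // top of the middle column
  if (handY > (2 * height) / 3) return 'D'; // bottom of the middle column
  return 'S';                               // center zone: hold still
}

// Example with a 640x480 canvas:
console.log(commandForHand(50, 240, 640, 480));  // L
console.log(commandForHand(320, 450, 640, 480)); // D
```

Inside draw(), the returned letter could be passed to the matching moveLeft(), moveRight(), moveForward(), moveBackward(), or stopRobot() function. One possible refinement, offered as a suggestion only, is to send a command just when it differs from the previous one, so the serial link is not written to on every frame.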

The gotHands() function is the callback function for hand tracking predictions. It updates the handX and handY variables based on the detected hand position. If the programStarted variable is false and a hand is detected while the ‘s’ key is pressed, the programStarted variable is set to true, and the startRobot() function is called.

The moveLeft(), moveRight(), moveForward(), moveBackward(), and stopRobot() functions are responsible for sending corresponding commands to the Arduino board through serial communication.

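The startRobot() function is called when the program starts, that is, when the ‘s’ key is pressed (from keyPressed(), or from gotHands() while a hand is detected). Currently, it only logs the message “Hand tracking model loaded” to the console.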

The detectObstacle() function sets the obstacleDetected and carMovingBack variables to true, indicating an obstacle has been detected and the robot should move back.

The stopObstacleDetection() function resets the obstacleDetected and carMovingBack variables, indicating the obstacle has been cleared and the robot can resume normal movement.

PARTS I’M PROUD OF AND FUTURE IMPROVEMENTS

The part of the project I’m most proud of is getting the hand tracking library working in the code, even though it still has some minor control bugs. I started by controlling the car with the arrow keys on the keyboard. Once that worked, I integrated the ml5.js library to track the user’s hand through the webcam and mapped the hand movements to the corresponding arrow-key commands to make the robot move. Future improvements include making the Arduino setup communicate with the p5.js program wirelessly so the car can move freely, and improving the integration of the hand tracking model so the car responds more accurately to the movements of the user’s hand. I also intend to add an LCD screen and more LEDs to make it look more like an actual car.